Of course! This is a very common topic for anyone working with Natural Language Processing (NLP) in Python. The relationship between NLTK and Stanford's NLP tools is a source of confusion for many.

Let's break it down clearly.

The Core Relationship: Two Separate Tools

At its heart, the relationship is this:

- NLTK (Natural Language Toolkit) is a general-purpose Python library for teaching and working with NLP. It's like a Swiss Army knife for NLP in Python.

- The Stanford NLP Group produces a suite of high-quality NLP tools (such as a Part-of-Speech Tagger, a Named Entity Recognizer, and a Parser). These tools are written in Java.

NLTK is NOT the Stanford tools. Instead, NLTK provides a wrapper or an interface that allows you to easily call the Stanford Java tools from within your Python code.
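To make the wrapper idea concrete, here is a minimal sketch of what such a bridge does: it assembles a `java` command line and hands it to a subprocess. The helper names `build_tagger_command` and `run_tagger` and the example paths are hypothetical, and NLTK's own implementation differs in detail (for example in how it passes input text and memory options). The main class and the `-model` / `-textFile` flags are the ones used by the Stanford tagger's command-line interface.

```python
import subprocess
from typing import List


def build_tagger_command(jar_path: str, model_path: str, text_file: str) -> List[str]:
    """Assemble the command line a Python wrapper could pass to Java.

    All three paths are placeholders supplied by the caller.
    """
    return [
        "java",
        "-cp", jar_path,                                # classpath: the tagger jar
        "edu.stanford.nlp.tagger.maxent.MaxentTagger",  # the tagger's main class
        "-model", model_path,                           # trained model file
        "-textFile", text_file,                         # text to tag
    ]


def run_tagger(command: List[str]) -> str:
    """Run the command and return whatever the Java process writes to stdout."""
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return result.stdout


# Building the command needs neither Java nor the jars, so it is safe to inspect.
command = build_tagger_command(
    "stanford-postagger.jar", "english.tagger", "input.txt"  # hypothetical paths
)
print(" ".join(command))
```

Only `run_tagger` actually needs Java and the Stanford files; everything else is plain Python, which is why the wrapper approach feels native from the caller's side.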

Why Use Stanford Tools with NLTK?

You might wonder, "Why go through all this trouble? Why not just use the models that come with NLTK?"

The answer is performance and accuracy.

Stanford's tools have long served as a reference point for many classic NLP tasks. They are:

- Highly Accurate: They are trained on large annotated corpora and have been strong, widely cited baselines.

- Feature-Rich: They offer more detailed and robust analyses than many other tools.

- Well-Researched: They are developed and maintained by a leading academic research group.

The main trade-off is that they are more complex to set up and are slower than some Python-native alternatives (like spaCy or the taggers bundled with NLTK itself), partly because each call from NLTK has to start a Java process.

Step-by-Step Guide: Using Stanford NLP with NLTK

Here is a complete, step-by-step guide to get you started.

Step 1: Download the Stanford NLP Package

- Go to the Stanford CoreNLP website.

- Click the "Download" link.

- You will download a ZIP file (e.g., stanford-corenlp-4.5.5.zip).

- Unzip this file to a permanent location on your computer. Let's say you unzip it to C:/stanfordnlp/ (on Windows) or ~/stanfordnlp/ (on macOS/Linux).

Step 2: Download the Models

The package contains the engine (the Java code), and it needs trained models to run. Recent CoreNLP ZIP releases normally already include the default English models as a -models.jar file, so check the unzipped directory first. If the file is missing, or you need models for another language, download them separately:

- Go to the Stanford CoreNLP Models page.

- Download the model file that matches the version of CoreNLP you downloaded (e.g., stanford-corenlp-4.5.5-models.jar).

- Place this downloaded .jar file inside the same directory where you unzipped CoreNLP.

Your directory should now look something like this:

C:/stanfordnlp/
├── stanford-corenlp-4.5.5.jar
├── stanford-corenlp-4.5.5-models.jar   <-- PUT THE MODEL FILE HERE
├── edu/
├── license.txt
└── ... (other files)
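After Steps 1 and 2, a quick check from Python can confirm which CoreNLP jars are actually in the directory. This is a small sketch using only the standard library; the function name `find_corenlp_jars` and the `~/stanfordnlp` location are assumptions taken from the example paths in this guide.

```python
from pathlib import Path
from typing import Dict, List


def find_corenlp_jars(directory: str) -> Dict[str, List[str]]:
    """Split the stanford-corenlp-*.jar files in `directory` into two groups.

    "models" holds jars whose name ends in -models.jar; "other" holds the rest
    (the main code jar, and possibly extras such as sources or javadoc jars).
    """
    folder = Path(directory).expanduser()
    jars = sorted(p.name for p in folder.glob("stanford-corenlp-*.jar"))
    return {
        "models": [name for name in jars if name.endswith("-models.jar")],
        "other": [name for name in jars if not name.endswith("-models.jar")],
    }


if __name__ == "__main__":
    found = find_corenlp_jars("~/stanfordnlp")  # assumed install location
    print("Model jars:", found["models"] or "none found")
    print("Other jars:", found["other"] or "none found")
```

If the "models" list comes back empty, the model jar is either missing or sitting in a different directory.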

Step 3: Set Up Environment Variables (Recommended)

To make your life easier, you should tell your system where to find the Stanford tools. This avoids having to write the full path to the .jar file in your Python script every time.

- Windows:

  - Search for "Environment Variables" in the Start Menu.

  - Click "Edit the system environment variables".

  - In the "System variables" section, click "New...".

  - Variable name: STANFORD_CORENLP

  - Variable value: C:\stanfordnlp (or the path to your unzipped directory).

  - Click OK on all windows.

- macOS / Linux:

  - Open your terminal.

  - Add the following line to your shell's configuration file (e.g., ~/.bashrc, ~/.zshrc). Use $HOME rather than ~ here, because the shell does not expand ~ inside double quotes:

    export STANFORD_CORENLP="$HOME/stanfordnlp"

  - Save the file and then run source ~/.bashrc (or source ~/.zshrc) to apply the changes.
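On the Python side, the variable can be read once and turned into a path. The sketch below assumes the variable name STANFORD_CORENLP used in this guide; the helper `corenlp_home` is hypothetical. Calling `expanduser()` also covers the case where the stored value contains a literal `~`.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def corenlp_home(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the CoreNLP directory named by STANFORD_CORENLP.

    `env` defaults to the real process environment; passing a dict makes the
    function easy to try out without changing any system settings.
    """
    env = os.environ if env is None else env
    raw = env.get("STANFORD_CORENLP")
    if not raw:
        raise RuntimeError("STANFORD_CORENLP is not set; see Step 3.")
    return Path(raw).expanduser()


# Example with an explicit mapping instead of the real environment.
print(corenlp_home({"STANFORD_CORENLP": "~/stanfordnlp"}))
```

Failing early with a clear message is friendlier than letting a missing variable surface later as a confusing path error.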

Step 4: Install NLTK and Java

Make sure you have Python and NLTK installed. You also need Java installed on your system and available in your system's PATH.

pip install nltk

To check if Java is installed, open your terminal or command prompt and type:

java -version

If you get a version number, you're good to go (CoreNLP 4.x requires Java 8 or later). If not, you'll need to install Java, for example the Java Development Kit (JDK) from the Oracle website or an OpenJDK build.
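The same check can be done from Python, which is handy at the top of a script. This is a minimal sketch with the standard library; the function name `java_version` is hypothetical.

```python
import shutil
import subprocess
from typing import Optional


def java_version() -> Optional[str]:
    """Return the first line of `java -version`, or None if java is not on PATH."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` prints its report to stderr rather than stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    report = (result.stderr or result.stdout).strip()
    return report.splitlines()[0] if report else None


version = java_version()
print(version if version else "Java was not found on PATH.")
```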

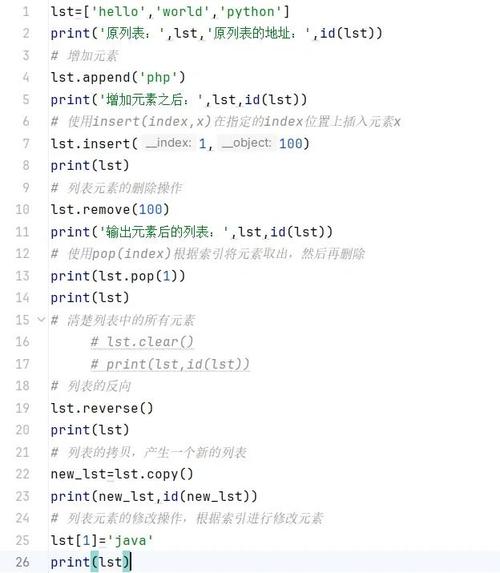

Step 5: Write the Python Code

Now for the fun part! Let's use NLTK to call the Stanford POS Tagger.

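The wrapper classes that connect NLTK to the Stanford tools ship with NLTK itself (in the nltk.tag.stanford and nltk.parse.stanford modules), so there is nothing extra to download for them. The only NLTK resource this example needs is the tokenizer data used by word_tokenize.

import nltk

# You only need to do this once
nltk.download('punkt')  # tokenizer data for nltk.word_tokenize; newer NLTK releases may ask for 'punkt_tab' instead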

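Here is the Python script to run the tagger. We will use the StanfordPOSTagger class. Recent NLTK releases mark this class as deprecated in favor of nltk.parse.corenlp.CoreNLPParser, which talks to a running CoreNLP server, but the class is still available and is the simplest way to show the wrapper approach.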

import os

import nltk
from nltk.tag import StanfordPOSTagger

# --- IMPORTANT: Set the paths ---
# StanfordPOSTagger takes two files:
#   1. a trained model file (a .tagger file), and
#   2. the jar that contains the tagger's Java code.
# The paths below assume the standalone Stanford POS Tagger download was unzipped
# into C:/stanfordnlp/stanford-postagger/; adjust them to match your installation.

# If you set the environment variable, you can build the location from it:
# base = os.path.join(os.environ['STANFORD_CORENLP'], 'stanford-postagger')
# Or, you can hardcode it:
base = 'C:/stanfordnlp/stanford-postagger'

# 1. Path to the trained model
model_path = os.path.join(base, 'models', 'english-left3words-distsim.tagger')
# 2. Path to the tagger jar
jar_path = os.path.join(base, 'stanford-postagger.jar')

try:
    # --- Create the tagger object ---
    tagger = StanfordPOSTagger(model_path, jar_path, encoding='utf8')

    # --- Use the tagger ---
    sentence = "The quick brown fox jumps over the lazy dog."
    tokens = nltk.word_tokenize(sentence)
    tagged_words = tagger.tag(tokens)  # list of (word, tag) tuples

    print(f"Sentence: {sentence}")
    print("Tagged Output:")
    print(tagged_words)
except Exception as e:
    print(f"An error occurred: {e}")
    print("\nPlease make sure:")
    print("1. Java is installed and in your system's PATH.")
    print("2. The paths to the model and .jar files are correct.")
    print("3. You have downloaded the model file and placed it in the correct directory.")
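The tagger returns a list of (word, tag) tuples, so ordinary Python is enough to post-process it. The sketch below groups words by tag; the helper `group_by_tag` is hypothetical, and the sample list is a hand-written stand-in for `tagged_words`, not real tagger output.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def group_by_tag(tagged: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Collect the words seen for each part-of-speech tag, keeping word order."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for word, tag in tagged:
        groups[tag].append(word)
    return dict(groups)


# Hand-written stand-in for `tagged_words` (illustrative, not real tagger output).
sample = [("The", "DT"), ("dog", "NN"), ("chased", "VBD"), ("the", "DT"), ("cat", "NN")]
for tag, words in group_by_tag(sample).items():
    print(f"{tag}: {', '.join(words)}")
```

In a real script you would pass `tagged_words` from the tagger call instead of `sample`.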

Common Stanford Tools Used with NLTK

The process is similar for other Stanford tools. You just need to import the correct class and use the right model file.

| Task | NLTK Class | Stanford Model File (Example) |
|---|---|---|
| Part-of-Speech Tagging | StanfordPOSTagger | english-left3words-distsim.tagger |
| Named Entity Recognition (NER) | StanfordNERTagger | english.muc.7class.distsim.crf.ser.gz |
| Parsing (Dependency) | StanfordDependencyParser | Included in the main stanford-corenlp-4.5.5-models.jar |

Example: Named Entity Recognition (NER)

import os

from nltk.tag import StanfordNERTagger

# The wrapper class ships with NLTK; the NER model file itself comes from Stanford.

# Set the path to the NER model
# ner_model_path = os.path.join(os.environ.get('STANFORD_CORENLP'), 'edu', 'stanford', 'nlp', 'models', 'ner', 'english.muc.7class.distsim.crf.ser.gz')
# Or hardcoded:
ner_model_path = 'C:/stanfordnlp/edu/stanford/nlp/models/ner/english.muc.7class.distsim.crf.ser.gz'

# Set the path to the CoreNLP jar
corenlp_path = 'C:/stanfordnlp/stanford-corenlp-4.5.5.jar'

try:
    ner_tagger = StanfordNERTagger(
        ner_model_path,
        corenlp_path,
        encoding='