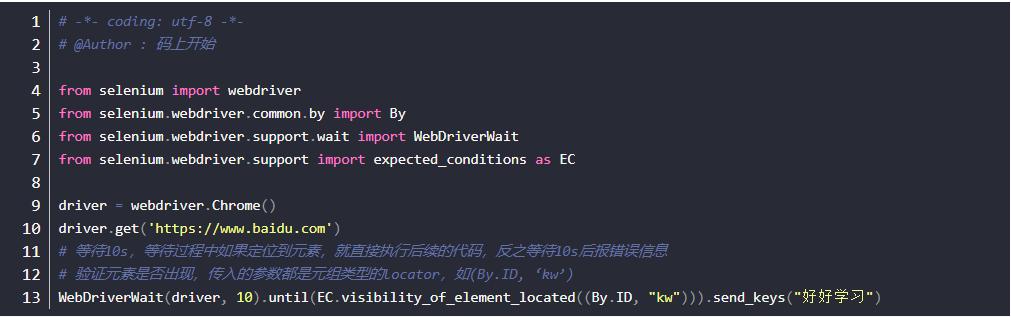

Of course! Using Python with Selenium to handle PDFs is a common task in web scraping and automation. The key thing to understand is that Selenium itself cannot parse or read the content of a PDF file.

Selenium is a browser automation tool. Its job is to drive a web browser. So, when you click a link that opens a PDF, Selenium's job is to make the browser download the file. Then, you use a separate Python library to read the downloaded PDF file.

Here’s a complete guide covering the most common scenarios:

- Scenario 1: Downloading a PDF from a link (Most Common)

- Scenario 2: Handling PDFs that open in the browser tab (Inline)

- Scenario 3: Reading Text from the Downloaded PDF

Prerequisites

First, you need to install the necessary libraries.

    # For browser automation
    pip install selenium

    # For managing browser drivers (easiest way)
    pip install webdriver-manager

    # For reading PDF content (we'll use PyPDF2 for this example)
    pip install PyPDF2

    # For downloading files directly (used in Scenario 2)
    pip install requests

Scenario 1: Downloading a PDF from a Link (The Standard Approach)

This is the most robust method. You configure the browser to automatically save PDFs to a specific folder without opening them, and then you process the file.

Step 1: Set Up Browser Preferences for Automatic Download

We'll use Chrome for this example. The core idea is to change Chrome's download.default_directory and plugins.always_open_pdf_externally settings.

    import os
    import time

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service as ChromeService
    from webdriver_manager.chrome import ChromeDriverManager

    # --- 1. Configure Download Directory ---
    # Create a directory to save the PDFs if it doesn't exist
    download_dir = os.path.join(os.getcwd(), "pdf_downloads")
    os.makedirs(download_dir, exist_ok=True)

    # --- 2. Set Chrome Options ---
    chrome_options = webdriver.ChromeOptions()
    prefs = {
        "download.default_directory": download_dir,  # Where downloads are saved
        "download.prompt_for_download": False,  # Download without a "Save as" dialog
        "download.directory_upgrade": True,
        "plugins.always_open_pdf_externally": True,  # Download PDFs instead of opening the viewer
        "safebrowsing.enabled": True,  # Safe Browsing stays enabled
    }
    chrome_options.add_experimental_option("prefs", prefs)

    # --- 3. Initialize the WebDriver ---
    # Use webdriver-manager to handle the driver automatically
    service = ChromeService(ChromeDriverManager().install())
    driver = webdriver.Chrome(service=service, options=chrome_options)

    # --- 4. Automate the Download ---
    try:
        # This URL points directly at a PDF. With always_open_pdf_externally
        # enabled, navigating to it starts the download.
        driver.get("https://www.w3.org/WAI/ER/tests/xhtml/testfiles/resources/pdf/dummy.pdf")

        # On a page that links to a PDF, find and click the link instead
        # (inspect the page to find the correct locator), for example:
        # driver.find_element("link text", "Download the dummy PDF file").click()

        print(f"PDF download initiated. Check the '{download_dir}' folder.")

        # Wait for the download to complete (optional but recommended).
        # A simple way is to wait for the file to appear and its size to be stable.
        # For a more robust solution, consider using WebDriverWait with a custom condition.
        time.sleep(5)  # Simple wait, replace with a better method for production
    finally:
        # Close the browser
        driver.quit()
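
The fixed `time.sleep(5)` above is a guess: too short for a large file, wasted time for a small one. As a rough sketch of the "wait for the file to appear" idea, the helper below polls the download folder until a new PDF shows up and no partial downloads remain. The function name `wait_for_download` and its parameters are hypothetical, and it assumes Chrome's habit of writing in-progress downloads to temporary files ending in `.crdownload`.

```python
import os
import time


def wait_for_download(directory, before=frozenset(), timeout=30.0, poll_interval=0.5):
    """Return the path of a newly finished PDF in `directory`.

    `before` holds the file names that were already in the folder, so that
    older downloads are ignored. Raises TimeoutError if nothing finishes in time.
    Assumption: unfinished Chrome downloads end in ".crdownload".
    """
    deadline = time.monotonic() + timeout
    while True:
        names = [n for n in os.listdir(directory) if n not in before]
        in_progress = [n for n in names if n.endswith(".crdownload")]
        pdfs = [n for n in names if n.lower().endswith(".pdf")]
        if pdfs and not in_progress:
            # Several new PDFs: pick the most recently modified one
            newest = max(pdfs, key=lambda n: os.path.getmtime(os.path.join(directory, n)))
            return os.path.join(directory, newest)
        if time.monotonic() >= deadline:
            raise TimeoutError(f"No finished PDF appeared in {directory!r}")
        time.sleep(poll_interval)
```

To use it, record `before = set(os.listdir(download_dir))` just before triggering the download, then call `wait_for_download(download_dir, before=before)` in place of the sleep.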

Step 2: Reading the Downloaded PDF (Scenario 3)

Now that you have the PDF file in your pdf_downloads folder, you can use a library like PyPDF2 to read its text content.

    import os

    import PyPDF2

    # Folder the browser saved the PDF into
    download_dir = "pdf_downloads"

    try:
        # Get a list of all files in the directory and keep only the PDFs
        files = os.listdir(download_dir)
        pdf_files = [f for f in files if f.endswith('.pdf')]

        if not pdf_files:
            print("No PDF files found in the download directory.")
        else:
            # Get the most recently modified PDF file
            latest_pdf = max(pdf_files, key=lambda f: os.path.getmtime(os.path.join(download_dir, f)))
            pdf_path = os.path.join(download_dir, latest_pdf)
            print(f"Reading PDF: {pdf_path}")

            with open(pdf_path, 'rb') as file:
                reader = PyPDF2.PdfReader(file)

                # Get the number of pages
                num_pages = len(reader.pages)
                print(f"The PDF has {num_pages} pages.")

                # Extract text from all pages
                text = ""
                for page in reader.pages:
                    # Pages without a text layer (e.g. scans) may yield nothing
                    text += (page.extract_text() or "") + "\n"

            print("\n--- Extracted Text ---")
            print(text)
    except FileNotFoundError:
        print(f"Error: The directory '{download_dir}' was not found.")
    except Exception as e:
        print(f"An error occurred: {e}")
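
A download can "succeed" and still not be a PDF, for example when the server returns an HTML error or login page saved under a `.pdf` name. A cheap sanity check before handing the file to a PDF library is to look at its first bytes. This is a minimal sketch; `looks_like_pdf` is a hypothetical helper, and it assumes the `%PDF-` signature sits at the very start of the file, which is the usual case.

```python
def looks_like_pdf(path):
    """Return True if the file at `path` starts with the PDF signature."""
    with open(path, "rb") as f:
        # Well-formed PDF files begin with the bytes "%PDF-"
        return f.read(5) == b"%PDF-"
```

You could call `looks_like_pdf(pdf_path)` right before opening the file and skip or re-download anything that fails the check.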

Scenario 2: Handling PDFs that Open in the Browser Tab (Inline)

Sometimes, websites are configured to open PDFs directly in the browser tab instead of downloading them. Selenium can switch to this new "tab" and get its URL.
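
If clicking a link opens the PDF in a new tab, the driver keeps pointing at the original tab until you switch. Here is a small sketch of that switch, built on Selenium's `window_handles`, `switch_to.window()` and `current_url`. The helper name `switch_to_new_tab` is hypothetical, and it assumes the new tab has already opened by the time it is called.

```python
def switch_to_new_tab(driver, handles_before):
    """Switch `driver` to the first tab not in `handles_before` and return its URL.

    `handles_before` is the collection of window handles captured before
    the click that opened the new tab.
    """
    new_handles = [h for h in driver.window_handles if h not in handles_before]
    if not new_handles:
        raise RuntimeError("No new tab was opened")
    driver.switch_to.window(new_handles[0])
    return driver.current_url
```

Typical usage would be to capture `handles_before = set(driver.window_handles)`, click the link, and then call `pdf_url = switch_to_new_tab(driver, handles_before)`. On slow pages you may need to wait for the tab to appear first.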

The Challenge

You cannot use driver.page_source to get the PDF's content. In Chrome it typically returns the markup of the built-in PDF viewer, not the document itself. Instead, get the direct URL of the PDF file and download it separately using a library like requests.

Solution: Get the PDF URL and Download with requests

    import os

    import requests
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service as ChromeService
    from webdriver_manager.chrome import ChromeDriverManager

    # --- 1. Initialize WebDriver (no special download options needed) ---
    service = ChromeService(ChromeDriverManager().install())
    driver = webdriver.Chrome(service=service)

    # --- 2. Automate to get the PDF URL ---
    try:
        # Navigate to a page that opens a PDF inline
        driver.get("https://www.adobe.com/support/products/enterprise/knowledgecenter/media/c4611_sample_explain.pdf")

        # The PDF is the only content on the page, so its URL is the current page's URL
        pdf_url = driver.current_url
        print(f"Found PDF URL: {pdf_url}")

        # --- 3. Download the PDF using the 'requests' library ---
        # Create a directory to save the PDFs
        download_dir = "pdf_downloads_inline"
        os.makedirs(download_dir, exist_ok=True)

        # Get the filename from the URL
        filename = os.path.basename(pdf_url)
        save_path = os.path.join(download_dir, filename)

        # Send a GET request to the URL
        response = requests.get(pdf_url, stream=True)
        response.raise_for_status()  # Raise an exception for bad status codes

        # Write the content to a file
        with open(save_path, 'wb') as f:
            for chunk in response.iter_content(chunk_size=8192):
                f.write(chunk)

        print(f"PDF downloaded successfully to: {save_path}")
    finally:
        # Close the browser
        driver.quit()
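
One thing to watch with this approach: requests does not share the browser's session. If the PDF sits behind a login, a plain `requests.get()` may return a login page instead of the file. A common workaround is to copy the browser's cookies across. The sketch below converts the list of dicts returned by Selenium's `driver.get_cookies()` into the simple name/value mapping that requests accepts. The helper name `cookies_for_requests` is hypothetical, and the conversion drops domain and path scoping, so it is only a starting point.

```python
def cookies_for_requests(selenium_cookies):
    """Convert driver.get_cookies() output into a {name: value} dict.

    Each Selenium cookie is a dict with at least "name" and "value" keys.
    Domain, path and expiry information is intentionally discarded here.
    """
    return {cookie["name"]: cookie["value"] for cookie in selenium_cookies}
```

Call it while the browser is still open, for example `requests.get(pdf_url, cookies=cookies_for_requests(driver.get_cookies()), stream=True)`, since the cookies are no longer available after `driver.quit()`.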

Summary and Best Practices

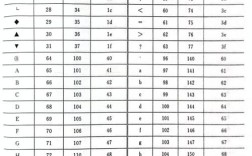

| Method | Pros | Cons | Best For |
|---|---|---|---|
| Scenario 1: Browser Download Settings | Most reliable. Fully automated, no extra libraries needed. | Requires specific browser configuration. | When you have control over the browser and want a completely automated download process. |
| Scenario 2: Get URL + requests | Very flexible. Works for any file type, not just PDFs. | Requires an extra library (requests). Slightly more code. | When PDFs open inline or in new tabs, or when you can't modify browser settings. |
| Scenario 3: PyPDF2 / pdfplumber | Essential for data extraction. Allows you to search, read, and analyze PDF content. | Cannot download or handle complex PDFs (scanned images, forms). | The mandatory second step after you have successfully downloaded a PDF file. |

Pro Tip: For more advanced PDF text extraction, especially with complex layouts, consider using the pdfplumber library. It's built on top of pdfminer.six and is often better at preserving text structure.

    pip install pdfplumber

A minimal example that prints the text of the first page:

    import pdfplumber

    with pdfplumber.open("my_downloaded_file.pdf") as pdf:
        first_page = pdf.pages[0]
        print(first_page.extract_text())
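
Once you have the text of each page, from either library, searching it is plain Python. As a small illustration, the hypothetical helper below takes a list of per-page strings (for example, one `extract_text()` result per page) and reports which pages mention a keyword. It treats missing text as empty, on the assumption that some pages may have no text layer.

```python
def pages_containing(page_texts, keyword):
    """Return 1-based page numbers whose text contains `keyword`, ignoring case."""
    needle = keyword.lower()
    return [
        number
        for number, text in enumerate(page_texts, start=1)
        if needle in (text or "").lower()  # Treat None (no text) as empty
    ]
```

For instance, you could build `page_texts` with a list comprehension over `pdf.pages` and then call `pages_containing(page_texts, "invoice")` to find the pages worth a closer look.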