Of course. Here is a comprehensive guide to using urllib2 in Python 2.7.

Important Note: Python 2.7 is End-of-Life

Before we dive in, it's crucial to understand that Python 2.7 reached its official end-of-life on January 1, 2020. It no longer receives security updates, bug fixes, or other improvements. Using it in production is strongly discouraged.

However, understanding urllib2 is still valuable for:

- Maintaining legacy Python 2.7 applications.

- Reading and understanding older codebases.

- Appreciating the history of Python's standard library.

For any new project, you should be using Python 3. There, urllib and urllib2 were merged and reorganized into the urllib package: urllib.request, urllib.parse, and urllib.error.

urllib2 Overview

urllib2 is a Python 2.7 standard library module for fetching URLs (opening and reading them). It's a powerful tool that can handle:

- HTTP and HTTPS URLs.

- Different HTTP methods (GET, POST).

- Custom headers (e.g., User-Agent, Authorization).

- Cookies.

- Authentication (Basic, Digest).

- Following redirects.

The primary objects you'll work with are:

- urllib2.urlopen(): The main function for opening a URL.

- urllib2.Request: A class for building a request with custom headers, data, etc.

- urllib2.build_opener() and urllib2.install_opener(): Build an opener with extra handlers (for cookies, proxies, authentication, etc.) and install it as the default opener that urlopen() uses.
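Basic authentication is listed among urllib2's capabilities above, but the sections that follow do not cover it. The following is a minimal sketch of how it plugs into the opener mechanism, using HTTPPasswordMgrWithDefaultRealm and HTTPBasicAuthHandler:

- The URL and the user/passwd credentials are placeholders.
- The actual request is left commented out so the snippet runs offline.
- The try/except import fallback is our addition so the same code also runs under Python 3.

```python
from __future__ import print_function

try:  # Python 2.7
    import urllib2 as urlrequest
except ImportError:  # Python 3: the same classes live in urllib.request
    import urllib.request as urlrequest

# Placeholder URL and credentials, for illustration only
top_level_url = "http://httpbin.org/basic-auth/"

# realm=None means: use these credentials for any realm under top_level_url
password_mgr = urlrequest.HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None, top_level_url, "user", "passwd")

# The handler answers a 401 challenge by retrying with an Authorization header
auth_handler = urlrequest.HTTPBasicAuthHandler(password_mgr)
opener = urlrequest.build_opener(auth_handler)

# Use the opener directly, or make it the default for urlopen():
# urlrequest.install_opener(opener)
# response = opener.open(top_level_url + "user/passwd")

# The manager matches any URL below top_level_url, whatever realm the server names
print(password_mgr.find_user_password("Some Realm", top_level_url + "user/passwd"))
```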

Basic GET Request

The simplest use case is fetching the content of a webpage.

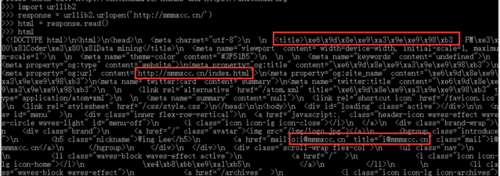

```python
import urllib2

# The URL you want to fetch
url = "http://www.example.com"
response = None

try:
    # Open the URL; the response object behaves like a file object
    response = urllib2.urlopen(url)
    html = response.read()

    # Print the first 500 characters
    print(html[:500])
except urllib2.URLError as e:
    print("Failed to open URL: " + str(e))
except Exception as e:
    print("An error occurred: " + str(e))
finally:
    # It's good practice to close the response
    if response is not None:
        response.close()
```

Explanation:

- urllib2.urlopen(url) sends a simple GET request to the URL.

- It returns a file-like object (response).

- response.read() reads the entire content of the response as a string.

- response.getcode() returns the HTTP status code (e.g., 200 for OK). Error statuses such as 404 raise urllib2.HTTPError instead of returning a response; see Handling HTTP Errors below.

- response.headers contains the response headers (response.info() returns the same object).

- Always close the response to free up resources. In Python 2 the object returned by urllib2.urlopen does not support the with statement, but you can wrap it in contextlib.closing().
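Here is a minimal offline sketch of the contextlib.closing() pattern mentioned above, which also reads the response headers:

- To avoid depending on a live server, it writes a temporary local file and opens it through a file: URL. urlopen() handles file: URLs as well as HTTP.
- The try/except import fallback is our addition so the snippet runs under Python 2.7 or Python 3.

```python
from __future__ import print_function

import contextlib
import os
import tempfile

try:  # Python 2.7
    import urllib2 as urlrequest
    from urllib import pathname2url
except ImportError:  # Python 3
    import urllib.request as urlrequest
    from urllib.request import pathname2url

# Write a small local file so the example needs no network access
fd, path = tempfile.mkstemp(suffix=".html")
with os.fdopen(fd, "wb") as f:
    f.write(b"<html><body>Hello, urllib2!</body></html>")

# urlopen() also accepts file: URLs; pathname2url() builds the path part
file_url = "file:" + pathname2url(os.path.abspath(path))

# closing() calls response.close() when the block exits, even on error,
# which gives Python 2 responses with-statement support
with contextlib.closing(urlrequest.urlopen(file_url)) as response:
    body = response.read()
    headers = response.info()

print(body)
print(headers.get("Content-type"))
os.remove(path)
```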

Adding Custom Headers (e.g., User-Agent)

Some websites block, or serve different content to, the default Python-urllib/2.7 User-Agent. You can customize your request headers using the Request object.

```python
import urllib2

url = "http://httpbin.org/user-agent"  # A site that echoes back your User-Agent

# Create a Request object instead of calling urlopen directly
request = urllib2.Request(url)

# Add a custom User-Agent header
request.add_header('User-Agent', 'My-Cool-App/1.0 (Windows NT 10.0; Win64; x64)')

response = None
try:
    response = urllib2.urlopen(request)
    html = response.read()
    print(html)
except urllib2.URLError as e:
    print("Error: " + str(e))
finally:
    if response is not None:
        response.close()
```

Explanation:

- Instead of calling urllib2.urlopen(url) directly, we first create a Request object.

- request.add_header() lets you add any HTTP header you need.

- You can also set headers when creating the request: urllib2.Request(url, headers={'User-Agent': '...'}).
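Here is a small offline sketch of both ways to set headers. It also shows one detail the explanation leaves out: Request stores each header name via str.capitalize(), so later lookups with get_header() must use that form ("User-agent", not "User-Agent").

- Nothing is sent over the network.
- The Accept header and the try/except import fallback (so the snippet runs on Python 2.7 or 3) are our additions.

```python
from __future__ import print_function

try:  # Python 2.7
    import urllib2 as urlrequest
except ImportError:  # Python 3
    import urllib.request as urlrequest

url = "http://httpbin.org/user-agent"  # Nothing is sent; we only build requests

# Option 1: pass headers to the constructor
req_a = urlrequest.Request(url, headers={"User-Agent": "My-Cool-App/1.0"})

# Option 2: add them one at a time
req_b = urlrequest.Request(url)
req_b.add_header("User-Agent", "My-Cool-App/1.0")
req_b.add_header("Accept", "application/json")

# Header names are stored capitalized ("User-agent"), so look them up that way
print(req_a.get_header("User-agent"))
print(req_a.get_header("User-Agent"))  # None: no header stored under this spelling
print(sorted(req_b.header_items()))
```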

Making a POST Request

To send data in the body of a request (e.g., submitting a form), pass the data argument to urllib2.Request. For a form submission, the data must be a URL-encoded string (application/x-www-form-urlencoded).

```python
import urllib   # Needed for urllib.urlencode
import urllib2

url = "http://httpbin.org/post"  # A site that echoes back POST data

# The data to send (a dictionary)
post_data = {
    'username': 'test_user',
    'password': 's3cr3t_p@ss'
}

# URL-encode the dictionary into a string like
# 'username=test_user&password=s3cr3t_p%40ss' (dict order is not guaranteed)
encoded_data = urllib.urlencode(post_data)

# Passing the data argument makes urllib2 send a POST request
request = urllib2.Request(url, data=encoded_data)

response = None
try:
    response = urllib2.urlopen(request)
    html = response.read()
    print(html)
except urllib2.URLError as e:
    print("Error: " + str(e))
finally:
    if response is not None:
        response.close()
```

Explanation:

- urllib.urlencode() converts a Python dictionary into a URL-encoded string suitable for a POST body.

- When you pass the data argument to Request, urllib2 changes the request method to POST. Unless you set a Content-Type header yourself, it also sends Content-Type: application/x-www-form-urlencoded.
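You can check offline that passing data switches the method to POST by calling Request.get_method(). The following sketch encodes the same fields as the example above, with three changes:

- It uses a list of pairs rather than a dict, so the encoded order is predictable on Python 2.
- It encodes the body to bytes, because Python 3's Request expects bytes. For this ASCII string that call returns an equal string on Python 2.
- It adds a try/except import fallback (our addition) so it runs under either Python version.

```python
from __future__ import print_function

try:  # Python 2.7
    import urllib2 as urlrequest
    from urllib import urlencode
except ImportError:  # Python 3
    import urllib.request as urlrequest
    from urllib.parse import urlencode

url = "http://httpbin.org/post"  # Nothing is sent; we only inspect the Request

# A list of pairs keeps the field order stable (dicts are unordered in Python 2)
encoded = urlencode([("username", "test_user"), ("password", "s3cr3t_p@ss")])
print(encoded)  # username=test_user&password=s3cr3t_p%40ss

get_req = urlrequest.Request(url)
post_req = urlrequest.Request(url, data=encoded.encode("ascii"))

print(get_req.get_method())   # GET
print(post_req.get_method())  # POST
```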

Handling HTTP Errors (404, 500, etc.)

By default, urllib2.urlopen() will raise an exception for HTTP error status codes (4xx, 5xx). You can catch these exceptions to handle them gracefully.

```python
from __future__ import print_function  # Print multiple arguments correctly in Python 2

import urllib2

url = "http://httpbin.org/status/404"  # This URL returns a 404 Not Found error

try:
    response = urllib2.urlopen(url)
    print("Success! Status code:", response.getcode())
    print(response.read())
except urllib2.HTTPError as e:
    # HTTPError is a subclass of URLError, so it must be caught first.
    # This block catches HTTP errors like 404, 500, etc.
    print("HTTP Error occurred!")
    print("Error code:", e.code)
    print("Error reason:", e.reason)
    # You can even read the error page content
    print("Error page:", e.read())
except urllib2.URLError as e:
    # This catches other URL-related errors (e.g., network down, invalid domain)
    print("URL Error occurred!")
    print("Reason:", e.reason)
```
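Because urllib2.HTTPError is a subclass of urllib2.URLError, an except URLError clause placed first would also swallow HTTP errors. That is why HTTPError has to be caught first.

The sketch below applies the same ordering inside a hypothetical describe_failure() helper:

- It constructs both exception types by hand so it runs offline.
- The import fallback is our addition, so it runs under Python 2.7 or 3.

```python
from __future__ import print_function

try:  # Python 2.7
    from urllib2 import HTTPError, URLError
except ImportError:  # Python 3
    from urllib.error import HTTPError, URLError


def describe_failure(exc):
    """Hypothetical helper: summarize a urllib2 failure in one line.

    HTTPError is checked first because it is a subclass of URLError.
    """
    if isinstance(exc, HTTPError):
        return "HTTP %d: %s" % (exc.code, exc.reason)
    if isinstance(exc, URLError):
        return "network problem: %s" % (exc.reason,)
    return "unexpected: %r" % (exc,)


# Build the exceptions by hand (url, code, msg, hdrs, fp) so no request is made
not_found = HTTPError("http://httpbin.org/status/404", 404, "NOT FOUND", {}, None)
no_dns = URLError("Name or service not known")

print(issubclass(HTTPError, URLError))  # True
print(describe_failure(not_found))      # HTTP 404: NOT FOUND
print(describe_failure(no_dns))         # network problem: Name or service not known
```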

Following Redirects

urllib2 automatically follows HTTP redirects (status codes 301, 302, etc.) by default. You can disable this behavior.

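```python
from __future__ import print_function  # Print multiple arguments correctly in Python 2

import urllib2

url = "http://httpbin.org/redirect/1"  # Redirects once to /get


class NoRedirectHandler(urllib2.HTTPRedirectHandler):
    """Decline to follow redirects.

    Returning None from redirect_request() makes urllib2 fall through to the
    default error handler, which raises HTTPError for the 3xx response.
    """

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


# Passing the stock HTTPRedirectHandler would change nothing (it is already a
# default handler); build_opener() uses this subclass in its place instead
opener = urllib2.build_opener(NoRedirectHandler)

try:
    # This raises HTTPError because the redirect is not followed
    response = opener.open(url)
    print("Should not be here")
except urllib2.HTTPError as e:
    print("Caught redirect because we disabled it.")
    print("Status code:", e.code)
    print("Redirect URL was:", e.headers.get('Location'))
```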
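Subclassing works where passing the stock HTTPRedirectHandler class does not. The reason is that build_opener() drops any default handler that one of its arguments subclasses (or is an instance of).

The offline sketch below compares the redirect handlers registered in a default opener with those in an opener built from a NoRedirectHandler subclass. The try/except import fallback is our addition.

```python
from __future__ import print_function

try:  # Python 2.7
    import urllib2 as urlrequest
except ImportError:  # Python 3
    import urllib.request as urlrequest


class NoRedirectHandler(urlrequest.HTTPRedirectHandler):
    """Decline every redirect so the 3xx response surfaces as HTTPError."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def redirect_handler_types(opener):
    # OpenerDirector keeps its registered handler instances in .handlers
    return [type(h).__name__ for h in opener.handlers
            if isinstance(h, urlrequest.HTTPRedirectHandler)]


default_opener = urlrequest.build_opener()
custom_opener = urlrequest.build_opener(NoRedirectHandler)

print(redirect_handler_types(default_opener))  # ['HTTPRedirectHandler']
print(redirect_handler_types(custom_opener))   # ['NoRedirectHandler']
```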

Handling Cookies

For websites that require a login, you need to handle cookies. The best way is to use an HTTPCookieProcessor.

```python
import cookielib  # Note: 'cookielib' is the Python 2 module name
import urllib     # Needed for urllib.urlencode
import urllib2

# Create a cookie jar to store cookies
cookie_jar = cookielib.CookieJar()

# Build an opener that will handle cookies for us
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cookie_jar))

# Install this opener as the default opener for all future calls.
# Now, any request made with urlopen will automatically handle cookies.
urllib2.install_opener(opener)

# Example: Log in to a site (this is a hypothetical example)
login_url = "http://example.com/login"
login_data = urllib.urlencode({'username': 'user', 'password': 'pass'})

# First, make a POST request to log in. The cookies will be stored.
response = urllib2.urlopen(login_url, login_data)
response.close()

print("Logged in. Cookie jar now contains:")
for cookie in cookie_jar:
    print("%s = %s" % (cookie.name, cookie.value))

# Now, make a request to a protected page. The cookies are sent automatically.
protected_page_url = "http://example.com/dashboard"
response = urllib2.urlopen(protected_page_url)
print("\nContent of protected page:")
print(response.read()[:200])
response.close()
```
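The login flow above depends on a real site. Here is a self-contained sketch that exercises the same HTTPCookieProcessor and CookieJar machinery against a throwaway local server:

- The FakeSite handler, its /login and /dashboard paths, and the session cookie are invented for illustration.
- It calls opener.open() directly rather than install_opener().
- ProxyHandler({}) stops any proxy environment variables from intercepting the 127.0.0.1 requests.
- The try/except import fallback is our addition so the snippet runs under Python 2.7 or 3.

```python
from __future__ import print_function

import threading

try:  # Python 2.7
    import cookielib as cookiejar
    import urllib2 as urlrequest
    from BaseHTTPServer import BaseHTTPRequestHandler, HTTPServer
except ImportError:  # Python 3
    import http.cookiejar as cookiejar
    import urllib.request as urlrequest
    from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeSite(BaseHTTPRequestHandler):
    """Hypothetical site: /login sets a session cookie, other paths echo it."""

    def do_GET(self):
        self.send_response(200)
        if self.path == "/login":
            self.send_header("Set-Cookie", "session=abc123; Path=/")
            body = b"logged in"
        else:
            # Echo back the Cookie header the client sent, if any
            body = (self.headers.get("Cookie") or "no cookie").encode("ascii")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # Silence per-request logging


# Port 0 lets the OS pick a free port
server = HTTPServer(("127.0.0.1", 0), FakeSite)
thread = threading.Thread(target=server.serve_forever)
thread.daemon = True
thread.start()
base_url = "http://127.0.0.1:%d" % server.server_address[1]

jar = cookiejar.CookieJar()
# ProxyHandler({}) ignores proxy environment variables for this local test
opener = urlrequest.build_opener(urlrequest.ProxyHandler({}),
                                 urlrequest.HTTPCookieProcessor(jar))

try:
    opener.open(base_url + "/login").read()   # Server sets the cookie
    cookie_names = [c.name for c in jar]       # The jar now holds it
    dashboard = opener.open(base_url + "/dashboard").read().decode("ascii")
finally:
    server.shutdown()
    server.server_close()

print(cookie_names)
print(dashboard)
```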

The Modern Python 3 Alternative

For context, here is how you would perform the same tasks in Python 3. The library was split into submodules.

```python
# Python 3 Example
import http.cookiejar
import urllib.error
import urllib.parse
import urllib.request

# 1. Basic GET Request
try:
    with urllib.request.urlopen('http://www.example.com') as response:
        html = response.read()
        print(html.decode('utf-8')[:500])  # Decode bytes to str, then slice
except urllib.error.URLError as e:
    print("Error:", e.reason)

# 2. Custom Headers & POST Request
url = 'http://httpbin.org/post'
data = {'username': 'test_user', 'password': 's3cr3t_p@ss'}

# Encode data and create request in one go
req = urllib.request.Request(
    url,
    data=urllib.parse.urlencode(data).encode('utf-8'),
    headers={'User-Agent': 'My-Python-3-App/1.0'},
)

try:
    with urllib.request.urlopen(req) as response:
        print(response.read().decode('utf-8'))
except urllib.error.HTTPError as e:
    print("HTTP Error:", e.code, e.reason)

# 3. Cookies
cj = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj))
urllib.request.install_opener(opener)

# ... rest of the cookie logic is similar ...
```

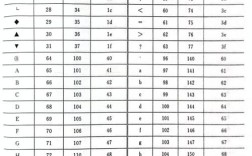

Summary: Key Differences (Python 2 urllib2 vs. Python 3 urllib)

| Feature | Python 2.7 (urllib2) | Python 3 (urllib.request) |
|---|---|---|
| Module Name | urllib2 | urllib.request |
| URL Encoding | urllib.urlencode() | urllib.parse.urlencode() |
| Error Handling | urllib2.URLError, urllib2.HTTPError | urllib.error.URLError, urllib.error.HTTPError |
| Cookies | cookielib.CookieJar() | http.cookiejar.CookieJar() |
| Import | import urllib2 | import urllib.request |
| Open URL | urllib2.urlopen(url) | urllib.request.urlopen(url) |
| Request Object | urllib2.Request(url) | urllib.request.Request(url) |
| Data Encoding | urllib.urlencode(dict) returns a string | urllib.parse.urlencode(dict).encode('utf-8') returns bytes |