Of course! Let's dive deep into SGDRegressor from Scikit-learn.

What is SGDRegressor?

SGDRegressor is a linear model that uses Stochastic Gradient Descent (SGD) as its optimization algorithm. In simple terms, it's a way to find the best-fit line (or hyperplane) for your data by making small, incremental updates to the model's parameters.

Key Concepts: How it Works

- Linear Model: Like `LinearRegression`, `SGDRegressor` tries to model the target variable `y` as a linear combination of the input features `X`:

  `y ≈ w0 + w1*x1 + w2*x2 + ... + wn*xn`

  Here, `w0` is the bias (or intercept) and `w1, w2, ..., wn` are the feature weights (or coefficients).

- Stochastic Gradient Descent (SGD): This is the core of the algorithm.

  - "Gradient Descent": It works by calculating the gradient (the direction of steepest ascent) of the loss function (a measure of how wrong the model is) with respect to the model's weights. It then takes a small step in the opposite direction of the gradient to reduce the error.

  - "Stochastic": The key difference from standard (batch) Gradient Descent is how the gradient is computed. Batch Gradient Descent uses the entire dataset for each update. SGD instead estimates the gradient from a single randomly selected sample (or, in some variants, a small "mini-batch" of samples). `SGDRegressor` updates the weights after every individual sample and, by default, shuffles the training data after each epoch.
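To make the two ideas concrete, here is a minimal from-scratch sketch of plain per-sample SGD for the squared loss `0.5 * (y_hat - y)**2`. It is illustrative only. The function name `sgd_linear_regression`, the fixed step size `eta`, and the synthetic data are all assumptions. Scikit-learn's actual implementation adds learning-rate schedules, regularization, and stopping rules on top of this basic loop.

```python
import numpy as np


def sgd_linear_regression(X, y, eta=0.01, epochs=50, seed=0):
    """Hypothetical helper: plain per-sample SGD for the loss 0.5 * (y_hat - y)**2."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)  # feature weights w1..wn
    w0 = 0.0                  # bias / intercept
    for _ in range(epochs):
        for i in rng.permutation(n_samples):  # visit samples in random order
            y_hat = w0 + X[i] @ w             # linear model prediction
            error = y_hat - y[i]              # d(loss)/d(y_hat)
            w -= eta * error * X[i]           # step opposite to the gradient w.r.t. w
            w0 -= eta * error                 # gradient w.r.t. w0 is just `error`
    return w0, w


# Illustrative synthetic data: y = 4 + 2.5*x1 - 1.0*x2 + small noise
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = 4 + X @ np.array([2.5, -1.0]) + rng.normal(0, 0.1, size=200)

w0, w = sgd_linear_regression(X, y)
print("bias:", w0, "weights:", w)
```

Each inner-loop step uses exactly one sample, which is what makes the method "stochastic". The features here are already on a unit scale, so a single step size works for all weights.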

Why is SGD Useful?

- Speed and scale: For very large datasets (millions of samples), solving the full least-squares problem or computing the gradient over the entire dataset at every step becomes expensive. The data may not even fit in memory. Each SGD update touches only one sample, so updates are cheap, and with `partial_fit()` training can proceed one chunk of data at a time.

- Online Learning: SGD can be used for "online" learning, where the model is updated as new data arrives. You can simply call the `partial_fit()` method with the new data points.
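Here is a minimal sketch of online learning with `partial_fit()`. Each loop iteration stands in for a new batch of data arriving, and the model makes one pass over that batch only. The simulated stream, the "true" weights, and the constant learning rate are illustrative assumptions. The features are generated on a unit scale, so no scaler is used here. With real data, you would need to scale every batch consistently.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor

rng = np.random.default_rng(0)
true_w = np.array([1.5, -2.0])  # illustrative "true" weights (assumption)
true_b = 0.5                    # illustrative "true" intercept (assumption)

online_model = SGDRegressor(learning_rate="constant", eta0=0.01, random_state=0)

# Simulate a data stream: each iteration is a new batch of 50 samples arriving.
for _ in range(200):
    X_batch = rng.normal(size=(50, 2))  # features already on a unit scale
    y_batch = X_batch @ true_w + true_b + rng.normal(0, 0.1, size=50)
    online_model.partial_fit(X_batch, y_batch)  # one pass over this batch only

print("Learned weights:  ", online_model.coef_)
print("Learned intercept:", online_model.intercept_)
```

`partial_fit()` ignores `max_iter` and `tol`, so you decide how many passes each batch gets.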

Key Parameters

Understanding the parameters is crucial for getting good results with SGDRegressor.

| Parameter | Default | Description |
|---|---|---|
| `loss` | `'squared_error'` | The function used to measure the model's error. Common choices: `'squared_error'` (ordinary least squares), `'huber'` (less sensitive to outliers), `'epsilon_insensitive'` (the loss used in linear Support Vector Regression). |
| `penalty` | `'l2'` | The type of regularization applied to prevent overfitting. Options: `'l2'`, `'l1'`, `'elasticnet'`, `None`. This is a very important parameter. |
| `alpha` | `0.0001` | The constant that multiplies the regularization term. A higher value means stronger regularization. |
| `l1_ratio` | `0.15` | The mixing parameter for the `'elasticnet'` penalty. `l1_ratio=0` is pure L2, `l1_ratio=1` is pure L1. |
| `max_iter` | `1000` | The maximum number of passes over the training data (epochs). Only used by `fit`, not `partial_fit`. |
| `tol` | `1e-3` | The stopping criterion. If the training loss doesn't improve by at least `tol` for `n_iter_no_change` (default `5`) consecutive epochs, training stops. |
| `learning_rate` | `'invscaling'` | The learning rate schedule. Options: `'constant'`, `'optimal'`, `'invscaling'`, `'adaptive'`. |
| `eta0` | `0.01` | The initial learning rate for the `'constant'`, `'invscaling'`, and `'adaptive'` schedules. |
| `random_state` | `None` | The seed used when shuffling the data. Set it for reproducible results. |
| `early_stopping` | `False` | Whether to stop training when the validation score stops improving. When `True`, a fraction of the training data (`validation_fraction`, default `0.1`) is held out for validation. |
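The `learning_rate` options differ in how the step size changes over training. The sketch below reproduces only the two simplest schedules as the scikit-learn documentation describes them:

- `'constant'` keeps `eta0`.
- `'invscaling'` uses `eta0 / t**power_t`, where `power_t` defaults to `0.25` for `SGDRegressor`.

The helper names are hypothetical, and `t` is treated here as a plain update counter. The `'optimal'` and `'adaptive'` schedules are not shown.

```python
def constant_eta(t, eta0=0.01):
    """Hypothetical helper, 'constant' schedule: the step size never changes (t unused)."""
    return eta0


def invscaling_eta(t, eta0=0.01, power_t=0.25):
    """Hypothetical helper, 'invscaling' schedule: step size decays as eta0 / t**power_t."""
    return eta0 / t ** power_t


for t in [1, 16, 81, 10_000]:
    print(f"t={t:>6}: constant={constant_eta(t):.5f}  invscaling={invscaling_eta(t):.5f}")
```

With the defaults, the `'invscaling'` step size halves by update 16 and falls to a tenth of `eta0` by update 10,000. A small `eta0` can therefore make late updates very small.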

When to Use SGDRegressor vs. LinearRegression

| Feature | `LinearRegression` | `SGDRegressor` |
|---|---|---|
| Best For | Small to medium-sized datasets that fit in memory. | Very large datasets that are too big to process all at once, or data that arrives as a stream. |
| Algorithm | Analytical solution (Normal Equation) or SVD. | Iterative optimization (Stochastic Gradient Descent). |
| Speed | Very fast for small datasets. | Can be much faster for large datasets. |
| Memory Usage | High, as it needs the whole dataset in memory. | `fit` also needs the whole dataset in memory, but `partial_fit` can train on one chunk at a time. |
| Regularization | None. Use `Ridge`, `Lasso`, or `ElasticNet` instead. | Built-in L1, L2, and ElasticNet regularization. |
| Flexibility | Less flexible. | More flexible (loss functions, learning rates). |
| Convergence | Finds the exact global minimum (for squared loss). | Finds an approximate minimum. The result can vary slightly between runs unless `random_state` is fixed. |
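To see the "exact vs. approximate" row in practice, the sketch below fits both estimators on the same standardized synthetic data and compares their coefficients. The data-generating weights are an illustrative assumption. With standardized features and default settings, the SGD solution should land close to the least-squares solution, though typically not exactly on it. Its small default L2 penalty (`alpha=0.0001`) also pulls the weights very slightly toward zero.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, SGDRegressor
from sklearn.preprocessing import StandardScaler

# Illustrative synthetic data (assumption): 3 features, small noise
rng = np.random.default_rng(0)
X = rng.normal(loc=10, scale=3, size=(1000, 3))
y = 1.0 + X @ np.array([2.0, -3.0, 0.5]) + rng.normal(0, 0.1, size=1000)

X_scaled = StandardScaler().fit_transform(X)  # identical inputs for both models

ols = LinearRegression().fit(X_scaled, y)            # exact least-squares solution
sgd = SGDRegressor(random_state=0).fit(X_scaled, y)  # iterative approximation

print("LinearRegression coef:", ols.coef_, "intercept:", ols.intercept_)
print("SGDRegressor coef:    ", sgd.coef_, "intercept:", sgd.intercept_)
print("Max coef difference:  ", np.max(np.abs(ols.coef_ - sgd.coef_)))
```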

Code Example

Let's walk through a complete example, including data preparation, training, and evaluation.

Import Libraries and Generate Data

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import SGDRegressor
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import make_pipeline
from sklearn.metrics import mean_squared_error, r2_score

# Generate some sample data: y = 4 + 2.5 * x + Gaussian noise
np.random.seed(42)
X = 2 * np.random.rand(100, 1)
y = 4 + 2.5 * X + np.random.randn(100, 1)

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

print("Shape of X_train:", X_train.shape)
print("Shape of y_train:", y_train.shape)
```

Create and Train the Model

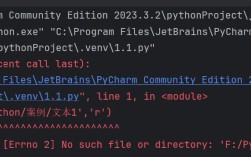

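This is the most critical part. `SGDRegressor` is highly sensitive to feature scaling. Every weight is updated with the same learning rate, so a feature with a large numeric range produces much larger gradient steps than a feature with a small range. You should almost always scale your data first, and the easiest way is to use a `Pipeline`.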

```python
# Create a pipeline that first scales the data, then applies the regressor.
# This is the recommended practice.
# StandardScaler standardizes features by removing the mean and scaling to unit variance.
model = make_pipeline(
    StandardScaler(),
    SGDRegressor(
        max_iter=1000,    # Maximum number of epochs
        tol=1e-3,         # Tolerance for stopping
        random_state=42,  # For reproducibility
        # Let's use L2 regularization (Ridge-like)
        penalty='l2',
        alpha=0.1,
        # Let's use a constant learning rate
        learning_rate='constant',
        eta0=0.01,
    ),
)

# Train the model
model.fit(X_train, y_train.ravel())  # .ravel() converts y from (n, 1) to (n,)
print("Model training complete.")
```
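To see why scaling matters, the sketch below fits the same `SGDRegressor` with and without a `StandardScaler`. It uses synthetic data in which one feature ranges up to 1000, which is an illustrative assumption. Without scaling, that feature produces huge gradient steps and the fit typically diverges. Depending on how far it blows up, scikit-learn may raise a `ValueError` about floating-point overflow, so the hypothetical helper `fit_and_score` catches that case and returns `None`. New variable names are used so the tutorial's `X`, `y`, and `model` are not overwritten.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.metrics import r2_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500
# Two features on very different scales (assumption): x1 in [0, 1), x2 in [0, 1000)
X_wide = np.column_stack([rng.random(n), 1000 * rng.random(n)])
y_wide = 3 * X_wide[:, 0] + 0.002 * X_wide[:, 1] + rng.normal(0, 0.1, n)


def fit_and_score(estimator):
    """Hypothetical helper: fit, then return training R^2, or None if SGD blew up."""
    try:
        estimator.fit(X_wide, y_wide)
        return r2_score(y_wide, estimator.predict(X_wide))
    except ValueError:
        # SGD can raise ValueError on floating-point overflow, and r2_score
        # raises ValueError if the predictions contain inf or NaN.
        return None


unscaled_r2 = fit_and_score(SGDRegressor(random_state=0))
scaled_r2 = fit_and_score(make_pipeline(StandardScaler(), SGDRegressor(random_state=0)))
print("Unscaled R^2:", unscaled_r2)
print("Scaled R^2:  ", scaled_r2)
```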

Make Predictions and Evaluate

```python
# Make predictions on the test set
y_pred = model.predict(X_test)

# Evaluate the model
mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)
print("\n--- Model Evaluation ---")
print(f"Mean Squared Error (MSE): {mse:.4f}")
print(f"R-squared (R²): {r2:.4f}")

# The coefficients and intercept can be accessed from the model's steps.
# The first step is the scaler, the second is the regressor.
# Note: these parameters apply to the *standardized* feature, not the original X,
# so they will not equal 4 and 2.5 directly (and the L2 penalty shrinks the coefficient).
intercept = model.named_steps['sgdregressor'].intercept_
coefficients = model.named_steps['sgdregressor'].coef_
print("\n--- Model Parameters ---")
print(f"Intercept (w0): {intercept[0]:.4f}")
print(f"Coefficient (w1): {coefficients[0]:.4f}")
```
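Because the pipeline standardizes `X` first, the learned weights apply to `z = (x - mean) / scale`. Substituting that into `y ≈ w0 + w1*z` gives the equivalent parameters in the original units: `w1 / scale` for the coefficient and `w0 - w1 * mean / scale` for the intercept.

The sketch below is a standalone variant that keeps the example self-contained. It uses the same data recipe, fits on all 100 points with default `SGDRegressor` settings, and uses new variable names. It reads `mean_` and `scale_` from the fitted `StandardScaler` and checks that the converted parameters reproduce the pipeline's predictions.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Same recipe as above (y = 4 + 2.5 * x + noise), under new variable names
rng = np.random.RandomState(42)
X_demo = 2 * rng.rand(100, 1)
y_demo = 4 + 2.5 * X_demo.ravel() + rng.randn(100)

demo_model = make_pipeline(StandardScaler(), SGDRegressor(random_state=42))
demo_model.fit(X_demo, y_demo)

scaler = demo_model.named_steps['standardscaler']
reg = demo_model.named_steps['sgdregressor']

# The regressor sees z = (x - mean_) / scale_, so
#   y_hat = w0 + sum_j wj * (xj - mean_j) / scale_j
#         = (w0 - sum_j wj * mean_j / scale_j) + sum_j (wj / scale_j) * xj
coef_orig = reg.coef_ / scaler.scale_
intercept_orig = reg.intercept_[0] - np.sum(reg.coef_ * scaler.mean_ / scaler.scale_)

print(f"Intercept in original units (w0): {intercept_orig:.4f}")
print(f"Coefficient in original units (w1): {coef_orig[0]:.4f}")
```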

Visualize the Results

It's always good to plot the data and the regression line to see how well the model fits.

```python
# Plot the original data
plt.scatter(X, y, color='blue', alpha=0.6, label='Data points')

# Plot the regression line
# We need to create a line to plot, so we use the min and max of X
x_line = np.linspace(X.min(), X.max(), 100).reshape(-1, 1)
y_line = model.predict(x_line)
plt.plot(x_line, y_line, color='red', linewidth=2, label='Regression Line')

plt.title('SGDRegressor Fit')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.grid(True)
plt.show()
```

Important Tips and Best Practices

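- Always Scale Your Data: This is the most important tip. Features on very different scales (e.g., age in years vs. income in dollars) cause the optimization to perform poorly, because every weight is updated with the same learning rate. Use `StandardScaler` or `MinMaxScaler` inside a `Pipeline`.

- Tune Hyperparameters: `SGDRegressor` has many knobs to turn.
  - `loss`: Try `'huber'` if you suspect your data has outliers.
  - `penalty` and `alpha`: These control overfitting. Experiment with `'l1'` (for sparsity, i.e., some coefficients become zero) and `'l2'` (Ridge-like).
  - `learning_rate`: `'invscaling'` is a good default, but if the model doesn't converge, try `'adaptive'` or `'constant'`.

- Use `GridSearchCV` or `RandomizedSearchCV`: To find the best combination of hyperparameters, use Scikit-learn's search tools. They make the process systematic.

- Monitor Convergence: `SGDRegressor` has no `loss_curve_` attribute; that attribute belongs to `MLPRegressor`. After `fit`, compare `n_iter_` with `max_iter`. If they are equal, training stopped at the iteration cap rather than through the `tol` criterion, and scikit-learn emits a `ConvergenceWarning`. To see the loss per epoch, train an unfitted copy one epoch at a time with `partial_fit` and record the loss yourself:

```python
from sklearn.base import clone

# SGDRegressor has no loss_curve_ attribute, so train an unfitted copy of the
# regressor one epoch at a time with partial_fit and record the training MSE.
X_train_scaled = model.named_steps['standardscaler'].transform(X_train)
epoch_model = clone(model.named_steps['sgdregressor'])
losses = []
for epoch in range(50):
    epoch_model.partial_fit(X_train_scaled, y_train.ravel())
    losses.append(mean_squared_error(y_train, epoch_model.predict(X_train_scaled)))
plt.plot(losses)
plt.title("Loss Curve")
plt.xlabel("Epoch")
plt.ylabel("Training MSE")
plt.show()
```

  If the loss curve is still decreasing significantly at the last epoch, you may need more epochs (increase `max_iter` when using `fit`). If it flattens out early, you might have found a good solution.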