Of course! The term "Python buildnetwork" is a bit generic, but it most commonly refers to using Python to create a neural network. This is a core task in machine learning and deep learning.

I'll guide you through the process, starting with the fundamental concepts and then providing practical code examples using the most popular Python libraries.

The Core Concepts: What is a Neural Network?

Think of a neural network as a computational model loosely inspired by the human brain. It's a series of interconnected "neurons" organized in layers:

- Input Layer: Receives the initial data (e.g., pixel values from an image, numerical features from a dataset).

- Hidden Layers: One or more layers in between that perform the complex computations. The network "learns" by adjusting the connections (weights) within these layers.

- Output Layer: Produces the final result (e.g., a classification like "cat" or "dog", a predicted price, a translated sentence).
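To make the flow from input to output concrete, here is a minimal NumPy sketch of data passing through one hidden layer and one output layer. The layer sizes (4 inputs, 3 hidden units, 2 outputs) and the random weights are assumptions chosen only to keep the example small; a real network would learn its weights during training.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

# Illustrative layer sizes (assumptions): 4 input features, 3 hidden units, 2 outputs.
n_inputs, n_hidden, n_outputs = 4, 3, 2

# Each layer is a weight matrix plus a bias vector.
W_hidden = rng.normal(size=(n_inputs, n_hidden))
b_hidden = np.zeros(n_hidden)
W_output = rng.normal(size=(n_hidden, n_outputs))
b_output = np.zeros(n_outputs)

def forward(x):
    """Pass a batch of inputs through the hidden layer, then the output layer."""
    hidden = np.maximum(0.0, x @ W_hidden + b_hidden)  # ReLU activation
    return hidden @ W_output + b_output                # raw output scores

batch = rng.normal(size=(5, n_inputs))  # 5 samples, 4 features each
outputs = forward(batch)
print(outputs.shape)  # one row of 2 output scores per sample
```

Each sample enters as a row of 4 numbers and leaves as a row of 2 scores; the hidden layer is simply the intermediate array in between.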

Key Components:

- Neurons (Nodes): Each neuron receives inputs, computes a weighted sum of them plus a bias, and passes the result through an activation function to produce its output.

- Weights: Parameters that are adjusted during training to determine the strength and sign of a connection between neurons.

- Bias: An extra learnable parameter added to the weighted sum, which lets a neuron shift its output independently of its inputs.

- Activation Function: A non-linear function applied to the output of a neuron, allowing the network to learn complex patterns. Common examples are ReLU, Sigmoid, and Tanh.
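The components above fit together in just a few lines. The following is a minimal sketch of a single neuron in plain Python; the input values, weights, and bias are made-up numbers for illustration, not learned parameters.

```python
import math

def relu(z):
    """ReLU: pass positive values through, clip negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]  # illustrative values, not learned
bias = 0.1

# Here z = 0.5*1 - 1.0*2 + 0.25*3 + 0.1 = -0.65 (a negative pre-activation).
print(neuron(inputs, weights, bias, relu))       # ReLU clips the negative value to 0.0
print(neuron(inputs, weights, bias, sigmoid))    # a value between 0 and 1
print(neuron(inputs, weights, bias, math.tanh))  # a value between -1 and 1
```

The same weighted sum gives three different outputs depending on the activation function, which is why the choice of activation matters.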

Essential Python Libraries

You rarely need to build a network from scratch (though it's a great learning exercise!). In practice, we use powerful, optimized libraries.

| Library | Description | Best For |
|---|---|---|
| TensorFlow | An end-to-end platform for machine learning, developed by Google. | Production systems, large-scale deployment, mobile/edge devices (via TensorFlow Lite). |
| Keras | A high-level, user-friendly API for building neural networks. It ships with TensorFlow as its official high-level API. | Rapid prototyping, beginners, and standard deep learning models. |
| PyTorch | A deep learning library built around dynamic computation graphs, developed by Facebook's AI Research lab (now Meta AI). | Flexibility, research, and tasks where the model's structure needs to change dynamically. |

Recommendation for Beginners: Start with TensorFlow and its Keras API. It's the most straightforward for getting started.

Practical Example 1: Building a Simple Network with Keras (TensorFlow)

Let's build a neural network for a classic classification problem: the MNIST dataset of handwritten digits. The goal is to take an image of a digit (0-9) and correctly classify it.

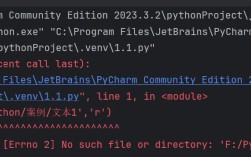

Step 1: Setup and Installation

First, make sure you have the necessary libraries installed.

pip install tensorflow numpy matplotlib
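If you want to confirm the installation worked before running the full example, a small check like the one below can help. This is only a sketch using the standard library; it tests whether each package can be found, not whether it is a compatible version.

```python
import importlib.util

required = ["tensorflow", "numpy", "matplotlib"]

# find_spec returns None when a top-level package cannot be located.
missing = [name for name in required if importlib.util.find_spec(name) is None]

if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```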

Step 2: The Code (A Complete, Runnable Example)

This code will:

- Load the MNIST dataset.

- Pre-process the data.

- Build a sequential neural network model.

- Compile the model (define the optimizer, loss function, and metrics).

- Train the model on the data.

- Evaluate its performance.

import tensorflow as tf

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

# 1. Load the MNIST dataset

# It's already split into training and testing sets

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

# Let's see what the data looks like

print("Shape of training images:", x_train.shape) # (60000, 28, 28)

print("Shape of training labels:", y_train.shape) # (60000,)

print("Shape of test images:", x_test.shape) # (10000, 28, 28)

print("Shape of test labels:", y_test.shape) # (10000,)

# Display a sample image

plt.imshow(x_train[0], cmap='gray')

plt.title(f"Label: {y_train[0]}")

plt.show()

# 2. Pre-process the data

# Normalize pixel values from the 0-255 range to the 0.0-1.0 range.

# This helps the network train more effectively.

x_train = x_train.astype('float32') / 255.0

x_test = x_test.astype('float32') / 255.0

# Flatten the 28x28 images into a 1D vector of 784 pixels (28*28)

# Our network expects a flat input, not a 2D image.

x_train = x_train.reshape(-1, 28 * 28)

x_test = x_test.reshape(-1, 28 * 28)

# One-hot encode the labels. This converts an integer (e.g., 5) into a vector.

# e.g., 5 -> [0, 0, 0, 0, 0, 1, 0, 0, 0, 0]

num_classes = 10

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

# 3. Build the Neural Network Model

# We use a Sequential model, which is a linear stack of layers.

model = keras.Sequential([

    # First hidden layer: Dense layer with 128 neurons.

    # 'relu' (Rectified Linear Unit) is a common and effective activation function.

    # 'input_shape' tells Keras the size of each flattened image (784 pixels).

    # Newer Keras versions prefer an explicit keras.layers.Input(shape=(784,)) as the first layer.

    keras.layers.Dense(128, activation='relu', input_shape=(784,)),

    # Optional: Add a Dropout layer to reduce overfitting.

    # It randomly sets a fraction (here 20%) of its inputs to 0 at each update during training.

    keras.layers.Dropout(0.2),

    # Second hidden layer: Another dense layer with 64 neurons.

    keras.layers.Dense(64, activation='relu'),

    # Output Layer: Dense layer with 10 neurons (one for each digit class).

    # 'softmax' activation converts the output into a probability distribution.

    # The neuron with the highest probability is the network's prediction.

    keras.layers.Dense(num_classes, activation='softmax')

])

# Display the model's architecture

model.summary()

# 4. Compile the Model

# This step configures the model for training.

model.compile(optimizer='adam',

              loss='categorical_crossentropy', # Good for multi-class classification

              metrics=['accuracy'])

# 5. Train the Model

# 'epochs' is the number of times the model will cycle through the entire training dataset.

# 'batch_size' is the number of samples processed before the model is updated.

print("\n--- Training the model ---")

history = model.fit(x_train, y_train,

                    batch_size=128,

                    epochs=10,

                    validation_split=0.1) # Use 10% of training data for validation

print("--- Training finished ---")

# 6. Evaluate the Model

print("\n--- Evaluating the model on test data ---")

loss, accuracy = model.evaluate(x_test, y_test)

print(f"Test Accuracy: {accuracy:.4f}")

print(f"Test Loss: {loss:.4f}")

# Make a prediction on a single image

sample_image = x_test[0]

# The model expects a batch of images, so we add a dimension

prediction = model.predict(np.expand_dims(sample_image, axis=0))

predicted_label = np.argmax(prediction)

true_label = np.argmax(y_test[0])

print(f"\nPrediction for the first test image: {predicted_label}")

print(f"True label for the first test image: {true_label}")
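To see what the one-hot encoding, the softmax output layer, and the categorical cross-entropy loss in the example above actually compute, here is a small NumPy sketch. The helper functions are hand-written stand-ins for illustration (they are not the Keras implementations), and the raw scores are made up.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer labels into one-hot rows, e.g. 5 -> [0,0,0,0,0,1,0,0,0,0]."""
    encoded = np.zeros((len(labels), num_classes), dtype="float32")
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean negative log-probability assigned to the true class."""
    return float(-np.mean(np.sum(y_true * np.log(y_prob + eps), axis=-1)))

labels = np.array([5, 0])
y_true = one_hot(labels, 10)

# Made-up raw scores for two samples: the first favors class 5, the second class 3.
logits = np.zeros((2, 10))
logits[0, 5] = 4.0
logits[1, 3] = 4.0

probs = softmax(logits)
predicted = probs.argmax(axis=-1)  # same idea as np.argmax(prediction) above
loss = categorical_crossentropy(y_true, probs)

print("Predicted classes:", predicted)
print("Loss:", loss)
```

The first sample is classified correctly and contributes little to the loss; the second is confidently wrong (true class 0, predicted class 3) and contributes most of it. Training adjusts the weights to push this number down.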

Practical Example 2: Building a Convolutional Neural Network (CNN)

For image data, a simple Dense network isn't the best choice. A Convolutional Neural Network (CNN) is specifically designed to recognize patterns in images. It uses Conv2D and MaxPooling2D layers.

import tensorflow as tf

from tensorflow import keras

# Load and preprocess data (same as before, but we DON'T flatten it)

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

# Normalize pixel values

x_train = x_train.astype('float32') / 255.0

x_test = x_test.astype('float32') / 255.0

# Reshape to include the channel dimension (for grayscale, it's 1)

# New shape: (num_samples, height, width, channels)

x_train = x_train.reshape(-1, 28, 28, 1)

x_test = x_test.reshape(-1, 28, 28, 1)

# One-hot encode labels

y_train = keras.utils.to_categorical(y_train, 10)

y_test = keras.utils.to_categorical(y_test, 10)

# Build the CNN model

model_cnn = keras.Sequential([

    # Input Layer

    keras.layers.Input(shape=(28, 28, 1)),

    # Convolutional Layer: 32 filters, 3x3 kernel size, 'relu' activation

    keras.layers.Conv2D(32, kernel_size=(3, 3), activation='relu'),

    # Pooling Layer: Reduces the spatial dimensions (downsamples)

    keras.layers.MaxPooling2D(pool_size=(2, 2)),

    # Flatten the results to feed into a dense layer

    keras.layers.Flatten(),

    # Dense Layer

    keras.layers.Dense(128, activation='relu'),

    # Output Layer

    keras.layers.Dense(10, activation='softmax')

])

# Compile and train

model_cnn.compile(optimizer='adam',

                  loss='categorical_crossentropy',

                  metrics=['accuracy'])

print("\n--- Training the CNN model ---")

model_cnn.fit(x_train, y_train,

              batch_size=128,

              epochs=5, # On MNIST, CNNs often reach high accuracy in fewer epochs (each epoch is slower, though)

              validation_split=0.1)

print("--- CNN Training finished ---")

# Evaluate

print("\n--- Evaluating the CNN model ---")

loss, accuracy = model_cnn.evaluate(x_test, y_test)

print(f"CNN Test Accuracy: {accuracy:.4f}")
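It can help to trace by hand how the image shape changes as it moves through the CNN above, and how many trainable parameters each layer has. The sketch below does that arithmetic in plain Python, assuming the Keras defaults used in the example (stride 1 and no padding for Conv2D, stride equal to the pool size for MaxPooling2D). The helper functions are written for this illustration and are not part of Keras.

```python
def conv2d_output(height, width, kernel, filters):
    """Output shape of a stride-1 convolution with no padding ('valid')."""
    return height - kernel + 1, width - kernel + 1, filters

def maxpool_output(height, width, channels, pool):
    """Output shape of non-overlapping max pooling (stride equal to pool size)."""
    return height // pool, width // pool, channels

h, w, c = 28, 28, 1  # MNIST input: 28x28 grayscale

# Conv2D(32, kernel_size=(3, 3)): each filter has 3*3*c weights plus one bias.
conv_shape = conv2d_output(h, w, kernel=3, filters=32)
conv_params = (3 * 3 * c) * 32 + 32

# MaxPooling2D(pool_size=(2, 2)) has no trainable parameters.
pool_shape = maxpool_output(*conv_shape, pool=2)

# Flatten() turns the pooled feature maps into one long vector.
flat_size = pool_shape[0] * pool_shape[1] * pool_shape[2]

# Dense layers: one weight per (input, neuron) pair plus one bias per neuron.
dense_params = flat_size * 128 + 128
output_params = 128 * 10 + 10

total_params = conv_params + dense_params + output_params

print("After Conv2D:      ", conv_shape)
print("After MaxPooling2D:", pool_shape)
print("After Flatten:     ", flat_size)
print("Total parameters:  ", total_params)
```

These figures should line up with what `model_cnn.summary()` reports. Note that almost all of the parameters sit in the first Dense layer, not in the convolution.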

Next Steps and Advanced Topics

Once you're comfortable with the basics, you can explore:

- Hyperparameter Tuning: Adjusting the number of layers, neurons, learning rate, batch size, etc., to find the optimal model.

- Transfer Learning: Using a powerful, pre-trained model (like VGG16, ResNet) and fine-tuning it for your specific task. This is extremely common in computer vision and NLP.

- More Complex Architectures: Building Recurrent Neural Networks (RNNs) or Transformers for sequence data like text or time series.

- Deployment: Saving your trained model and serving it as an API using tools like Flask, FastAPI, or cloud platforms like Google Cloud Vertex AI (the successor to AI Platform) or AWS SageMaker.
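As a taste of the first item, hyperparameter tuning, here is a minimal sketch of a grid search in plain Python. Everything in it is an assumption for illustration: the search space is arbitrary, and `evaluate_config` is a hypothetical placeholder with a made-up scoring formula. In practice it would build, train, and validate a model for each configuration.

```python
from itertools import product

# Hypothetical search space; the names and values are illustrative only.
search_space = {
    "hidden_units": [64, 128],
    "learning_rate": [1e-2, 1e-3],
    "batch_size": [64, 128],
}

def evaluate_config(config):
    """Placeholder for 'train a model with this config and return validation accuracy'.

    The scoring formula below is made up so the sketch runs without training anything.
    """
    score = 0.90
    if config["hidden_units"] == 128:
        score += 0.05
    if config["learning_rate"] == 1e-3:
        score += 0.02
    if config["batch_size"] == 128:
        score -= 0.01
    return score

# Build every combination of the listed values.
names = list(search_space)
configs = [dict(zip(names, values)) for values in product(*search_space.values())]

best_config = max(configs, key=evaluate_config)

print(len(configs), "configurations tried")
print("Best:", best_config)
```

Grid search gets expensive quickly, since the number of configurations multiplies with every hyperparameter you add; that is why random search and dedicated tuning libraries are popular alternatives.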