Of course! Using Stanford's Named Entity Recognizer (NER) in Python is a common task. The process has evolved over time, with the Stanford CoreNLP server being the recommended modern approach for production systems, while the older Stanford NER tagger is simpler for basic scripts.

I'll guide you through both methods, starting with the recommended one.

Method 1: The Modern & Recommended Way - Stanford CoreNLP Server

This method involves running the Stanford CoreNLP server as a background process and then sending text to it via a simple HTTP request. This suits production applications: the models are loaded once and stay in memory, several clients can share one server, and the Java processing stays decoupled from your Python code.

Step 1: Download and Run the CoreNLP Server

- Download the CoreNLP package: Go to the Stanford CoreNLP download page and download the latest version. You'll get a `.zip` file.
- Unzip the file: Unzip the downloaded file to a convenient location, for example, `C:\stanford-corenlp-4.5.5` (Windows) or `/home/user/stanford-corenlp-4.5.5` (Linux/macOS).
- Start the server: The package already bundles the default English models (a `stanford-corenlp-<version>-models.jar` file in the unzipped folder), so English needs no separate model download. Models for other languages come as separate JAR files that you place in the same folder. Start the server from a terminal inside the unzipped directory:

```bash
# Navigate to the CoreNLP directory
cd /path/to/stanford-corenlp-4.5.5

# Start the server on port 9000 (-timeout is the per-request limit in milliseconds)
java -mx4g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -port 9000 -timeout 15000
```

The `-mx4g` flag lets the Java process use up to 4 GB of memory. Adjust it if you run into memory issues.

- Verify the server is running: Open your web browser and go to `http://localhost:9000`. You should see a page with a text box and some information about the server. If you see this, the server is running correctly.
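If you'd rather run that check from Python (for example, at the start of a script that will send text to the server), here is a minimal sketch using only the standard library. It simply requests the server's root page and reports whether it answered with a success status, the same check as opening the page in a browser. The helper name `corenlp_is_up` and its default URL are illustrative assumptions, not part of CoreNLP.

```python
import urllib.request


def corenlp_is_up(url: str = "http://localhost:9000/", timeout: float = 2.0) -> bool:
    """Return True if the server at `url` answers an HTTP GET with a 2xx status.

    Hypothetical helper: it only confirms that a web server responds at that
    address; it does not run any annotators.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError and HTTPError both subclass OSError: connection refused,
        # timeouts, and HTTP error statuses all land here
        return False


if __name__ == "__main__":
    if corenlp_is_up():
        print("CoreNLP server is running.")
    else:
        print("No response on port 9000; is the server started?")
```

Keeping the timeout short means a script fails fast with a clear message instead of hanging on its first annotation request.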

Step 2: Python Code to Query the Server

Now, you can write a simple Python script to send text to the server and get the NER results. We'll use the popular requests library.

First, install it if you haven't already:

```bash
pip install requests
```

Now, here's the Python script:

```python
import json

import requests

# Address of the running Stanford CoreNLP server
url = "http://localhost:9000/"

# Annotators to run and the output format, sent as the JSON-encoded "properties" URL parameter.
# 'ner' depends on 'pos' and 'lemma', so they are listed explicitly.
properties = {
    "annotators": "tokenize,ssplit,pos,lemma,ner",
    "outputFormat": "json",
}

# The text you want to analyze
text_to_analyze = "Barack Obama was born in Hawaii. He was the 44th President of the United States."

# Send the POST request: the server expects the raw text in the request body.
# Encoding to UTF-8 keeps non-ASCII text intact.
response = requests.post(
    url,
    params={"properties": json.dumps(properties)},
    data=text_to_analyze.encode("utf-8"),
    timeout=30,
)

# Check if the request was successful
if response.status_code == 200:
    # The response is in JSON format and holds a list of sentences
    result = response.json()
    for sentence in result["sentences"]:
        # Each sentence has a list of tokens (words)
        for token in sentence["tokens"]:
            # Each token has the original text, its character offsets, and its NER tag
            original_text = token["originalText"]
            ner_tag = token["ner"]
            print(f"Word: {original_text:<15} NER Tag: {ner_tag}")
else:
    print(f"Error: {response.status_code}")
    print(response.text)
```
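The long percent-encoded URL is just a JSON object passed as the `properties` query parameter. Here is a minimal sketch of building that URL with the standard library instead of encoding it by hand. The helper name `build_corenlp_url` is hypothetical; CoreNLP itself only ever sees the final URL.

```python
import json
from urllib.parse import quote, urlencode


def build_corenlp_url(base_url, annotators, output_format="json"):
    """Return base_url with CoreNLP settings JSON-encoded in the `properties` query parameter.

    Hypothetical helper for illustration only.
    """
    properties = {
        "annotators": ",".join(annotators),  # e.g. "tokenize,ssplit,ner"
        "outputFormat": output_format,
    }
    # json.dumps builds the JSON text; quote_via=quote percent-encodes spaces as %20
    query = urlencode({"properties": json.dumps(properties)}, quote_via=quote)
    return f"{base_url}?{query}"


url = build_corenlp_url("http://localhost:9000/", ["tokenize", "ssplit", "ner"])
print(url)
```

Called with `["tokenize", "ssplit", "ner"]`, this reproduces the hard-coded URL character for character. Changing the list (for example, adding `"pos"` and `"lemma"`) changes the pipeline without hand-editing escape codes.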

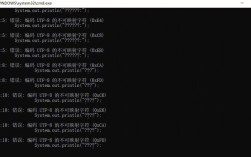

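Example output of the script (exact labels depend on your CoreNLP version and NER settings):

```text
Word: Barack          NER Tag: PERSON
Word: Obama           NER Tag: PERSON
Word: was             NER Tag: O
Word: born            NER Tag: O
Word: in              NER Tag: O
Word: Hawaii          NER Tag: LOCATION
Word: .               NER Tag: O
Word: He              NER Tag: O
Word: was             NER Tag: O
Word: the             NER Tag: O
Word: 44th            NER Tag: O
Word: President       NER Tag: O
Word: of              NER Tag: O
Word: the             NER Tag: O
Word: United          NER Tag: LOCATION
Word: States          NER Tag: LOCATION
Word: .               NER Tag: O
```

With its default settings, CoreNLP 4.x also applies fine-grained and numeric entity labels, so you may see more specific tags than shown here, for example `STATE_OR_PROVINCE` for Hawaii, `COUNTRY` for United States, `ORDINAL` for 44th, and `TITLE` for President.

Method 2: The Classic Way - Standalone Stanford NER Tagger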

This method calls the older, standalone `stanford-ner.jar` file from Python through a thin wrapper library. It's simpler for a one-off script but less flexible for production.

Step 1: Download the Stanford NER Package

- Download the NER package: Go to the Stanford NER download page and download the "Full Stanford NER distribution".

- Unzip the file.

You will find a `stanford-ner.jar` file and a folder named `classifiers`, which contains the pre-trained model files (e.g., `english.muc.7class.distsim.crf.ser.gz`).
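Before wiring these paths into Python, it can help to confirm they exist. A minimal sketch, assuming the unzipped folder has `stanford-ner.jar` at its top level and the models under `classifiers/` as described above; the function name `find_ner_files` is hypothetical.

```python
from pathlib import Path


def find_ner_files(ner_dir):
    """Return (jar_path, model_paths) for an unzipped Stanford NER distribution.

    Hypothetical helper: assumes stanford-ner.jar sits directly in ner_dir and
    the pre-trained CRF models (*.crf.ser.gz) live in ner_dir/classifiers.
    """
    root = Path(ner_dir)
    jar_path = root / "stanford-ner.jar"
    if not jar_path.is_file():
        raise FileNotFoundError(f"stanford-ner.jar not found in {root}")
    # Sorted so the result is stable across runs and platforms
    model_paths = sorted((root / "classifiers").glob("*.crf.ser.gz"))
    if not model_paths:
        raise FileNotFoundError(f"no *.crf.ser.gz models in {root / 'classifiers'}")
    return jar_path, model_paths
```

For example, `jar, models = find_ner_files("/path/to/stanford-ner")` followed by printing `[m.name for m in models]` shows which classifiers you can choose from.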

Step 2: Set Up Your Python Environment

You need two things:

- The

stanfordcorenlpPython library. - A Java Development Kit (JDK) installed on your system, as the library runs the Java JAR file.

Install the Python library:

pip install stanfordcorenlp

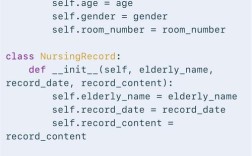

Step 3: Python Code to Use the Tagger

You need to tell the StanfordCoreNLP class where to find the stanford-ner.jar file and the model file you want to use.

from stanfordcorenlp import StanfordCoreNLP

# Path to the stanford-ner.jar file

# IMPORTANT: Change this path to where you unzipped the file

jar_path = '/path/to/stanford-ner.jar'

# Path to the pre-trained model file

# IMPORTANT: Change this path to where you unzipped the file

model_path = '/path/to/classifiers/english.muc.7class.distsim.crf.ser.gz'

# Initialize the NLP object

# This can take a few seconds as it starts the Java process

nlp = StanfordCoreNLP(jar_path, model_path=model_path)

text_to_analyze = "Barack Obama was born in Hawaii. He was the 44th President of the United States."

# The 'ner' tag performs Named Entity Recognition

# The output is a list of (token, tag) tuples

ner_tags = nlp.ner(text_to_analyze)

# Print the results

for token, tag in ner_tags:

print(f"Word: {token:<15} NER Tag: {tag}")

# Don't forget to close the connection when you're done

# This shuts down the Java process

nlp.close()
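The tagger above runs Java under the hood, so if it fails with a Java-related error, first confirm that a `java` executable is reachable. A minimal troubleshooting sketch using only the standard library; the helper name `java_version` is hypothetical, and it only checks your PATH.

```python
import shutil
import subprocess


def java_version():
    """Return the first line of `java -version` output, or None if java is not on PATH.

    Hypothetical troubleshooting helper.
    """
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` traditionally prints to stderr, so capture both streams
    result = subprocess.run([java, "-version"], capture_output=True, text=True, check=False)
    output = (result.stderr or result.stdout).strip()
    return output.splitlines()[0] if output else None


if __name__ == "__main__":
    version = java_version()
    print(version or "Java not found: install a JDK and make sure `java` is on your PATH.")
```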

Example output of the tagging script (illustrative; the tags can differ from the CoreNLP server's because the models differ, and the Stanford English classifiers emit plain labels such as PERSON, LOCATION, and ORGANIZATION):

```text
Word: Barack          NER Tag: PERSON
Word: Obama           NER Tag: PERSON
Word: was             NER Tag: O
Word: born            NER Tag: O
Word: in              NER Tag: O
Word: Hawaii          NER Tag: LOCATION
Word: .               NER Tag: O
Word: He              NER Tag: O
Word: was             NER Tag: O
Word: the             NER Tag: O
Word: 44th            NER Tag: O
Word: President       NER Tag: O
Word: of              NER Tag: O
Word: the             NER Tag: O
Word: United          NER Tag: ORGANIZATION
Word: States          NER Tag: ORGANIZATION
Word: .               NER Tag: O
```

Comparison and Recommendation

| Feature | Stanford CoreNLP Server (Method 1) | Standalone Stanford NER Tagger (Method 2) |
|---|---|---|
| How it Works | Client-server model. Python sends HTTP requests. | A Python wrapper calls a Java JAR file directly. |
| Scalability | Excellent. Multiple Python scripts can connect to one server. | Poor. Each script starts its own Java process, which is resource-intensive. |
| Performance | Fast for repeated requests, because the server loads the models once and keeps them in memory. | Slower. Starting a JVM and reloading the model each time is costly. |
| Ease of Use | Simple once the server is running. The code is very clean. | Simple for a single script, but requires managing paths to JAR and model files. |
| Flexibility | High. Can perform many NLP tasks (POS tagging, parsing, etc.) by changing the `annotators` property in the request. | Low. Primarily for NER. Other tasks require different wrappers. |
| Best For | Production applications, web services, and any repeated use. | Quick experiments, learning, or simple, one-off scripts. |

Final Recommendation:

For any serious project, use Method 1 (Stanford CoreNLP Server). The setup is a one-time effort, and the performance and scalability benefits are substantial. Use Method 2 only for a quick test when you don't want to run a server.
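Whichever method you choose, the results come back one tag per token, so a multi-word name such as "Barack Obama" arrives as two separate PERSON tokens. Here is a minimal post-processing sketch that merges consecutive tokens sharing the same non-`O` tag into entity spans. The function name `group_entities` is hypothetical, and the "same tag in a row" rule is an assumption: plain tags carry no boundary marker, so two distinct adjacent entities of the same type get merged.

```python
from itertools import groupby


def group_entities(tagged_tokens):
    """Merge runs of consecutive (token, tag) pairs that share the same non-'O' tag.

    Returns a list of (entity_text, tag) tuples.
    """
    entities = []
    for tag, run in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if tag != "O":
            entities.append((" ".join(token for token, _ in run), tag))
    return entities


# Illustrative input shaped like the per-token output (tags are examples, not real results)
sample = [("Barack", "PERSON"), ("Obama", "PERSON"), ("was", "O"), ("born", "O"),
          ("in", "O"), ("Hawaii", "LOCATION"), (".", "O")]
print(group_entities(sample))  # → [('Barack Obama', 'PERSON'), ('Hawaii', 'LOCATION')]
```

For Method 2, pass the list of `(token, tag)` tuples the tagger returns. For Method 1, build the pairs from the JSON tokens as `(token["originalText"], token["ner"])`.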