Of course! The datatable library is a fantastic tool in Python, especially for users coming from an R or Pandas background who need to work with very large datasets.

Here’s a comprehensive guide to datatable, covering what it is, why you'd use it, how to install it, and how it compares to Pandas with clear code examples.

What is datatable?

datatable (conventionally imported as dt) is a Python library for manipulating tabular data. Its primary goal is to provide a high-performance, memory-efficient DataFrame object.

Think of it as a supercharged version of Pandas, optimized for speed and memory usage, particularly for datasets that are too large to fit into your computer's RAM.

Key Strengths:

- Extreme Speed: Operations are often much faster than Pandas on large datasets, though the actual speedup depends on the operation, the data, and the hardware. This is because it's written in C++ and uses a multi-threaded, columnar approach.

- Low Memory Footprint: It's designed to handle datasets much larger than your available RAM by using memory-mapped files and efficient data representations.

- Familiar API: The Frame[rows, columns] indexing syntax is modeled on R's data.table rather than on Pandas, but the core concepts (frames, columns, grouping) carry over, so Pandas users can pick it up quickly.

- Type Inference: It automatically infers the best data types for your columns, saving memory and speeding up computations.
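To see the type inference in action, here is a minimal sketch. The helper name inferred_stypes is made up for this example, and the import guard is only there so the snippet still loads in an environment where datatable is not installed.

```python
try:
    import datatable as dt
except ImportError:  # datatable may not be installed in every environment
    dt = None


def inferred_stypes(columns):
    """Return {column name: storage-type name} as inferred by dt.Frame.

    `columns` is a dict mapping column names to lists of values.
    (Hypothetical helper, not part of datatable itself.)
    """
    frame = dt.Frame(columns)
    # frame.names and frame.stypes are parallel tuples, one entry per column
    return {name: stype.name for name, stype in zip(frame.names, frame.stypes)}
```

Calling inferred_stypes({'ID': [1, 2, 3], 'Score': [0.5, 1.5, 2.5], 'Name': ['a', 'b', 'c']}) should report a float64 column for Score and a str32 column for Name; the integer width chosen for ID can differ between datatable versions.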

Installation

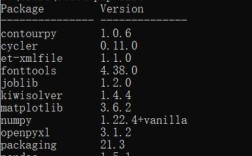

Installation is straightforward using pip. The package is available on PyPI.

pip install datatable

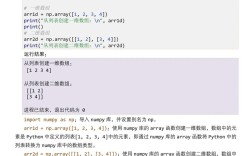

Core Concepts: The Frame Object

The central data structure in datatable is the Frame. It's the equivalent of a Pandas DataFrame.

You can create a Frame from various sources:

- A Python dictionary

- A list of lists or tuples

- A CSV file (very fast)

- An in-memory Pandas DataFrame

import datatable as dt

# 1. From a dictionary

data = {

'ID': [1, 2, 3, 4, 5],

'Name': ['Alice', 'Bob', 'Charlie', 'David', 'Eva'],

'Age': [25, 30, 35, 28, 32],

'Salary': [70000, 80000, 120000, 75000, 90000]

}

frame1 = dt.Frame(data)

print("--- Frame from Dictionary ---")

print(frame1)

--- Frame from Dictionary ---

| ID Name Age Salary

-- + ---- ----- ----- ------

0 | 1 Alice 25 70000

1 | 2 Bob 30 80000

2 | 3 Charlie 35 120000

3 | 4 David 28 75000

4 | 5 Eva 32 90000

[5 rows x 4 columns]

datatable vs. Pandas: A Practical Comparison

Let's see how common operations are performed in both libraries.

A. Data Inspection

Pandas:

import pandas as pd

df = pd.DataFrame(data)

print(df.head()) # First 5 rows

df.info() # Column info and memory usage (prints directly, returns None)

print(df.describe()) # Descriptive statistics

datatable:

# The Frame object itself is the main inspection tool

print(frame1) # Prints the first and last few rows

# Get basic info

print(f"\nShape: {frame1.shape}") # (rows, columns)

print(f"\nColumn names: {frame1.names}")

print(f"\nColumn types:\n{frame1.stypes}")

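# Get descriptive statistics

# Frame has no single describe()-style call; compute statistics per column

# Each of these returns a one-row Frame (NA for non-numeric columns)

print(f"\nMean:\n{frame1.mean()}")

print(f"\nMin:\n{frame1.min()}")

print(f"\nMax:\n{frame1.max()}")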

B. Selecting and Filtering Data (The "DT Expression" Language)

This is where datatable shines. Instead of using .loc[], .iloc[], or .query(), you use a powerful and concise expression language.

Pandas:

# Select columns

print(df[['Name', 'Salary']])

# Filter rows

print(df[df['Age'] > 30])

datatable:

# Select columns using a list of names

print("--- Selecting 'Name' and 'Salary' ---")

print(frame1[:, ['Name', 'Salary']])

# Filter rows using a boolean f-expression

# dt.f.Age refers to the 'Age' column of the frame being indexed

# The syntax is Frame[rows, columns]

print("\n--- Filtering for Age > 30 ---")

print(frame1[dt.f.Age > 30, :])

More complex filtering:

# You can chain conditions. The '&' operator is for AND.

# Note: You must wrap conditions in `()` for boolean logic.

print("\n--- Filtering for Age > 30 AND Salary > 85000 ---")

filtered_frame = frame1[(dt.f.Age > 30) & (dt.f.Salary > 85000), :]

print(filtered_frame)
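Because the syntax is Frame[rows, columns], a row filter and a column selection can go into a single indexing step. The following is a minimal sketch of that idea; the function name names_and_salaries_over is invented for illustration, and the import guard only keeps the snippet loadable where datatable is not installed.

```python
try:
    import datatable as dt
except ImportError:  # datatable may not be installed in every environment
    dt = None


def names_and_salaries_over(frame, min_age):
    """Keep rows with Age > min_age and only the Name and Salary columns.

    Assumes `frame` has columns named 'Age', 'Name' and 'Salary'.
    (Hypothetical helper, not part of datatable itself.)
    """
    # First slot: row filter as an f-expression; second slot: column list
    return frame[dt.f.Age > min_age, ['Name', 'Salary']]
```

With the sample frame from earlier, names_and_salaries_over(frame1, 30) returns a two-row Frame containing Charlie and Eva.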

C. Adding and Modifying Columns

Pandas:

df['Bonus'] = df['Salary'] * 0.10

df['Senior'] = df['Age'] > 30

datatable:

# The `dt.f` namespace refers to columns of the frame being modified

# You can assign a new column or modify an existing one

frame1['Bonus'] = dt.f.Salary * 0.10

frame1['Senior'] = dt.f.Age > 30

print("--- Frame with new columns ---")

print(frame1)

D. Grouping and Aggregating

Pandas:

# Group by a column and calculate the mean salary

avg_salary_by_age_group = df.groupby('Senior')['Salary'].mean().reset_index()

print(avg_salary_by_age_group)

datatable:

# `dt.by()` in the third slot is used for grouping

# The result is a new Frame

avg_salary_by_senior = frame1[:, dt.mean(dt.f.Salary), dt.by(dt.f.Senior)]

print("\n--- Average Salary by Senior Status ---")

print(avg_salary_by_senior)

You can perform multiple aggregations:

# Multiple aggregations using a dictionary

multi_agg = frame1[:, {

'Avg_Salary': dt.mean(dt.f.Salary),

'Max_Age': dt.max(dt.f.Age)

}, dt.by(dt.f.Senior)]

print("\n--- Multiple Aggregations ---")

print(multi_agg)

E. Sorting

Pandas:

# Sort by Salary in descending order

df_sorted = df.sort_values(by='Salary', ascending=False)

datatable:

# Frame.sort() returns a new, sorted Frame; the original is left unchanged

sorted_asc = frame1.sort('Salary') # Ascending by default

print("\n--- Sorted by Salary (Ascending) ---")

print(sorted_asc)

# For descending order, negate the column inside dt.sort()

sorted_desc = frame1[:, :, dt.sort(-dt.f.Salary)]

print("\n--- Sorted by Salary (Descending) ---")

print(sorted_desc)

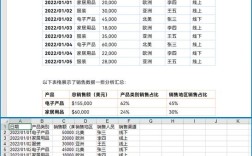

When to Use datatable vs. Pandas

| Feature | Pandas | datatable |
|---|---|---|
| Primary Use Case | General-purpose data analysis, exploration, and smaller datasets (up to a few GB). | Large-scale data processing, ETL, and datasets larger than RAM. |
| Performance | Good for small/medium data. Slower on large data because most operations are single-threaded. | Very fast. Multi-threaded by default, optimized for large datasets. |
| Memory Usage | Can be memory-intensive. Often stores data as 64-bit floats/ints by default. | Highly memory-efficient. Uses 32-bit and 8-bit types where possible. |
| API | Mature, extensive, and well-documented. Huge community. | Modeled on R's data.table; not a 1:1 replacement for Pandas. Some Pandas functions are missing. |
| Ecosystem | The king of the data science ecosystem. Integrates with everything (Scikit-learn, Matplotlib, etc.). | Smaller ecosystem. Can convert to/from Pandas, but the conversion adds overhead. |
| Learning Curve | The standard for data analysis in Python. | Low for Pandas users. Main hurdle is learning the expression language. |

Recommendation:

- Start with Pandas. It's the right tool for 90% of data analysis tasks, especially if you need to integrate with other libraries or are just starting.

- Switch to datatable when:

  - Your dataset is too large for your RAM and you get MemoryError.

  - Your Pandas operations are taking too long and you need a significant speed boost.

  - You are performing heavy ETL tasks on large CSV files where reading and writing speed is critical.

Working with Large Files (A Key Advantage)

datatable is famous for its incredibly fast CSV reader.
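As a small illustration of the reader, here is a hedged sketch that writes a Frame to a temporary CSV file with to_csv() and loads it back with dt.fread(). The helper name csv_round_trip and the file name sample.csv are invented for this example, and the import guard only keeps the snippet loadable where datatable is not installed.

```python
import os
import tempfile

try:
    import datatable as dt
except ImportError:  # datatable may not be installed in every environment
    dt = None


def csv_round_trip(columns):
    """Write `columns` to a temporary CSV and read it back with fread.

    Returns (column names, column values) of the re-loaded Frame.
    (Hypothetical helper, not part of datatable itself.)
    """
    frame = dt.Frame(columns)
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sample.csv")
        frame.to_csv(path)      # write the Frame as CSV
        loaded = dt.fread(path)  # column types are detected automatically
        # Copy the data out before the temporary directory is removed
        return loaded.names, loaded.to_list()
```

For a real workload you would call dt.fread() directly on the path of your large file; the round trip here only keeps the example self-contained.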