Of course! This is a very common and important topic in Java. Let's break it down clearly.

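The key thing to understand first is that Java's String API is defined in terms of UTF-16 code units. When you talk about a "Java Unicode string," you're almost always talking about a java.lang.String object, which already behaves as a UTF-16 sequence (since Java 9 the JVM may store Latin-1-only strings more compactly, but that is invisible to your code).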

The real question you're asking is: "How do I convert this internal UTF-16 representation into a sequence of UTF-8 bytes?"

Here’s a complete guide covering the different scenarios.

The Core Concept: String -> byte[] (The Standard Way)

The most common task is to get a UTF-8 byte array from a String. You do this using the String.getBytes() method, specifying the StandardCharsets.UTF_8 character set.

Method Signature:

byte[] getBytes(Charset charset)

Example:

This is the standard, recommended approach for converting a String to a UTF-8 byte array.

import java.nio.charset.StandardCharsets;

public class UnicodeToUtf8 {

public static void main(String[] args) {

// Our input string with various characters

// 'A' (ASCII), 'é' (Latin), '€' (Euro symbol), '你' (Chinese)

String originalString = "A é € 你";

System.out.println("Original String: " + originalString);

System.out.println("Original String length (chars): " + originalString.length());

// Convert the String to a UTF-8 byte array

byte[] utf8Bytes = originalString.getBytes(StandardCharsets.UTF_8);

System.out.println("\nUTF-8 Byte Array:");

// Print the bytes in a readable format

for (byte b : utf8Bytes) {

System.out.printf("%02X ", b);

}

System.out.println("\nByte Array Length: " + utf8Bytes.length);

// --- Verification: Convert back to String ---

String decodedString = new String(utf8Bytes, StandardCharsets.UTF_8);

System.out.println("\nDecoded String from UTF-8 bytes: " + decodedString);

System.out.println("Are strings equal? " + originalString.equals(decodedString));

}

}

Output:

Original String: A é € 你

Original String length (chars): 7

UTF-8 Byte Array:

41 20 C3 A9 20 E2 82 AC 20 E4 BD A0

Byte Array Length: 12

Decoded String from UTF-8 bytes: A é € 你

Are strings equal? true

Why does the byte array length (12) differ from the string length (7)?

- A -> 41 (1 byte)
- (space) -> 20 (1 byte)
- é -> C3 A9 (2 bytes, because it's not in the ASCII range)
- (space) -> 20 (1 byte)
- € -> E2 82 AC (3 bytes)
- (space) -> 20 (1 byte)
- 你 -> E4 BD A0 (3 bytes)

Total: 1 + 1 + 2 + 1 + 3 + 1 + 3 = 12 bytes. This demonstrates how UTF-8 uses a variable number of bytes (1 to 4) to encode characters.
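The example string never reaches the 4-byte case, so here is a minimal sketch that does. It assumes a hypothetical helper class named Utf8Widths (not part of the original example) and uses U+1F600, a code point outside the Basic Multilingual Plane. Non-ASCII characters are written as Unicode escapes so the result does not depend on the source file's encoding.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper class, for illustration only.
class Utf8Widths {

    // Number of bytes the UTF-8 encoding of s occupies.
    static int utf8Length(String s) {
        return s.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        // U+1F600 is stored as a surrogate pair: two char values, one code point.
        String emoji = new String(Character.toChars(0x1F600));

        System.out.println("chars:       " + emoji.length());                          // 2
        System.out.println("code points: " + emoji.codePointCount(0, emoji.length())); // 1
        System.out.println("UTF-8 bytes: " + utf8Length(emoji));                       // 4

        // One sample per UTF-8 width: 'A', e-acute, euro sign, emoji.
        String[] samples = {"A", "\u00E9", "\u20AC", emoji};
        for (String s : samples) {
            System.out.printf("U+%04X -> %d byte(s)%n", s.codePointAt(0), utf8Length(s));
        }
    }
}
```

Note that length() counts UTF-16 code units, so neither the char count nor the byte count equals the number of user-visible characters once supplementary code points are involved.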

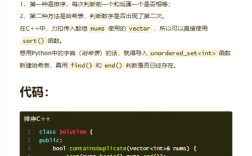

The "Old Way" (Not Recommended)

Before Java 7 introduced StandardCharsets, you had to look the charset up by name with Charset.forName(), which is more verbose and can fail at runtime.

import java.nio.charset.Charset;

public class UnicodeToUtf8OldWay {

public static void main(String[] args) {

String originalString = "A é € 你";

// This is the pre-Java 7 way: the charset is looked up by name at runtime

Charset utf8Charset = Charset.forName("UTF-8");

byte[] utf8Bytes = originalString.getBytes(utf8Charset);

System.out.println("Byte Array (Old Way): " + java.util.Arrays.toString(utf8Bytes));

}

}

Why is StandardCharsets.UTF_8 better?

- Type Safety: It's a constant that the compiler checks. Charset.forName("UTF-8") takes a string, so a misspelled name only fails at runtime, with an UnsupportedCharsetException (or an IllegalCharsetNameException if the name contains illegal characters).
- Performance: The StandardCharsets constants are predefined and guaranteed to be available on every Java platform, so no lookup by name is needed.
- Readability: It's self-documenting and clear.
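To make the runtime-failure point concrete, here is a small sketch of what Charset.forName() does with a correct name, a misspelled name, and a syntactically illegal name. The class name CharsetLookup and the describe helper are illustrative assumptions, not part of any library.

```java
import java.nio.charset.Charset;
import java.nio.charset.IllegalCharsetNameException;
import java.nio.charset.UnsupportedCharsetException;

// Hypothetical helper class, for illustration only.
class CharsetLookup {

    // Reports how Charset.forName reacts to the given name.
    static String describe(String name) {
        try {
            return "ok: " + Charset.forName(name).name();
        } catch (IllegalCharsetNameException e) {
            // The name contains characters that are not allowed in charset names.
            return "illegal name";
        } catch (UnsupportedCharsetException e) {
            // The name is well formed, but no such charset is available.
            return "unsupported";
        }
    }

    public static void main(String[] args) {
        System.out.println(describe("UTF-8")); // canonical name
        System.out.println(describe("UFT-8")); // transposed letters
        System.out.println(describe("UTF 8")); // a space is not a legal character
    }
}
```

Both exceptions are unchecked, so nothing forces the caller to handle them; with StandardCharsets.UTF_8 a typo is a compile error instead.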

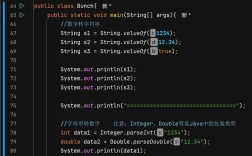

Writing UTF-8 to a File

A very common use case is to write a String directly to a file in UTF-8 encoding. The best tool for this is Files.write() from the NIO (New I/O) API.

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class WriteUtf8ToFile {

public static void main(String[] args) {

String content = "This file contains UTF-8 text: é € 你";

Path path = Paths.get("output.txt");

try {

// The StandardCharsets.UTF_8 argument is crucial!

Files.write(path, content.getBytes(StandardCharsets.UTF_8));

System.out.println("File 'output.txt' written successfully in UTF-8.");

} catch (IOException e) {

System.err.println("Error writing to file: " + e.getMessage());

}

}

}

If you were using older FileOutputStream and OutputStreamWriter, it would look like this (the NIO way is preferred):

// Old, more verbose way

try (FileOutputStream fos = new FileOutputStream("output_old.txt");

OutputStreamWriter osw = new OutputStreamWriter(fos, StandardCharsets.UTF_8)) {

osw.write(content);

} catch (IOException e) {

e.printStackTrace();

}

Reading UTF-8 from a File

Naturally, you'll also need to read files. Use Files.readAllBytes() and then create a String from the bytes.

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class ReadUtf8FromFile {

public static void main(String[] args) {

Path path = Paths.get("output.txt"); // The file we created earlier

try {

// Read all bytes from the file

byte[] fileBytes = Files.readAllBytes(path);

// Convert the UTF-8 byte array back to a String

String content = new String(fileBytes, StandardCharsets.UTF_8);

System.out.println("Content read from file:");

System.out.println(content);

} catch (IOException e) {

System.err.println("Error reading from file: " + e.getMessage());

}

}

}
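The write and read steps can also be combined into a single round-trip check. The sketch below is one way to do that, assuming a hypothetical class named Utf8RoundTrip and a temporary file rather than output.txt, so it leaves nothing behind. The sample text uses Unicode escapes to stay independent of the source file's encoding.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper class, for illustration only.
class Utf8RoundTrip {

    // Writes text to a temporary file as UTF-8, reads it back, and returns the result.
    static String roundTrip(String text) {
        try {
            Path tmp = Files.createTempFile("utf8-demo", ".txt");
            try {
                Files.write(tmp, text.getBytes(StandardCharsets.UTF_8));
                return new String(Files.readAllBytes(tmp), StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(tmp);
            }
        } catch (IOException e) {
            // Rethrown unchecked to keep the example short.
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String original = "A \u00E9 \u20AC \u4F60";
        String restored = roundTrip(original);
        System.out.println("Round trip preserved the text: " + original.equals(restored));
    }
}
```

The round trip only succeeds because the same charset is named on both sides; writing as UTF-8 and reading with a different charset would corrupt the non-ASCII characters.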

Advanced: Handling Invalid UTF-8 Sequences

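What happens if you decode a byte array that is not valid UTF-8? The new String(bytes, StandardCharsets.UTF_8) constructor never throws: it silently replaces each malformed sequence with the Unicode replacement character (U+FFFD). A CharsetDecoder, by contrast, reports errors by default and throws a MalformedInputException from decode().

To choose the behavior explicitly, configure a CharsetDecoder.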

import java.nio.ByteBuffer;

import java.nio.CharBuffer;

import java.nio.charset.CharacterCodingException;

import java.nio.charset.CharsetDecoder;

import java.nio.charset.CodingErrorAction;

import java.nio.charset.StandardCharsets;

public class HandleInvalidUtf8 {

public static void main(String[] args) {

// Create a byte sequence that is NOT valid UTF-8:

// the byte 0xFF can never appear in well-formed UTF-8

byte[] trulyInvalidBytes = {(byte) 0xFF, (byte) 0xFF, (byte) 0xFF};

System.out.println("Attempting to decode invalid bytes...");

// Configure the decoder to replace invalid sequences instead of failing

CharsetDecoder decoder = StandardCharsets.UTF_8.newDecoder()

.onMalformedInput(CodingErrorAction.REPLACE) // Replace bad sequences with the Unicode replacement character (U+FFFD)

.onUnmappableCharacter(CodingErrorAction.REPLACE);

try {

CharBuffer charBuffer = decoder.decode(ByteBuffer.wrap(trulyInvalidBytes));

String result = charBuffer.toString();

System.out.println("Decoded result with REPLACE: " + result);

System.out.println("Contains replacement char? " + result.contains("\uFFFD"));

} catch (CharacterCodingException e) {

System.err.println("Decoding failed even with replacement strategy: " + e.getMessage());

}

}

}

Common CodingErrorActions:

- REPORT (default): Throws an exception.
- IGNORE: Drops the malformed input and continues.
- REPLACE: Replaces the malformed input with a default character (usually \uFFFD).
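The three actions can be compared side by side on the same input. This is a minimal sketch, assuming a hypothetical class named ErrorActions whose decode helper returns a description of the failure instead of throwing; the input is one invalid byte (0xFF) between two ASCII letters.

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;

// Hypothetical helper class, for illustration only.
class ErrorActions {

    // Decodes bytes as UTF-8, applying the given action to malformed and
    // unmappable input. Returns a description of the failure instead of throwing.
    static String decode(byte[] bytes, CodingErrorAction action) {
        try {
            return StandardCharsets.UTF_8.newDecoder()
                    .onMalformedInput(action)
                    .onUnmappableCharacter(action)
                    .decode(ByteBuffer.wrap(bytes))
                    .toString();
        } catch (CharacterCodingException e) {
            return "error: " + e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        byte[] bad = {'a', (byte) 0xFF, 'b'};

        System.out.println("REPORT : " + decode(bad, CodingErrorAction.REPORT));
        System.out.println("IGNORE : " + decode(bad, CodingErrorAction.IGNORE));
        System.out.println("REPLACE: " + decode(bad, CodingErrorAction.REPLACE));

        // The String constructor behaves like REPLACE and never throws.
        String viaConstructor = new String(bad, StandardCharsets.UTF_8);
        System.out.println("Constructor matches REPLACE: "
                + viaConstructor.equals(decode(bad, CodingErrorAction.REPLACE)));
    }
}
```

REPORT is usually the safer choice when corrupted input must not go unnoticed; IGNORE and REPLACE both lose information about what the original bytes were.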

Summary

| Task | Recommended Method | Key Point |

|---|---|---|

| String to UTF-8 byte array | `my |