Of course! Let's dive deep into PyFilesystem (FS). It's a fantastic Python library that you'll want in your toolkit, especially if you work with files in a flexible, cross-platform way.

What is PyFileSystem (FS)?

At its core, PyFileSystem (FS) is a virtual filesystem layer for Python.

Think of it as a universal adapter for all kinds of filesystems. Instead of writing different code to handle a local disk, a ZIP file, an FTP server, a cloud storage bucket (like S3), or an in-memory filesystem, you use the same set of commands for all of them.

The core idea is to provide a common interface (fs.base.FS) for any storage system. Once you have an FS object, you can interact with it using a consistent API, regardless of its physical location or type.

The "Aha!" Moment: Why Use It?

Imagine you have a function that processes a directory of images.

The "old" way (without PyFileSystem):

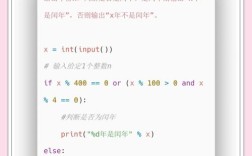

```python
import os
import shutil
from zipfile import ZipFile


def process_images(local_path):
    # 1. Process files from a local directory
    for filename in os.listdir(local_path):
        if filename.endswith('.jpg'):
            # ... process the image ...
            print(f"Processing {filename} from local disk")

    # 2. Now, you want to archive them into a ZIP file
    with ZipFile('archive.zip', 'w') as zipf:
        for filename in os.listdir(local_path):
            if filename.endswith('.jpg'):
                zipf.write(os.path.join(local_path, filename))

# This code is tightly coupled to the local filesystem.
# To process images from an FTP server, you'd need to rewrite everything.
```

The "PyFileSystem" way:

```python
from fs import open_fs
from fs.memoryfs import MemoryFS
from fs.osfs import OSFS
from fs.zipfs import ZipFS


def process_images(fs):  # The function only needs an FS object!
    """Processes images from any given filesystem."""
    print(f"Processing files from: {fs.__class__.__name__}")
    # walk.files() yields path strings such as '/image1.jpg'
    for path in fs.walk.files():
        if path.endswith('.jpg'):
            # The API is the same whether it's local, memory, or zip!
            with fs.open(path, 'rb') as f:
                # ... process the image from the file object `f` ...
                print(f" - Processing {path}")


# --- Now, let's use it with different backends ---

# 1. With a local directory (the directory must already exist)
local_fs = OSFS('/path/to/your/images')
process_images(local_fs)

# 2. With an in-memory filesystem
mem_fs = MemoryFS()
# Let's add some files to it for demonstration
mem_fs.writetext('image1.jpg', 'fake image data')
mem_fs.writetext('image2.png', 'fake png data')
process_images(mem_fs)

# 3. With a ZIP file, without extracting it.
# write=True creates a new archive (replacing any existing one);
# the ZIP is written to disk when the block exits.
with ZipFS('archive.zip', write=True) as zip_fs:
    zip_fs.writetext('new_image.jpg', 'another fake image')

# open_fs() opens the existing archive (read-only) from an FS URL
with open_fs('zip://archive.zip') as zip_fs:
    process_images(zip_fs)
```

As you can see, the process_images function is completely decoupled from the underlying storage system. This is the power of PyFileSystem.

Key Concepts and Terminology

- FS object: This is the central object. You get one either by instantiating a backend class directly (e.g. OSFS('~')) or from an FS URL with fs.open_fs(). All operations happen on this object.
- Path: A string representing the location of a file or directory within the FS (e.g., '/data/images/logo.png'). Paths always use forward slashes, whatever the operating system.
- Info object: When you scan a directory (scandir()) or walk the filesystem with walk.info(), you get Info objects. These hold metadata such as the resource's name and whether it is a file or directory; requesting extra namespaces such as 'details' adds the size and modified time.
- Opener: The mechanism that turns an FS URL (e.g. 'zip://archive.zip' or 'mem://') into an FS object. You normally use openers through fs.open_fs().

The API: Common Operations

The API is designed to be intuitive and Pythonic.

| Operation | Local OS Equivalent | PyFileSystem API |
|---|---|---|
| Open a file | open('path/to/file.txt', 'r') | fs.open('path/to/file.txt', 'r') |
| Read a file | f.read() | fs.readbytes('path') or fs.readtext('path') |
| Write a file | f.write('data') | fs.writebytes('path', b'data') or fs.writetext('path', 'data') |
| Check existence | os.path.exists('path') | fs.exists('path') |
| Is it a file? | os.path.isfile('path') | fs.isfile('path') |
| Is it a dir? | os.path.isdir('path') | fs.isdir('path') |
| List a dir | os.listdir('path') | fs.listdir('path') |
| Get metadata | os.stat('path') | fs.getinfo('path', namespaces=['details']) |
| Make a dir | os.mkdir('path') | fs.makedir('path') |
| Remove a file | os.remove('path') | fs.remove('path') |
| Copy a file | shutil.copy('src', 'dst') | fs.copy('src', 'dst') |
| Move/Rename | os.rename('src', 'dst') | fs.move('src', 'dst') |

Installation

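```bash
pip install fs
```

The core fs package already includes the most common backends: local disk (OSFS), memory (MemoryFS), temporary directories (TempFS), ZIP and TAR archives (ZipFS, TarFS), and FTP (FTPFS). Other backends are separate packages, for example:

```bash
# Amazon S3
pip install fs-s3fs
# Google Cloud Storage
pip install fs-gcsfs
# SFTP (over SSH)
pip install fs.sshfs
```

Azure and other services are covered by third-party extensions; check the PyFilesystem extensions list for current package names.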

Practical Examples

Working with a Local Directory (OSFS)

```python
from fs.osfs import OSFS

# Open a directory; OSFS expands ~ to your home directory
with OSFS('~') as home_fs:
    # Open the Downloads subdirectory as its own (sub-)filesystem
    downloads_fs = home_fs.opendir('Downloads')
    print("Files in Downloads:")
    # listdir() requires a path; '/' is the root of downloads_fs
    for filename in downloads_fs.listdir('/'):
        print(f"- {filename}")

    # Check if a file exists
    if home_fs.exists('README.md'):
        print("\nFound README.md in home directory.")
        # Get its size (requires the 'details' namespace)
        info = home_fs.getinfo('README.md', namespaces=['details'])
        print(f"Size: {info.size} bytes")
```

Working with a ZIP File (ZipFS)

The magic here is that you can treat a ZIP archive like a normal directory.

```python
from fs.zipfs import ZipFS

# Create a new zip archive and add files (written when the block exits)
with ZipFS('my_archive.zip', write=True) as zip_fs:
    zip_fs.writetext('hello.txt', 'Hello from PyFileSystem!')
    zip_fs.writetext('notes.txt', 'These are some notes.')
print("Created my_archive.zip and added files.")

# Now, read from the existing zip archive without extracting it
with ZipFS('my_archive.zip') as zip_fs:
    print("\nContents of my_archive.zip:")
    # walk.files() yields path strings such as '/hello.txt'
    for path in zip_fs.walk.files():
        print(f"- {path}")
    # Read a specific file
    content = zip_fs.readtext('hello.txt')
    print(f"\nContent of hello.txt: '{content}'")
```

Working with In-Memory Files (MemoryFS)

Perfect for testing, temporary data, or when you don't want to touch the disk.

```python
import io

from fs.copy import copy_dir
from fs.memoryfs import MemoryFS
from fs.tarfs import TarFS

# Create an in-memory filesystem
mem_fs = MemoryFS()

# Build a directory structure
mem_fs.makedir('data')
mem_fs.makedir('data/images')

# Write some files
mem_fs.writetext('data/info.txt', 'Project metadata')
mem_fs.writetext('data/images/logo.png', 'fake png data')

# Interact with it just like a real filesystem
print("Files in memory FS:")
for path in mem_fs.walk.files():
    print(path)

# You can also pack the files into a TAR archive held entirely in memory,
# which is useful for offering several generated files as a single download.
buffer = io.BytesIO()
with TarFS(buffer, write=True) as tar_fs:
    # copy_dir() copies a directory from one filesystem into another
    copy_dir(mem_fs, 'data', tar_fs, 'backup')
# The archive is written into the buffer when the TarFS is closed
print(f"\nCreated a tar archive in memory of size: {len(buffer.getvalue())} bytes")
```

Working with Cloud Storage (e.g., S3)

This is where PyFileSystem truly shines. The code is almost identical to working with a local directory.

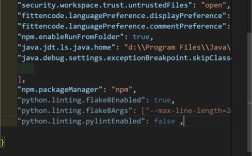

```python
# First, install the S3 extension: pip install fs-s3fs
from fs_s3fs import S3FS

# You'll need your AWS credentials configured (e.g., via environment variables)
# AWS_ACCESS_KEY_ID=...
# AWS_SECRET_ACCESS_KEY=...
# AWS_DEFAULT_REGION=...

# Connect to an S3 bucket
# (the equivalent FS URL for fs.open_fs() is s3://my-awesome-bucket)
s3_fs = S3FS('my-awesome-bucket')

# List files in the bucket
print("Objects in S3 bucket:")
for path in s3_fs.walk.files():
    print(f"- {path}")

# Upload a local file: upload() takes the destination path and a binary file object
with open('local_file.txt', 'rb') as local_file:
    s3_fs.upload('uploaded_file.txt', local_file)

# Download a file from S3 into a local binary file object
with open('downloaded_from_s3.txt', 'wb') as local_file:
    s3_fs.download('uploaded_file.txt', local_file)

# List files again to confirm
print("\nObjects after upload:")
for path in s3_fs.walk.files():
    print(f"- {path}")
```

Advanced Features

- Info Namespaces: When you get file info, you can request specific "namespaces" of metadata so that backends only fetch what you need. For example, fs.getinfo(path, namespaces=['details', 'access']) will fetch size and modification time (details) and permissions and ownership (access). The 'basic' namespace (name and whether it is a directory) is always included.
- Caching: fs.wrap.cache_directory() wraps a filesystem so that directory information is cached in memory, which can cut down repeated round-trips on slow backends such as network drives or cloud storage. It does not cache file contents.
- Copy and Mirror Operations: The library includes tools for bulk operations such as fs.copy.copy_fs() to copy entire filesystems and fs.mirror.mirror() to synchronize one filesystem with another.

When to Use PyFileSystem

- When you need to support multiple storage backends (e.g., a local dev environment vs. a cloud production environment).

- For writing cleaner, more abstract code that isn't tied to os.path or specific libraries like boto3 for S3.
- For unit testing: swap out a real filesystem for a fast MemoryFS in your tests.
- When working with archive formats (ZIP, TAR, etc.) without needing to extract them first.

- For data pipelines that might read from a local disk, process the data, and write it to S3 or a database, all using a consistent interface.

When Maybe Not to Use It

- For very simple, one-off scripts that will only ever run on a local machine and never need to change. Using os and shutil directly might feel a bit lighter.
- If you need features that are highly specific to one filesystem and not covered by the FS API (though this is rare).

Conclusion

PyFileSystem is a powerful, elegant, and Pythonic solution for abstracting away file system operations. It promotes writing more robust, testable, and portable code. Once you start using it, you'll wonder how you ever lived without it.