Of course! The term "Python text content" can mean several things, from handling simple strings to processing complex text files and performing natural language processing. I'll break it down into a comprehensive guide, starting from the basics and moving to more advanced topics.

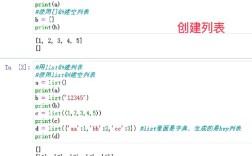

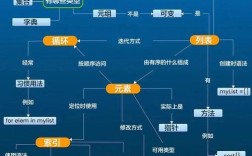

The Fundamental Unit: The String (str)

In Python, all text content is represented as a string, which is a sequence of Unicode characters. Strings are immutable, meaning they cannot be changed after they are created.
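Here is a short sketch of what immutability means in practice. The variable names are only illustrative.

```python
# Strings are immutable: methods return new strings instead of changing the original.
greeting = "hello"
shouted = greeting.upper()
print(greeting)  # hello  (the original is unchanged)
print(shouted)   # HELLO

# Assigning to an index is not allowed and raises TypeError.
try:
    greeting[0] = "J"
except TypeError as exc:
    print(f"TypeError: {exc}")

# To "change" a string, build a new one and rebind the name to it.
greeting = "J" + greeting[1:]
print(greeting)  # Jello
```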

Creating Strings

You can create strings using single quotes ('...'), double quotes ("..."), or triple quotes ('''...''' or """...""").

# Single quotes
single_quoted = 'Hello, world!'

# Double quotes
double_quoted = "Hello, world!"

# Triple quotes for multi-line strings
multi_line = """This is a string
that spans across
multiple lines."""

print(single_quoted)
print(multi_line)
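The quote style only changes how you write the literal. The resulting string is the same either way. This minimal sketch with made-up sample strings shows why you might pick one style over another.

```python
# Single and double quotes build identical str objects.
assert 'Hello, world!' == "Hello, world!"

# Pick the quote style that avoids escaping quotes inside the text.
apostrophe = "It's easy"           # no escape needed
apostrophe_escaped = 'It\'s easy'  # same string, written with a backslash escape
print(apostrophe == apostrophe_escaped)  # True

# Triple-quoted strings keep their line breaks as '\n' characters.
poem = """first line
second line"""
print(poem.splitlines())  # ['first line', 'second line']
```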

Common String Operations

Strings come with a rich set of built-in methods for manipulation.

text = " Python is Awesome! "

# Get the length of the string (the surrounding spaces count too)
length = len(text)
print(f"Length: {length}")  # Output: Length: 20

# Convert to uppercase
upper_text = text.upper()
print(f"Uppercase: '{upper_text}'")  # Output: Uppercase: ' PYTHON IS AWESOME! '

# Convert to lowercase
lower_text = text.lower()
print(f"Lowercase: '{lower_text}'")  # Output: Lowercase: ' python is awesome! '

# Remove leading/trailing whitespace
stripped_text = text.strip()
print(f"Stripped: '{stripped_text}'")  # Output: Stripped: 'Python is Awesome!'

# Replace a substring
replaced_text = text.replace("Awesome", "Great")
print(f"Replaced: '{replaced_text}'")  # Output: Replaced: ' Python is Great! '

# Check if a string contains a substring
contains_python = "Python" in text
print(f"Contains 'Python': {contains_python}")  # Output: Contains 'Python': True

# Split a string into a list of words
# (split() with no arguments splits on runs of whitespace and ignores
# leading/trailing whitespace, so no empty strings appear)
words = text.split()
print(f"Split into words: {words}")  # Output: Split into words: ['Python', 'is', 'Awesome!']
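`str.split()` pairs naturally with `str.join()`, which goes the other way. Together they give a common idiom for collapsing messy whitespace. This is a minimal sketch, and the sample text is made up. The last line shows that splitting on an explicit separator, unlike the no-argument form, does keep empty strings.

```python
messy = "  Python   is\tAwesome!\n"

# split() with no arguments splits on any run of whitespace (spaces, tabs, newlines)
words = messy.split()
print(words)  # ['Python', 'is', 'Awesome!']

# join() is called on the separator string and glues the pieces back together
normalized = " ".join(words)
print(repr(normalized))  # 'Python is Awesome!'

# With an explicit separator, consecutive separators produce empty strings
print("a,,b".split(","))  # ['a', '', 'b']
```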

Working with Text Files

Reading from and writing to files is a common task. The key is to use the with open() statement, which automatically handles closing the file, even if errors occur.
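You can check directly that `with` closes the file even when an error occurs. This sketch writes a throwaway file with `tempfile` so it runs on its own. The `try`/`finally` version at the end is a rough hand-written equivalent, not an exact description of what Python does internally.

```python
import os
import tempfile

# Create a small file in a temporary directory so the example is self-contained.
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "demo.txt")
with open(path, "w", encoding="utf-8") as f:
    f.write("some text\n")

# Raise an error inside the with block on purpose.
try:
    with open(path, "r", encoding="utf-8") as f:
        first = f.readline()
        raise ValueError("something went wrong")
except ValueError:
    pass

# The file was still closed when the block exited.
print(f.closed)  # True

# Roughly what the with statement saves you from writing by hand:
f = open(path, "r", encoding="utf-8")
try:
    first = f.readline()
finally:
    f.close()
print(f.closed)  # True
```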

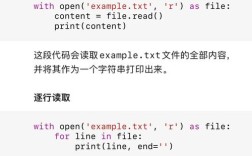

Reading a Text File

# Assume 'my_document.txt' contains:
# Hello, this is line one.
# This is line two.
# And this is the final line.

# Method 1: Read the entire file into a single string
with open('my_document.txt', 'r') as file:
    all_content = file.read()
    print("--- Reading entire file ---")
    print(all_content)

# Method 2: Read the file line by line (memory efficient for large files)
with open('my_document.txt', 'r') as file:
    print("\n--- Reading line by line ---")
    for line in file:
        # The 'line' variable includes the newline character '\n'
        print(line.strip())  # Use .strip() to remove the newline
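Iterating over the file object, as in Method 2, lets you process a file without loading all of it at once. Below is a sketch of a line-and-word counter built on that idea. `count_lines_and_words` is a hypothetical helper name. The snippet writes a temporary copy of the sample file so it can run on its own.

```python
import os
import tempfile

def count_lines_and_words(path):
    """Count lines and whitespace-separated words, reading one line at a time.

    Only the current line is held in memory, so memory use stays small
    even for large files.
    """
    line_count = 0
    word_count = 0
    with open(path, "r", encoding="utf-8") as file:
        for line in file:
            line_count += 1
            word_count += len(line.split())
    return line_count, word_count

# Recreate the sample file from above in a temporary directory.
tmp_dir = tempfile.mkdtemp()
sample = os.path.join(tmp_dir, "my_document.txt")
with open(sample, "w", encoding="utf-8") as file:
    file.write("Hello, this is line one.\n"
               "This is line two.\n"
               "And this is the final line.\n")

print(count_lines_and_words(sample))  # (3, 15)
```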

Writing to a Text File

# 'w' mode creates the file, or overwrites (truncates) it if it already exists.
# 'a' mode creates the file, or appends to the end of it if it already exists.
# 'x' mode creates a new file, but raises FileExistsError if the file already exists.
content_to_write = "This is a new line to be added.\n"

# Appending to a file
with open('my_document.txt', 'a') as file:
    file.write(content_to_write)

print("\n--- File after appending ---")
with open('my_document.txt', 'r') as file:
    print(file.read())
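To see the three write modes side by side, this sketch runs them in order against one temporary file. The file name `modes_demo.txt` is just a placeholder.

```python
import os
import tempfile

tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "modes_demo.txt")

# 'x' creates the file because it does not exist yet.
with open(path, "x", encoding="utf-8") as f:
    f.write("first\n")

# 'a' adds to the end and keeps the existing content.
with open(path, "a", encoding="utf-8") as f:
    f.write("second\n")
with open(path, "r", encoding="utf-8") as f:
    after_append = f.read()
print(repr(after_append))  # 'first\nsecond\n'

# 'w' truncates the file first, so only the new content remains.
with open(path, "w", encoding="utf-8") as f:
    f.write("replaced\n")
with open(path, "r", encoding="utf-8") as f:
    after_write = f.read()
print(repr(after_write))  # 'replaced\n'

# 'x' refuses to open a file that already exists.
x_failed = False
try:
    with open(path, "x", encoding="utf-8") as f:
        f.write("never written\n")
except FileExistsError:
    x_failed = True
print(x_failed)  # True
```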

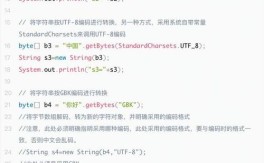

Specifying Encoding

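It's crucial to specify an encoding, especially for non-English text. Without one, open() falls back to a platform-dependent default that is not always UTF-8 (on Windows it often is not). UTF-8 is the most common and recommended choice.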

# Writing text with special characters
special_text = "Héllø Wørld! 你好世界"
with open('special_text.txt', 'w', encoding='utf-8') as file:
    file.write(special_text)

# Reading it back
with open('special_text.txt', 'r', encoding='utf-8') as file:
    read_text = file.read()
print(f"Read back: {read_text}")
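The encoding matters because files store bytes, not characters. This sketch does the same conversion in memory with `str.encode` and `bytes.decode`. It shows that non-ASCII characters take more than one byte in UTF-8, and that decoding with the wrong codec either fails outright (ASCII) or silently produces garbled text (Latin-1, which accepts any byte sequence).

```python
special_text = "Héllø Wørld! 你好世界"

# Encoding turns text (str) into bytes; decoding turns bytes back into text.
encoded = special_text.encode("utf-8")
print(len(special_text), "characters")
print(len(encoded), "bytes")  # more bytes than characters: non-ASCII needs 2-4 bytes each
print(encoded.decode("utf-8") == special_text)  # True

# Decoding with the wrong codec either fails...
try:
    encoded.decode("ascii")
except UnicodeDecodeError as exc:
    print(f"UnicodeDecodeError: {exc.reason}")

# ...or succeeds but produces the wrong text.
garbled = encoded.decode("latin-1")
print(garbled == special_text)  # False
```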

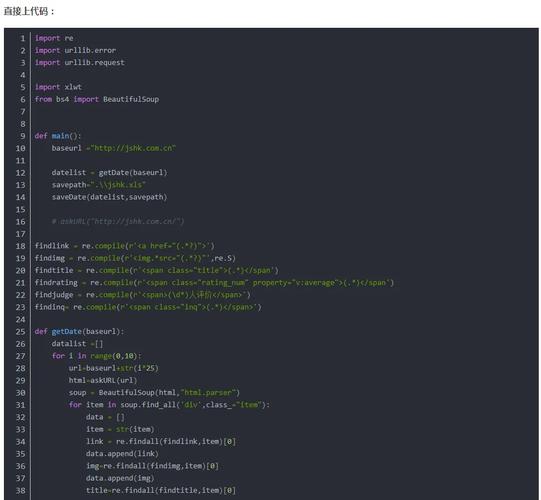

Advanced Text Processing with Regular Expressions (Regex)

Regular expressions are a powerful tool for searching, matching, and manipulating text patterns. Python's re module provides regex support.

import re

text = "The email addresses are user1@example.com and support@my-company.org. Call 123-456-7890."

# Find all email addresses
# [\w.+-]+       matches the part before the @ (word characters, dots, plus signs, hyphens)
# @              matches a literal at sign
# [\w-]+         matches a domain label (word characters and hyphens)
# (?:\.[\w-]+)+  matches one or more ".label" parts; \. is a literal dot
# (\w matches letters, digits, and underscore; requiring a character after
# each dot keeps the sentence-ending period out of the match)
emails = re.findall(r'[\w.+-]+@[\w-]+(?:\.[\w-]+)+', text)
print(f"Found emails: {emails}")  # Output: Found emails: ['user1@example.com', 'support@my-company.org']

# Search for a phone number pattern
phone_pattern = r'\d{3}-\d{3}-\d{4}'  # 3 digits, a dash, 3 digits, a dash, 4 digits
phone_match = re.search(phone_pattern, text)
if phone_match:
    print(f"Found phone number: {phone_match.group()}")  # Output: Found phone number: 123-456-7890

# Substitute text (the dot is escaped so it matches only a literal '.')
new_text = re.sub(r'user1@example\.com', 'new_user@example.com', text)
print(f"After substitution: {new_text}")
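Building on the phone-number example, parentheses turn parts of a pattern into capture groups. You can read those groups from each match, or refer to them in a replacement. This is a sketch. The group names and the masked output format are only illustrations.

```python
import re

text = "The email addresses are user1@example.com and support@my-company.org. Call 123-456-7890."

# Compile once when a pattern is reused; each (...) is a capture group.
phone_re = re.compile(r'(\d{3})-(\d{3})-(\d{4})')

for match in phone_re.finditer(text):
    area, exchange, line_number = match.groups()
    print(f"area={area} exchange={exchange} line={line_number} at {match.span()}")

# In the replacement string, \1 refers to the first group and \3 to the third.
masked = phone_re.sub(r'\1-XXX-\3', text)
print(masked)
```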

Natural Language Processing (NLP) with NLTK

For more advanced tasks like tokenization, stemming, and part-of-speech tagging, you can use libraries like NLTK (Natural Language Toolkit) or spaCy.

First, you need to install NLTK:

pip install nltk

Then, download the necessary data:

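import nltk

nltk.download('punkt')  # Tokenizer models (older NLTK releases)
nltk.download('punkt_tab')  # Tokenizer data required by newer NLTK releases
nltk.download('averaged_perceptron_tagger')  # POS tagger (older NLTK releases)
nltk.download('averaged_perceptron_tagger_eng')  # POS tagger data required by newer NLTK releases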

Basic NLP Tasks with NLTK

import nltk
from nltk.tokenize import word_tokenize, sent_tokenize
from nltk.stem import PorterStemmer

paragraph = "NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources."

# Sentence Tokenization
sentences = sent_tokenize(paragraph)
print(f"Sentences: {sentences}")

# Word Tokenization
words = word_tokenize(paragraph)
print(f"\nWords: {words}")

# Stemming (stripping suffixes to get a base form; stems are not always real words)
stemmer = PorterStemmer()
stemmed_words = [stemmer.stem(word) for word in words]
print(f"\nStemmed Words: {stemmed_words}")

# Part-of-Speech (POS) Tagging
pos_tags = nltk.pos_tag(words)
print(f"\nPOS Tags: {pos_tags}")
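A common next step after tokenizing is counting word frequencies. To keep this sketch free of dependencies, it swaps NLTK's `word_tokenize` for a crude regular-expression tokenizer. That tokenizer is an assumption made for illustration only, and it handles punctuation much less carefully than NLTK does. The counting itself uses `collections.Counter` from the standard library.

```python
import re
from collections import Counter

paragraph = ("NLTK is a leading platform for building Python programs to work with "
             "human language data. It provides easy-to-use interfaces to over 50 "
             "corpora and lexical resources.")

# Crude tokenizer (NOT NLTK): lowercase the text, then grab runs of letters,
# digits, apostrophes, and hyphens. With NLTK you would use word_tokenize(paragraph).
tokens = re.findall(r"[a-z0-9'-]+", paragraph.lower())

# Counter maps each token to how often it occurs.
counts = Counter(tokens)
print(counts.most_common(3))
```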

Modern Text Generation with LLMs (Large Language Models)

A very popular use of "text content" today is generating it using models like GPT-3/4, Llama, etc. For OpenAI's models, the official openai library is a common way to call them from Python.

First, install the library:

pip install openai

Then, use it (you'll need an API key from OpenAI):

import openai

# Set your API key (best practice: set the OPENAI_API_KEY environment
# variable, which the library reads automatically)
# openai.api_key = "YOUR_API_KEY"

try:
    response = openai.chat.completions.create(
        model="gpt-3.5-turbo",  # Or "gpt-4"
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Explain quantum computing in one simple sentence."},
        ],
        max_tokens=50,
    )
    generated_text = response.choices[0].message.content
    print(f"Generated Text: {generated_text}")
except openai.AuthenticationError:
    print("Error: Invalid API key. Please set OPENAI_API_KEY or openai.api_key.")
except Exception as e:
    print(f"An error occurred: {e}")
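The chat completions endpoint does not remember earlier requests. A follow-up question therefore has to resend the conversation so far. Below is a minimal sketch of keeping that history as a plain list of role/content dictionaries. `add_turn` is a hypothetical helper. The assistant reply is a placeholder string rather than real model output, so no API call or key is needed.

```python
def add_turn(messages, role, content):
    """Return a new history list with one more {"role": ..., "content": ...} message."""
    return messages + [{"role": role, "content": content}]

history = [{"role": "system", "content": "You are a helpful assistant."}]
history = add_turn(history, "user", "Explain quantum computing in one simple sentence.")

# Placeholder standing in for response.choices[0].message.content
history = add_turn(history, "assistant", "<model reply goes here>")
history = add_turn(history, "user", "Now explain it to a five-year-old.")

# This whole list would be passed as messages=history in the next
# openai.chat.completions.create(...) call.
for message in history:
    print(f'{message["role"]}: {message["content"]}')
```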

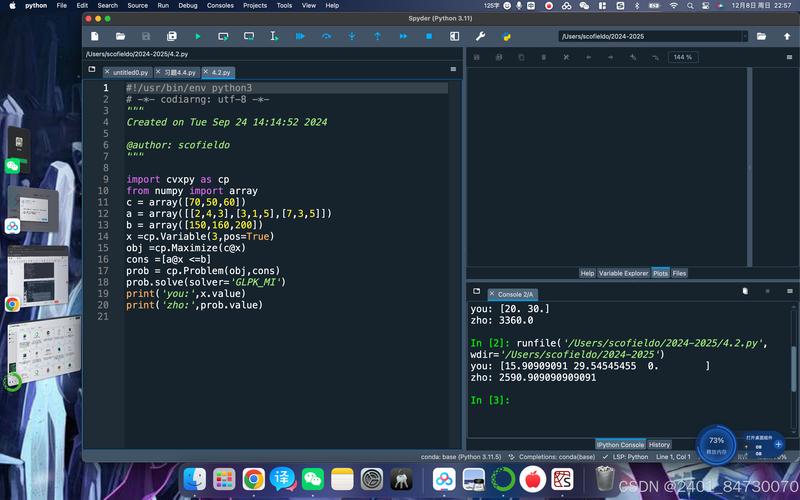

Summary: Which Approach to Use?

| Task | Recommended Tool/Method |
|---|---|
| Simple string manipulation (upper, lower, replace) | Python's built-in str methods. |
| Reading/Writing files | with open() statement and specifying encoding='utf-8'. |
| Searching for complex patterns | Regular expressions (re module). |
| Analyzing language structure (words, sentences, grammar) | NLP libraries like NLTK or spaCy. |
| Generating new text (stories, code, answers) | LLM APIs like OpenAI or local models via libraries. |