Of course. This is a very common and important topic in Python, especially for developers working with Chinese or other non-English text. Let's break it down from the basics to practical solutions.

The Core Problem: What is the gbk error?

The error message you'll most often see is:

    UnicodeDecodeError: 'gbk' codec can't decode byte 0xXX in position XX: illegal multibyte sequence

This error happens when Python tries to read a sequence of bytes and interpret them as text using the gbk encoding, but the bytes don't form a valid gbk character.

The fundamental misunderstanding that causes this error is treating bytes and strings as the same thing.

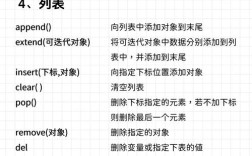

- Bytes (bytes): A raw sequence of 8-bit values (e.g., b'\xe4\xbd\xa0'). This is how data is actually stored on your disk or transmitted over a network. It has no inherent meaning until you decide how to interpret it.

- String (str): An abstract sequence of Unicode code points (e.g., '你'). This is how Python wants to handle text internally. It's a universal representation.

Encoding is the process of converting a string to bytes. Decoding is the process of converting bytes to a string.
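A minimal sketch of both directions, using only the built-in str.encode and bytes.decode methods. The variable names are illustrative.

```python
text = "你"

# Encoding: str -> bytes. The same character yields different bytes per encoding.
utf8_bytes = text.encode("utf-8")
gbk_bytes = text.encode("gbk")
print(utf8_bytes)  # b'\xe4\xbd\xa0'
print(gbk_bytes)   # b'\xc4\xe3'

# Decoding: bytes -> str. The round trip only works with the matching codec.
assert utf8_bytes.decode("utf-8") == text
assert gbk_bytes.decode("gbk") == text
```

The bytes on disk alone do not say which of the two encodings produced them; that knowledge has to come from somewhere else.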

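The gbk error is a UnicodeDecodeError. It means you have bytes (from a file, a network request, etc.) and Python is trying to turn them into a string with the gbk codec, but the bytes were not written as GBK. The gbk codec may have been chosen explicitly (encoding='gbk') or implicitly, because it is the default on Chinese-locale Windows.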
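The error is easy to reproduce without any file. The sketch below decodes UTF-8 bytes with the gbk codec. The exact wording of the message can differ from the one quoted earlier (for example "incomplete" instead of "illegal"), so it is printed rather than assumed.

```python
utf8_bytes = "你".encode("utf-8")  # three bytes: b'\xe4\xbd\xa0'

try:
    utf8_bytes.decode("gbk")  # wrong codec for these bytes
    error = None
except UnicodeDecodeError as exc:
    error = exc

print(error)

# Decoding with the codec that matches the bytes succeeds.
print(utf8_bytes.decode("utf-8"))
```

A mismatch does not always raise. Some byte sequences happen to be valid in both encodings and decode silently into the wrong characters, which is harder to notice than an exception.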

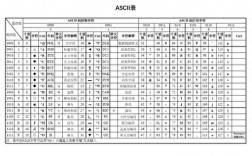

What is GBK?

- GBK (Guobiao Kuozhan) is a character encoding standard used for Simplified Chinese.

- It's an extension of the GB2312 standard, supporting more characters.

- It's primarily used in mainland China.

- It's a variable-width encoding, meaning one character can be represented by either 1 or 2 bytes. This is why the error message mentions "multibyte sequence."
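A quick check of these properties, as a sketch. The character 龍 is used here only as an example of a character that GBK can encode and GB2312 cannot.

```python
# ASCII characters take one byte in GBK, Chinese characters take two.
ascii_len = len("A".encode("gbk"))
hanzi_len = len("你".encode("gbk"))
print(ascii_len, hanzi_len)

# GBK extends GB2312: this character encodes in GBK but not in GB2312.
extra = "龍"
gbk_ok = len(extra.encode("gbk")) == 2
try:
    extra.encode("gb2312")
    gb2312_ok = True
except UnicodeEncodeError:
    gb2312_ok = False
print(gbk_ok, gb2312_ok)
```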

What is Unicode?

- Unicode is a universal character set. Its goal is to assign a unique number (a "code point") to every character in every language in the world.

- Examples:

  - A -> U+0041
  - 你 -> U+4F60
  - € -> U+20AC

- Python 3's str type uses Unicode internally. This is a huge improvement over Python 2, where str was a sequence of bytes and unicode was the text type.
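Code points can be inspected directly with the built-ins ord and chr. A small sketch (the labels dictionary is just for illustration):

```python
# Map each character to its code point in the usual U+XXXX notation.
labels = {ch: f"U+{ord(ch):04X}" for ch in ("A", "你", "€")}
for ch, label in labels.items():
    print(ch, "->", label)

# chr() goes the other way: code point -> character.
assert chr(0x4F60) == "你"
```

Note that none of this involves bytes yet. A code point is just a number assigned to a character.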

The Bridge: UTF-8

Unicode is a giant list of characters, but it's not an encoding format. You need a way to store those code points as bytes. That's where encodings like UTF-8 come in.

- UTF-8 (Unicode Transformation Format - 8-bit) is the most common encoding in the world. It's the default on Linux, macOS, and the web.

- It's a variable-width encoding (1 to 4 bytes per character).

- It has a huge advantage: ASCII is valid UTF-8. This means files containing only English text (which uses ASCII) are also perfectly valid UTF-8 files, making it a great "universal" choice.
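The variable width and the ASCII compatibility can both be seen in a few lines. This is a sketch with arbitrarily chosen sample characters.

```python
# One sample character for each UTF-8 width (1 to 4 bytes).
samples = ["A", "\u00e9", "你", "\U0001F600"]  # A, é, 你, and an emoji
widths = [len(ch.encode("utf-8")) for ch in samples]
print(widths)  # [1, 2, 3, 4]

# ASCII-only text produces identical bytes under ASCII and UTF-8.
assert "hello".encode("ascii") == "hello".encode("utf-8")
```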

The Golden Rule of Python 3:

All text (str) should be in Unicode internally. All text read from or written to files/networks should be encoded/decoded using an explicit encoding (UTF-8 is the best default choice).
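One way to picture this rule is "decode on the way in, work on str, encode on the way out". The transcode function below is a hypothetical helper written for illustration, not a standard library API.

```python
def transcode(raw: bytes, source_encoding: str, target_encoding: str = "utf-8") -> bytes:
    """Decode at the input boundary, process as str, encode at the output boundary."""
    text = raw.decode(source_encoding)    # bytes -> str (input boundary)
    text = text.strip()                   # all processing happens on str
    return text.encode(target_encoding)   # str -> bytes (output boundary)


gbk_input = " 你好世界\n".encode("gbk")
utf8_output = transcode(gbk_input, "gbk")
print(utf8_output.decode("utf-8"))  # 你好世界
```

Both encodings are named explicitly at the boundaries, so nothing depends on the machine the code runs on.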

Practical Scenarios and Solutions

Let's look at the most common situations where you'll encounter the gbk error.

Scenario 1: Reading a File with Chinese Text

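Problem: You have a file named data.txt encoded in GBK, but you open it without specifying an encoding. Python falls back to the locale's preferred encoding (typically utf-8 on Linux/macOS, cp1252 on Western-locale Windows, and cp936, an alias of gbk, on Chinese-locale Windows). When that default does not match the file, you get a UnicodeDecodeError or silently garbled text. The 'gbk' variant of the error is the mirror image of this scenario: a UTF-8 file opened on a machine whose default is gbk.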

Incorrect Code (Causes the error):

    # data.txt contains the GBK-encoded bytes for "你好世界"
    try:
        with open('data.txt', 'r') as f:
            content = f.read()  # Python tries to decode with system's default encoding (e.g., utf-8)
        print(content)
    except UnicodeDecodeError as e:
        print(f"Error: {e}")

Correct Solution: Explicitly tell Python the file's encoding.

    # Correctly open the file, specifying its GBK encoding
    try:
        with open('data.txt', 'r', encoding='gbk') as f:
            content = f.read()
        print(content)        # This is now a proper Unicode string
        print(type(content))  # <class 'str'>
    except FileNotFoundError:
        print("File not found.")
    except UnicodeDecodeError as e:
        print(f"Error: The file is not actually in GBK format. {e}")
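To try this without an existing data.txt, the sketch below creates a GBK file in a temporary directory and reads it back. It also prints the locale's preferred encoding, which is typically what open() falls back to when no encoding is given. The file name and temporary location are stand-ins.

```python
import locale
import os
import tempfile

# The platform-dependent default that an explicit encoding= avoids.
print(locale.getpreferredencoding(False))

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "data.txt")

    # Write raw GBK bytes, as another program on a GBK system might.
    with open(path, "wb") as f:
        f.write("你好世界".encode("gbk"))

    # Read it back, naming the encoding explicitly.
    with open(path, "r", encoding="gbk") as f:
        content = f.read()

print(content)
```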

Scenario 2: Writing a File with Chinese Text

Problem: You have a Unicode string in Python and want to save it to a file. You must explicitly encode it.

Incorrect Code (Can cause issues or create a file that's hard to read elsewhere):

    my_string = "你好世界"

    # Without encoding=, Python uses the system's default encoding.
    # On Chinese-locale Windows this writes GBK, on Linux/macOS it usually writes UTF-8,
    # and on a cp1252 Windows system it raises UnicodeEncodeError.
    # The result is not portable, so it's bad practice to rely on the default when writing.
    with open('output.txt', 'w') as f:
        f.write(my_string)

Correct Solution: Explicitly encode the string when writing.

    my_string = "你好世界"

    # Best practice: Use UTF-8 for maximum compatibility
    with open('output.txt', 'w', encoding='utf-8') as f:
        f.write(my_string)

    # If you specifically need a GBK file
    with open('output_gbk.txt', 'w', encoding='gbk') as f:
        f.write(my_string)
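To confirm what actually landed on disk, the files can be reopened in binary mode. This sketch writes into a temporary directory instead of the current one; the file names mirror the ones above.

```python
import os
import tempfile

text = "你好世界"

with tempfile.TemporaryDirectory() as tmp_dir:
    utf8_path = os.path.join(tmp_dir, "output.txt")
    gbk_path = os.path.join(tmp_dir, "output_gbk.txt")

    with open(utf8_path, "w", encoding="utf-8") as f:
        f.write(text)
    with open(gbk_path, "w", encoding="gbk") as f:
        f.write(text)

    # Binary mode returns the raw bytes with no decoding applied.
    with open(utf8_path, "rb") as f:
        utf8_raw = f.read()
    with open(gbk_path, "rb") as f:
        gbk_raw = f.read()

print(len(utf8_raw), len(gbk_raw))  # 12 8
```

The same four characters take 12 bytes in UTF-8 and 8 in GBK, which is why a reader has to know which one was used.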

Scenario 3: Dealing with a Web Response (API, Scraper)

Problem: You receive data from a website or API. The response headers might tell you the encoding, but sometimes they are missing or wrong.

Incorrect Code:

    import requests

    response = requests.get('http://example.com/chinese-page')

    # response.text decodes using the charset from the headers, falling back to a guess.
    # If the page is GBK and the detected encoding is wrong, you get garbled text
    # (undecodable bytes are replaced instead of raising an error).
    print(response.text)

Correct Solution: Inspect the response and handle encoding explicitly.

    import requests

    response = requests.get('http://example.com/chinese-page')

    # 1. Let requests try to determine encoding from headers (usually reliable);
    #    response.encoding will be set automatically.
    response.encoding = 'gbk'  # Force it if you know it's GBK but the header is wrong.
    print(response.text)

    # 2. If you have raw bytes and need to decode them manually
    # raw_bytes = response.content  # This is the raw bytes
    # try:
    #     my_string = raw_bytes.decode('gbk')
    #     print(my_string)
    # except UnicodeDecodeError:
    #     print("Failed to decode with GBK. Trying another encoding or handling error.")
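When the declared encoding cannot be trusted, one possible approach is to try a short list of candidates on the raw bytes. The decode_with_fallback helper below is hypothetical, and the candidate order is an assumption: UTF-8 goes first because it rejects most non-UTF-8 data, whereas GBK accepts many byte sequences that were never GBK.

```python
def decode_with_fallback(raw: bytes, encodings=("utf-8", "gbk")) -> str:
    """Return raw decoded with the first candidate encoding that succeeds."""
    for encoding in encodings:
        try:
            return raw.decode(encoding)
        except UnicodeDecodeError:
            continue
    raise ValueError(f"none of {encodings!r} could decode the data")


# Stand-ins for response.content from a GBK page and a UTF-8 page.
from_gbk_page = decode_with_fallback("你好".encode("gbk"))
from_utf8_page = decode_with_fallback("你好".encode("utf-8"))
print(from_gbk_page, from_utf8_page)
```

This is a heuristic, not detection. A successful decode only shows that the bytes are valid in that encoding, not that the result is the intended text.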

Summary and Best Practices

| Do | Don't |
|---|---|
| Be Explicit: Always specify encoding='...' when opening files. | Never rely on defaults: Don't assume a file is utf-8 or that the system's default encoding is what you need. |
| Use UTF-8: Make utf-8 your default encoding for files, internal communication, and APIs. It's the most portable and modern choice. | Don't ignore errors: errors='ignore' or errors='replace' can hide problems. Only use them if you understand the trade-offs. |
| Handle Errors Gracefully: Wrap file operations and decoding in try...except UnicodeDecodeError blocks. | Don't mix str and bytes: Don't try to concatenate a string and bytes object. Decode bytes to string first. |
| Check the Source: If you get a gbk error, the data is probably not GBK at all (often it is UTF-8 being decoded with a GBK default). Check its origin and actual encoding. | Don't assume: A file with a .txt extension isn't guaranteed to be utf-8. Its content and encoding are what matter. |
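Two of the "Don't" entries are easy to demonstrate. The sketch below shows errors='replace' masking a wrong codec, and the TypeError raised when str and bytes are mixed.

```python
raw = "你".encode("utf-8")

# errors="replace" hides the mismatch: no exception, but the text is wrong
# and contains U+FFFD replacement characters.
lossy = raw.decode("gbk", errors="replace")
print(repr(lossy))

# Mixing str and bytes raises TypeError.
try:
    "value: " + raw
    mixed_ok = True
except TypeError as exc:
    mixed_ok = False
    print(f"TypeError: {exc}")

# Decode first, then concatenate.
combined = "value: " + raw.decode("utf-8")
print(combined)
```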

Final Example: A Robust File Reader

    def read_file_safely(filepath, encoding='utf-8'):
        """Reads a file with a specified encoding, with error handling."""
        try:
            with open(filepath, 'r', encoding=encoding) as f:
                return f.read()
        except FileNotFoundError:
            print(f"Error: The file '{filepath}' was not found.")
            return None
        except UnicodeDecodeError:
            print(f"Error: Failed to decode '{filepath}' with {encoding} encoding.")
            print("The file might be in a different encoding, like GBK.")
            # You could try a fallback here, e.g., return read_file_safely(filepath, 'gbk')
            return None

    # Example usage:
    # content = read_file_safely('data.txt', encoding='gbk')
    # if content is not None:  # an empty file returns '', which is still a valid result
    #     print(content)