Of course! This is a very common and powerful workflow in data analysis and business automation. Let's break down how to connect Python, Excel, and MySQL into a seamless data pipeline.

We'll cover three main scenarios:

- Excel → MySQL: Pushing data from an Excel file into a MySQL database.

- MySQL → Excel: Pulling data from a MySQL database into an Excel file.

- A Complete Example: A script that does both, demonstrating a full ETL (Extract, Transform, Load) process.

Prerequisites

Before you start, you need to have the following installed and configured:

-

Python: Make sure you have Python installed on your system.

-

MySQL Server: A running MySQL server (local or remote) with a database and user created.

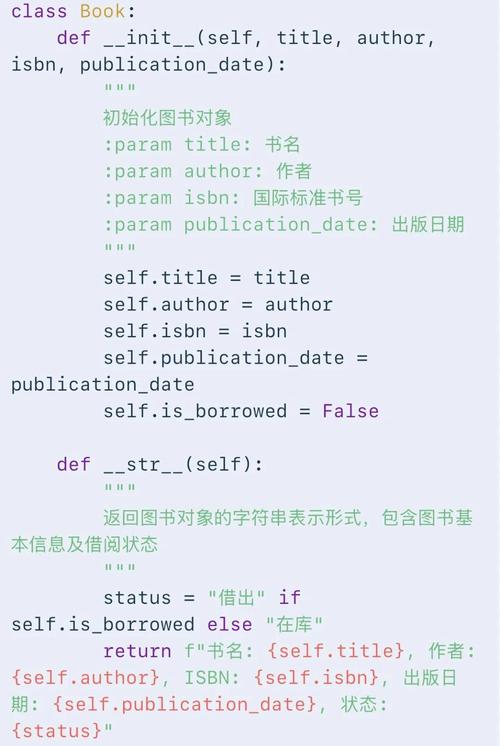

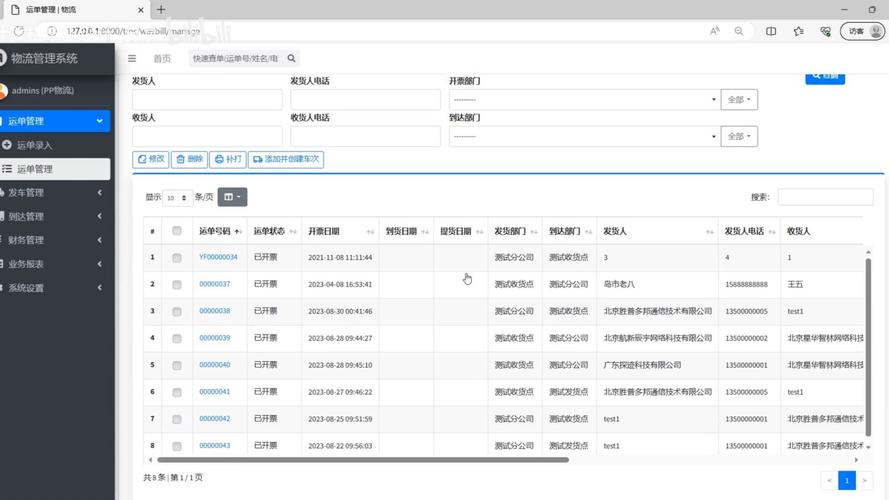

(图片来源网络,侵删)

(图片来源网络,侵删) -

Libraries: You'll need to install a few Python libraries. Open your terminal or command prompt and run:

pip install pandas openpyxl sqlalchemy pymysql

pandas: The core library for data manipulation. It can read/write Excel files and has built-in SQL capabilities.openpyxl: A library thatpandasuses to read and write.xlsxfiles.sqlalchemy: A powerful SQL toolkit that provides a unified interface to multiple SQL databases. It's the recommended way to connect to databases.pymysql: A pure-Python MySQL client library thatsqlalchemyuses to connect to MySQL.

Scenario 1: Excel → MySQL (Loading Data)

This is useful when you have data in a spreadsheet that you need to store in a database for long-term storage, querying, or integration with other systems.

Step 1: Prepare Your Excel File

Let's say you have an Excel file named sales_data.xlsx with a sheet named January_Sales.

sales_data.xlsx (Sheet: January_Sales)

| OrderID | ProductName | SaleDate | Amount |

|---|---|---|---|

| 101 | Laptop | 2025-01-05 | 1200 |

| 102 | Mouse | 2025-01-06 | 25 |

| 103 | Keyboard | 2025-01-07 | 75 |

Step 2: Create the Target Table in MySQL

Connect to your MySQL server (using a tool like MySQL Workbench, DBeaver, or the command line) and run the following SQL to create a table. It's crucial that the data types match your Excel data.

CREATE DATABASE IF NOT EXISTS company_db;

USE company_db;

CREATE TABLE IF NOT EXISTS sales (

OrderID INT PRIMARY KEY,

ProductName VARCHAR(255),

SaleDate DATE,

Amount DECIMAL(10, 2)

);

Step 3: The Python Script to Load Data

This script will read the Excel file and insert its data into the sales table.

import pandas as pd

from sqlalchemy import create_engine

# --- Configuration ---

# Replace with your MySQL database connection details

DB_USER = 'your_username'

DB_PASSWORD = 'your_password'

DB_HOST = 'localhost' # or your server's IP address

DB_PORT = '3306'

DB_NAME = 'company_db'

# Excel file details

EXCEL_FILE = 'sales_data.xlsx'

SHEET_NAME = 'January_Sales'

TABLE_NAME = 'sales'

# --- Script ---

# 1. Create a connection string for SQLAlchemy

# The format is: 'mysql+pymysql://user:password@host:port/database'

connection_string = f'mysql+pymysql://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DB_NAME}'

# 2. Create a SQLAlchemy engine

engine = create_engine(connection_string)

# 3. Read the Excel file into a pandas DataFrame

try:

df = pd.read_excel(EXCEL_FILE, sheet_name=SHEET_NAME)

print("Excel file read successfully!")

print(df)

except FileNotFoundError:

print(f"Error: The file {EXCEL_FILE} was not found.")

exit()

# 4. Write the DataFrame to the MySQL database

# if_exists='replace' will drop the table if it exists and create a new one.

# if_exists='append' will add the data to the existing table.

# if_exists='fail' will do nothing if the table already exists.

df.to_sql(

name=TABLE_NAME,

con=engine,

if_exists='append', # Use 'replace' to overwrite the table

index=False # Do not write the DataFrame index as a column

)

print(f"Data successfully loaded into the '{TABLE_NAME}' table in MySQL.")

To run this:

- Save the code as a Python file (e.g.,

load_to_mysql.py). - Make sure

sales_data.xlsxis in the same directory. - Update the configuration variables at the top of the script.

- Run from your terminal:

python load_to_mysql.py

Scenario 2: MySQL → Excel (Exporting Data)

This is useful for generating reports, sharing data with non-technical users, or creating backups of specific database tables.

Step 1: The Python Script to Export Data

We'll use the same sales table we just created. This script will query all data from it and save it to a new Excel file.

import pandas as pd

from sqlalchemy import create_engine

# --- Configuration ---

# Replace with your MySQL database connection details

DB_USER = 'your_username'

DB_PASSWORD = 'your_password'

DB_HOST = 'localhost'

DB_PORT = '3306'

DB_NAME = 'company_db'

# Excel file details

OUTPUT_EXCEL_FILE = 'sales_report.xlsx'

SHEET_NAME = 'Sales_Data'

TABLE_NAME = 'sales' # Or you can write a custom SQL query

# --- Script ---

# 1. Create a connection string and engine

connection_string = f'mysql+pymysql://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DB_NAME}'

engine = create_engine(connection_string)

# 2. Define your SQL query

# You can select from a table or write a more complex query

sql_query = f"SELECT * FROM {TABLE_NAME}"

# 3. Read the data from MySQL into a pandas DataFrame

try:

df = pd.read_sql(sql_query, con=engine)

print("Data successfully fetched from MySQL!")

print(df)

except Exception as e:

print(f"An error occurred: {e}")

exit()

# 4. Write the DataFrame to an Excel file

# The 'with' statement ensures the file is properly closed

with pd.ExcelWriter(OUTPUT_EXCEL_FILE, engine='openpyxl') as writer:

df.to_excel(writer, sheet_name=SHEET_NAME, index=False)

print(f"Data successfully exported to '{OUTPUT_EXCEL_FILE}'.")

To run this:

- Save the code as a Python file (e.g.,

export_to_excel.py). - Update the configuration variables.

- Run from your terminal:

python export_to_excel.py - You will find a new file named

sales_report.xlsxin your directory.

Scenario 3: A Complete ETL Example

Let's combine the two scenarios. We'll pull data from MySQL, perform a simple transformation (e.g., calculate a total revenue column), and then save the result to a new Excel sheet.

import pandas as pd

from sqlalchemy import create_engine

# --- Configuration ---

DB_USER = 'your_username'

DB_PASSWORD = 'your_password'

DB_HOST = 'localhost'

DB_PORT = '3306'

DB_NAME = 'company_db'

OUTPUT_EXCEL_FILE = 'sales_analysis_report.xlsx'

# --- Script ---

# 1. Connect to MySQL and extract data

connection_string = f'mysql+pymysql://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DB_NAME}'

engine = create_engine(connection_string)

print("Step 1: Extracting data from MySQL...")

sql_query = "SELECT * FROM sales"

df = pd.read_sql(sql_query, con=engine)

print("Data extracted successfully.")

print("Original Data:")

print(df)

# 2. Transform the data

print("\nStep 2: Transforming data...")

# Convert 'SaleDate' to datetime format if it's not already

df['SaleDate'] = pd.to_datetime(df['SaleDate'])

# Add a new column for the month

df['Month'] = df['SaleDate'].dt.strftime('%Y-%m')

# Calculate total revenue per product

product_revenue = df.groupby('ProductName')['Amount'].sum().reset_index()

product_revenue.rename(columns={'Amount': 'TotalRevenue'}, inplace=True)

print("Data transformed successfully.")

print("Transformed Data (Product Revenue):")

print(product_revenue)

# 3. Load the transformed data into an Excel file with multiple sheets

print("\nStep 3: Loading data into Excel...")

with pd.ExcelWriter(OUTPUT_EXCEL_FILE, engine='openpyxl') as writer:

# Write the original data to the 'RawData' sheet

df.to_excel(writer, sheet_name='RawData', index=False)

# Write the transformed data to the 'ProductRevenue' sheet

product_revenue.to_excel(writer, sheet_name='ProductRevenue', index=False)

print(f"\nETL process complete. Report saved to '{OUTPUT_EXCEL_FILE}'.")

This final script demonstrates a classic data pipeline: it Extracts raw data, Transforms it into a more useful format, and Loads it into a final output file.

Best Practices & Tips

- Security: Never hardcode credentials directly in your script. Use environment variables or a configuration file for sensitive information like passwords.

- Error Handling: Always wrap your database operations in

try...exceptblocks to handle potential connection errors or query failures gracefully. - Chunking: For very large Excel files or database tables, use

chunksizeinpd.read_excel()orpd.read_sql()to process the data in smaller, manageable pieces. - Data Types: Pay close attention to data types. Excel dates and numbers can sometimes be tricky. Explicitly convert them in pandas (e.g.,

pd.to_datetime(),pd.to_numeric()) to ensure they are stored correctly in MySQL. - Indexes: Use

index=Falseinto_sql()to avoid writing the pandas row index as a column in your database table. Useindex=Trueinto_excel()if you want to keep the index.