Of course! Here is a comprehensive guide to using GraphX in Python with PySpark.

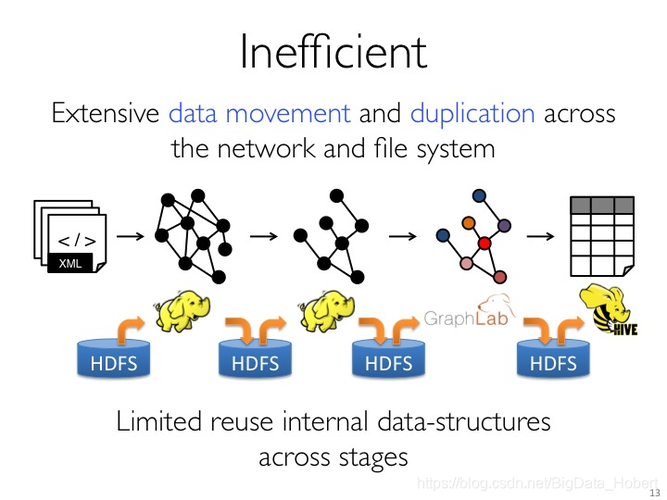

Important Note: GraphX is a Scala API

First, a crucial point: GraphX is not a native Python API. It is a core component of Apache Spark written in Scala. The primary way to use it in Python is through PySpark, which acts as a Python wrapper that translates your Python code into Scala operations that run on the Spark cluster.

This means you won't find a pyspark.graphx module. Instead, you'll use the standard pyspark library and construct the GraphX Graph object using PySpark's core DataFrames.

Core Concepts of GraphX

GraphX represents a graph as a pair of collections:

- Vertices (

RDD[VertexId, V]): A collection of vertices (or nodes). Each vertex has a uniqueVertexId(aLongtype) and an associated attribute of typeV(e.g., a string, integer, or a complex object). In PySpark, this is represented as an RDD of(vertex_id, attribute)tuples. - Edges (

RDD[Edge[T]]): A collection of edges (or connections) that link vertices. Each edge has asrcId(source vertex ID), adstId(destination vertex ID), and an attribute of typeT(e.g., a string like "follows" or a weight like 5). In PySpark, this is an RDD ofEdgeobjects.

The combination of these two RDDs forms a Graph[V, T] object.

Setting Up Your PySpark Environment

Before you start, make sure you have PySpark installed. If not, you can install it via pip:

pip install pyspark

You'll also need a SparkContext to work with. For local development, you can create one easily.

Creating a Graph in PySpark

Let's build a simple graph representing a social network. We'll create vertices for users and edges representing who follows whom.

Step 1: Initialize SparkSession

from pyspark import SparkContext

from pyspark.sql import SparkSession

# It's good practice to use a SparkSession

spark = SparkSession.builder \

.appName("GraphXPythonExample") \

.getOrCreate()

sc = spark.sparkContext

Step 2: Define Vertices

Vertices are an RDD of (id, attribute) pairs. The attribute can be anything. Let's use a dictionary to store user details.

# Define vertices: (vertex_id, attributes)

vertices_data = [

(1, {"name": "Alice", "age": 34}),

(2, {"name": "Bob", "age": 45}),

(3, {"name": "Charlie", "age": 30}),

(4, {"name": "David", "age": 25}),

(5, {"name": "Eve", "age": 28})

]

# Create an RDD for vertices

vertices_rdd = sc.parallelize(vertices_data)

Step 3: Define Edges

Edges are an RDD of Edge objects. You need to import Edge from pyspark.graphx.

from pyspark.graphx import Edge

# Define edges: Edge(srcId, dstId, [attribute])

edges_data = [

Edge(1, 2, "follows"), # Alice follows Bob

Edge(1, 3, "follows"), # Alice follows Charlie

Edge(2, 3, "follows"), # Bob follows Charlie

Edge(3, 4, "follows"), # Charlie follows David

Edge(4, 1, "follows"), # David follows Alice

Edge(5, 1, "follows"), # Eve follows Alice

Edge(5, 4, "follows") # Eve follows David

]

# Create an RDD for edges

edges_rdd = sc.parallelize(edges_data)

Step 4: Create the Graph Object

Now, combine the vertices and edges RDDs to form a Graph object.

from pyspark.graphx import Graph

# Create the graph

social_graph = Graph(vertices_rdd, edges_rdd)

print("Graph created successfully!")

print(f"Number of vertices: {social_graph.vertices.count()}")

print(f"Number of edges: {social_graph.edges.count()}")

Basic Graph Operations

GraphX provides powerful operations for transforming and querying graphs.

a) vertices and edges

These properties give you direct access to the vertex and edge RDDs.

# Get vertex RDD

vertex_rdd = social_graph.vertices

print("\nVertex RDD (first 3):")

print(vertex_rdd.take(3))

# Get edge RDD

edge_rdd = social_graph.edges

print("\nEdge RDD (first 3):")

print(edge_rdd.take(3))

b) triplets

This is one of the most useful operations. It joins the vertex attributes with the edges, creating an RDD of EdgeTriplet objects. Each triplet contains the source vertex, destination vertex, and the edge itself.

# Get edge triplets

triplets_rdd = social_graph.triplets

print("\nEdge Triplets (first 2):")

for triplet in triplets_rdd.take(2):

print(f"{triplet.srcAttr['name']} ({triplet.srcId}) --[{triplet.attr}]--> {triplet.dstAttr['name']} ({triplet.dstId})")

c) subgraph

You can filter the graph based on vertex and edge predicates. This is useful for cleaning or focusing on a specific part of the graph.

Let's create a subgraph containing only users older than 30 and the edges between them.

# Define the vertex predicate: keep vertices where age > 30

def vertex_filter(vertex):

return vertex[1]["age"] > 30

# Define the edge predicate: keep edges whose source and destination are in the filtered vertex set

def edge_filter(edge):

return True # We'll rely on the vertex filter to clean up the graph

subgraph = social_graph.subgraph(vertex_filter, edge_filter)

print("\nSubgraph (users older than 30):")

print(f"Number of vertices: {subgraph.vertices.count()}")

print(f"Number of edges: {subgraph.edges.count()}")

# The output will show vertices for Alice, Bob, Charlie and the edges between them.

d) collectNeighborIds and collectNeighbors

These operations aggregate information about a vertex's neighbors.

collectNeighborIds: Collects the IDs of neighbors.collectNeighbors: Collects the full(VertexId, V)tuples of neighbors.

# Collect neighbor IDs for each vertex

neighbor_ids = social_graph.collectNeighborIds(EdgeDirection.Out)

print("\nNeighbor IDs (Outgoing):")

print(neighbor_ids.collect())

# Collect full neighbor information

neighbors = social_graph.collectNeighbors(EdgeDirection.Out)

print("\nFull Neighbor Information:")

for vertex_id, neighbor_list in neighbors.collect():

if neighbor_list: # Only print vertices that have outgoing edges

print(f"Vertex {vertex_id}'s neighbors: {neighbor_list}")

Graph Algorithms

GraphX comes with several built-in graph algorithms. Let's look at PageRank and Connected Components.

a) PageRank

PageRank measures the importance of each vertex in the graph. Let's find the most "influential" users in our social network.

# Run PageRank

# The resetProb is the probability of jumping to a random vertex (usually 0.15)

pagerank_results = social_graph.pageRank(resetProb=0.15)

print("\nPageRank Results:")

# The vertices RDD of the result contains (vertex_id, rank)

for vertex_id, rank in pagerank_results.vertices.collect():

user_name = social_graph.vertices.filter(lambda v: v[0] == vertex_id).first()[1]["name"]

print(f"User: {user_name}, Rank: {rank:.4f}")

b) Connected Components

This algorithm finds sets of vertices that are connected to each other. Each component is assigned a unique component ID.

# Run Connected Components

# The maxIterations parameter controls how many iterations to run

connected_components = social_graph.connectedComponents(maxIterations=5)

print("\nConnected Components:")

# The vertices RDD of the result contains (vertex_id, component_id)

for vertex_id, component_id in connected_components.vertices.collect():

user_name = social_graph.vertices.filter(lambda v: v[0] == vertex_id).first()[1]["name"]

print(f"User: {user_name}, Component ID: {component_id}")

In our small example, all users are likely in one connected component.

Converting to/from DataFrames

While GraphX operations use RDDs, it's often convenient to work with DataFrames for integration with other Spark SQL features.

From Graph to DataFrames

# Convert vertices to DataFrame

vertices_df = social_graph.vertices.toDF(["id", "attributes"])

print("\nVertices DataFrame:")

vertices_df.show()

# Convert edges to DataFrame

# Note: Edge objects have src, dst, and attr fields

edges_df = social_graph.edges.toDF(["src", "dst", "relationship"])

print("\nEdges DataFrame:")

edges_df.show()

From DataFrames to Graph

You can also construct a graph from DataFrames.

# Assuming you have vertices_df and edges_df from above

# You need to convert them back to RDDs

vertices_rdd_from_df = vertices_df.rdd.map(lambda row: (row.id, row.attributes))

edges_rdd_from_df = edges_df.rdd.map(lambda row: Edge(row.src, row.dst, row.relationship))

# Create the new graph

graph_from_df = Graph(vertices_rdd_from_df, edges_rdd_from_df)

print("\nGraph created from DataFrames:")

print(f"Vertices: {graph_from_df.vertices.count()}, Edges: {graph_from_df.edges.count()}")

Cleaning Up

Always stop your SparkContext when you're done to free up resources.

sc.stop()

Summary and Best Practices

- Remember the Scala Origin: GraphX is a Scala API. PySpark is a wrapper. This means some advanced features might be less accessible in Python.

- Use

tripletsfor Rich Analysis: For any analysis that involves both vertex attributes and edge properties (e.g., "find all users Alice follows who are older than 30"),tripletsis your best friend. - Filter with

subgraph: Before running expensive algorithms, usesubgraphto reduce the size of your graph to only the relevant vertices and edges. - Leverage DataFrames: For ETL, data cleaning, and integration with Spark SQL, convert your graph components (vertices and edges) to and from DataFrames.

- PySpark is the Way: While you might find older examples using

pyspark.graphlib, the modern and correct approach is to construct theGraphobject directly from RDDs as shown in this guide.