Of course! The stats collector in Scrapy is a powerful and essential tool for monitoring your spider's performance, debugging, and collecting metrics. It acts as a dictionary where you can store and retrieve key-value pairs of statistics throughout the spider's lifecycle.

Here's a comprehensive guide to using Scrapy's stats, from the basics to advanced techniques.

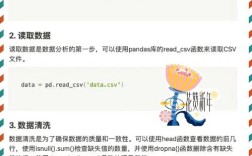

What are Scrapy Stats?

By default, the stats collector is an instance of scrapy.statscollectors.MemoryStatsCollector. It wraps a plain dictionary, is created together with the crawler, and stays available throughout the spider's execution through methods such as get_value(), set_value(), and inc_value().

Key Characteristics:

- Global per Spider: Each running spider has its own stats object, attached to its crawler. Stats from one spider don't interfere with another.
- Key-Value Store: It stores data as {key: value} pairs. Values can be numbers, strings, booleans, lists, or other simple data types.
- Safe Across Callbacks: Scrapy runs spider callbacks and signal handlers in a single thread, so you can update stats from different places in your code without race conditions. The collector does no locking of its own, so guard access yourself if you start extra threads.
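The key-value behaviour described above is easiest to see through the collector's methods. The sketch below is a simplified stand-in written for illustration only: the class name TinyStats is hypothetical and this is not Scrapy's implementation. It mirrors the method names that Scrapy's collector exposes (get_value, set_value, inc_value, max_value, get_stats).

```python
class TinyStats:
    """Simplified stand-in for a Scrapy-style stats collector (illustration only)."""

    def __init__(self):
        self._stats = {}

    def get_value(self, key, default=None):
        # Missing keys return the default instead of raising KeyError.
        return self._stats.get(key, default)

    def set_value(self, key, value):
        self._stats[key] = value

    def inc_value(self, key, count=1, start=0):
        # A missing key is treated as `start`, so counters need no initialisation.
        self._stats[key] = self._stats.get(key, start) + count

    def max_value(self, key, value):
        # Keep the largest value seen so far for this key.
        self._stats[key] = max(self._stats.get(key, value), value)

    def get_stats(self):
        return self._stats


stats = TinyStats()
stats.inc_value("pages_scraped")
stats.inc_value("pages_scraped")
stats.set_value("finish_reason", "finished")
stats.max_value("quotes/max_per_page", 10)
stats.max_value("quotes/max_per_page", 7)
print(stats.get_stats())
```

In a real spider you would call the same methods on the collector that Scrapy provides rather than on a class of your own.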

How to Access Stats

You can access the stats collector from anywhere in your spider code.

Method 1: In Spider Callbacks (Most Common)

Inside a spider's method (like parse), you can access it via self.crawler.stats. The crawler attribute is set when Scrapy creates the spider through from_crawler().

    import scrapy


    class MySpider(scrapy.Spider):
        name = "my_spider"
        start_urls = ["http://quotes.toscrape.com"]

        def parse(self, response):
            # --- Accessing and Setting Stats ---
            # The collector lives on the crawler, not on the spider itself.
            stats = self.crawler.stats

            # 1. Incrementing a stat (a missing key starts at 0, so no reset is needed)
            stats.inc_value('pages_scraped')

            # 2. Getting a stat
            pages_done = stats.get_value('pages_scraped', 0)
            self.logger.info(f"Scraped page {pages_done}: {response.url}")

            # 3. Setting a stat to an explicit value
            stats.set_value('last_page_url', response.url)

            # ... parsing logic ...
            quotes = response.css('div.quote')
            stats.inc_value('total_quotes', len(quotes))

            for quote in quotes:
                yield {
                    'text': quote.css('span.text::text').get(),
                    'author': quote.css('small.author::text').get(),
                }

Method 2: Using Signals (More Advanced)

You can also access stats from Scrapy signals, which is useful for code that's outside the spider class itself (like extensions or pipelines).

    from scrapy import signals


    class MyExtension:
        def __init__(self, stats):
            self.stats = stats

        @classmethod
        def from_crawler(cls, crawler):
            # Get the stats collector from the crawler and keep a reference to it
            extension = cls(crawler.stats)
            crawler.signals.connect(extension.spider_opened, signal=signals.spider_opened)
            crawler.signals.connect(extension.spider_closed, signal=signals.spider_closed)
            return extension

        def spider_opened(self, spider):
            # The spider object has no stats attribute; use the collector stored above
            print(f"Spider {spider.name} opened. Initial stats: {self.stats.get_stats()}")

        def spider_closed(self, spider, reason):
            # This is a great place to log final stats
            final_stats = self.stats.get_stats()
            print(f"Spider {spider.name} closed. Reason: {reason}")
            print("Final Stats:")
            for key, value in final_stats.items():
                print(f"  {key}: {value}")

    # To use this, you would add 'my_project.extensions.MyExtension' to your EXTENSIONS setting.

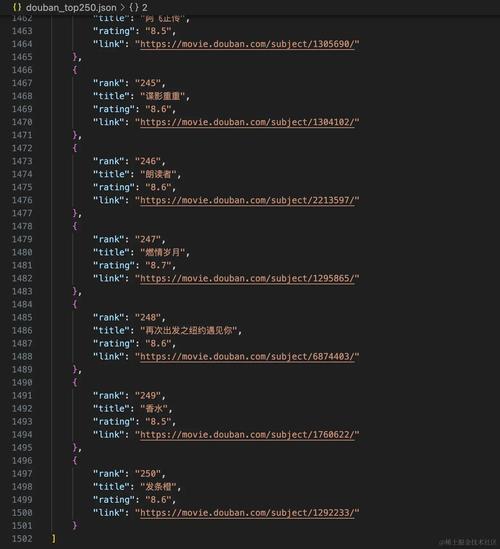

Common Built-in Stats

Scrapy automatically tracks many useful stats for you. You can see them all when your spider finishes.

Here are some of the most important built-in stats:

| Stat Key | Description |
|---|---|
| item_scraped_count | Total number of items scraped. |
| item_dropped_count | Total number of items dropped by pipelines. |
| item_dropped_reasons_count | Prefix for per-reason drop counters, one key per exception class name (e.g., item_dropped_reasons_count/DropItem: 5). |
| downloader/request_count | Total number of requests made by the downloader. |
| downloader/response_count | Total number of responses received. |
| downloader/response_status_count/XXX | Number of responses with HTTP status code XXX, one key per status code (e.g., downloader/response_status_count/200: 120). |
| finish_reason | The reason the spider stopped (e.g., 'finished', 'shutdown'). |
| start_time | The datetime at which the spider started. |
| elapsed_time_seconds | The total time the spider ran, in seconds. |
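In the stats dump, per-status counters appear as separate flat keys, one per status code, so collecting them into a single mapping takes a small helper. The following is a minimal sketch that works on a plain dict shaped like the one returned by get_stats(); the function name status_counts and the sample values are made up for illustration.

```python
STATUS_PREFIX = "downloader/response_status_count/"


def status_counts(stats):
    """Collect per-status counters from a flat stats dict into {status_code: count}."""
    return {
        int(key[len(STATUS_PREFIX):]): value
        for key, value in stats.items()
        if key.startswith(STATUS_PREFIX)
    }


# Hypothetical sample shaped like the dict returned by get_stats().
sample = {
    "downloader/response_count": 122,
    "downloader/response_status_count/200": 120,
    "downloader/response_status_count/404": 2,
    "item_scraped_count": 50,
}

counts = status_counts(sample)
print(counts)
```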

Example Output: When you run a spider, Scrapy prints a summary of these stats at the end:

    2025-10-27 10:30:00 [scrapy.core.engine] INFO: Spider closed (finished)
    {'downloader/request_bytes': 1234,
     'downloader/request_count': 10,
     'downloader/request_method_count/GET': 10,
     'downloader/response_bytes': 5678,
     'downloader/response_count': 10,
     'downloader/response_status_count/200': 10,
     'elapsed_time_seconds': 5.23,
     'finish_reason': 'finished',
     'item_scraped_count': 50,
     'log_count/INFO': 15,
     'start_time': datetime.datetime(2025, 10, 27, 10, 29, 54, 812345)}

Best Practices for Using Stats

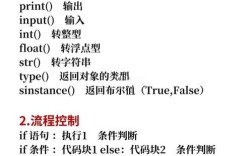

Use Descriptive Keys

Be clear and consistent with your stat keys. Use prefixes to group related stats.

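    # Good
    self.crawler.stats.set_value('quotes/total', 0)
    self.crawler.stats.set_value('quotes/authors_seen', set())
    self.crawler.stats.set_value('errors/timeout_count', 0)

    # Bad
    self.crawler.stats.set_value('q', 0)
    self.crawler.stats.set_value('a', set())
    self.crawler.stats.set_value('e', 0)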
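One payoff of consistent prefixes is that related stats can be grouped mechanically when you report them. Here is a minimal sketch, assuming a plain dict shaped like the output of get_stats(); the helper name group_by_prefix and the sample keys are illustrative, not part of Scrapy.

```python
from collections import defaultdict


def group_by_prefix(stats):
    """Group flat 'prefix/name' keys into {prefix: {name: value}}.

    Keys without a slash are collected under the empty-string prefix.
    """
    grouped = defaultdict(dict)
    for key, value in stats.items():
        prefix, sep, rest = key.partition("/")
        if sep:
            grouped[prefix][rest] = value
        else:
            grouped[""][key] = value
    return dict(grouped)


# Hypothetical sample stats using the prefixed naming style.
sample = {
    "quotes/total": 50,
    "quotes/pages": 5,
    "errors/timeout_count": 1,
    "finish_reason": "finished",
}

grouped = group_by_prefix(sample)
for prefix, values in grouped.items():
    print(prefix or "(no prefix)", values)
```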

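Use get_value() and set_value() for Safety

The stats collector is not a plain dictionary, and indexing it with stats['key'] does not work. Read and write through its methods instead.

- stats.get_value('key', default_value) returns the default when the key is missing instead of raising an error.
- stats.set_value('key', value) is the canonical way to set a value.
- stats.inc_value('key') increments a counter, starting from 0 if the key does not exist yet.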

Log Important Stats at the End

The best place to log your custom stats is in a spider_closed signal handler or directly in the spider's closed() method. This gives you a final summary.

    class MySpider(scrapy.Spider):
        # ... (other spider code) ...

        def closed(self, reason):
            # Scrapy calls closed() when the spider finishes
            self.logger.info(
                f"Spider closed (reason: {reason}). "
                f"Final stats: {self.crawler.stats.get_stats()}"
            )

Use Stats for Debugging and Alerting

Stats are a good fit for custom control logic. For example, you could stop the spider once the number of critical errors passes a limit.

    from scrapy.exceptions import CloseSpider

    def parse(self, response):
        # ... some parsing logic ...
        if some_critical_error_occurred:  # placeholder for your own check
            stats = self.crawler.stats
            stats.inc_value('critical_errors')
            errors = stats.get_value('critical_errors', 0)
            if errors > 5:
                self.logger.error(f"Too many critical errors ({errors}). Stopping spider.")
                # Raising CloseSpider from a callback asks Scrapy to shut the spider down
                raise CloseSpider('critical_error_limit_exceeded')
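The same idea can be expressed as a rate instead of an absolute count. The sketch below is one possible way to do it, working on a plain dict shaped like get_stats() output. The function names, the 20% threshold, and the minimum sample size are all assumptions chosen for illustration; in a spider you would pass self.crawler.stats.get_stats() and act on the result.

```python
STATUS_PREFIX = "downloader/response_status_count/"


def failure_rate(stats):
    """Fraction of responses with a 4xx or 5xx status, from a flat stats dict."""
    total = failed = 0
    for key, count in stats.items():
        if key.startswith(STATUS_PREFIX):
            total += count
            if int(key[len(STATUS_PREFIX):]) >= 400:
                failed += count
    return failed / total if total else 0.0


def should_stop(stats, max_rate=0.2, min_responses=20):
    """True once enough responses were seen and too many of them failed."""
    total = stats.get("downloader/response_count", 0)
    return total >= min_responses and failure_rate(stats) > max_rate


# Hypothetical sample: 30 of 100 responses failed.
sample = {
    "downloader/response_count": 100,
    "downloader/response_status_count/200": 70,
    "downloader/response_status_count/404": 20,
    "downloader/response_status_count/500": 10,
}

print(failure_rate(sample), should_stop(sample))
```

The minimum sample size keeps a single early failure from stopping the crawl before the rate means anything.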

Custom Stats Collectors

The default MemoryStatsCollector keeps stats only in memory. They are dumped to the log when the spider closes, but if the process crashes before that point, they are lost.

Scrapy ships one alternative, DummyStatsCollector, which discards everything it is given (useful when you want to switch stats collection off for a small performance gain). For anything more durable, you can create your own collector.

Example: Writing Stats to a File

You can create a custom stats collector that writes stats to a JSON file on spider_closed.

    import json

    from scrapy.statscollectors import StatsCollector


    class JsonFileStatsCollector(StatsCollector):
        def __init__(self, crawler, path='scrapy_stats.json'):
            super().__init__(crawler)
            self.path = path

        def close_spider(self, spider, reason):
            # When the spider closes, write the stats to a file.
            # default=str converts values json cannot encode (such as the
            # start_time datetime) to strings.
            # Check this method's signature against your Scrapy version.
            with open(self.path, 'w', encoding='utf-8') as f:
                json.dump(self.get_stats(), f, indent=4, default=str)
            super().close_spider(spider, reason)

    # To use this, you need to tell Scrapy to use it in your settings.py:
    # STATS_CLASS = 'my_project.statscollectors.JsonFileStatsCollector'
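One detail worth checking when dumping stats to JSON: built-in values such as start_time are datetime objects, which the json module cannot encode on its own. The snippet below shows the failure and one common workaround (passing default=str), using a hand-written sample dict rather than real crawl output.

```python
import datetime
import json

# Hypothetical sample shaped like the stats dict from the example output.
sample = {
    "item_scraped_count": 50,
    "finish_reason": "finished",
    "start_time": datetime.datetime(2025, 10, 27, 10, 29, 54, 812345),
}

try:
    json.dumps(sample)
    plain_ok = True
except TypeError:
    # datetime objects are not JSON serializable by default.
    plain_ok = False

# default=str is called for any value json cannot encode itself.
encoded = json.dumps(sample, default=str, indent=4)
decoded = json.loads(encoded)
print(plain_ok, decoded["start_time"])
```

The trade-off is that the datetime comes back as a string when the file is read, so convert it explicitly if you need to do arithmetic on it later.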

How to View Stats During a Run

By default, you only see the full stats dump at the end of the crawl. During the run, the built-in LogStats extension logs a one-line progress summary (pages crawled and items scraped, with per-minute rates) every LOGSTATS_INTERVAL seconds, 60 by default. Scrapy has no --stats command-line argument, so to print your own stats while the spider is running you call a small helper from your own code.

Here's the idea:

- Define a function that takes the stats object and prints the values you care about.
- Call this function from a spider callback, or from a periodic task in an extension.

Example:

Let's say you have a file my_stats_printer.py:

    # my_stats_printer.py
    def print_stats(stats):
        print("--- Current Stats ---")
        print(f"Pages scraped: {stats.get_value('pages_scraped', 0)}")
        print(f"Quotes found: {stats.get_value('total_quotes', 0)}")
        print("---------------------")

Then, call it from your spider's callback, passing the crawler's collector:

    from my_stats_printer import print_stats

    # inside MySpider.parse():
    print_stats(self.crawler.stats)

This prints your custom stats each time a page is parsed, giving you a live view of your spider's progress. To make the built-in progress line appear more often, lower the interval when you run the spider:

    scrapy crawl my_spider -s LOGSTATS_INTERVAL=10

For a final summary, log from the spider_closed signal as shown earlier.
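If you log progress from inside the spider, printing on every response quickly floods the log. A small throttle helps. The sketch below is one way to write it using only the standard library; the name make_throttled_reporter and the 30-second interval are assumptions for illustration, and the injectable clock exists only so the behaviour can be demonstrated without waiting.

```python
import time


def make_throttled_reporter(report, interval=30.0, clock=time.monotonic):
    """Return a function that calls report(stats) at most once per `interval` seconds."""
    last_call = None

    def maybe_report(stats):
        nonlocal last_call
        now = clock()
        if last_call is None or now - last_call >= interval:
            last_call = now
            report(stats)
            return True
        return False

    return maybe_report


# Demonstration with a fake clock instead of real waiting.
fake_time = [0.0]
seen = []
reporter = make_throttled_reporter(seen.append, interval=30.0, clock=lambda: fake_time[0])

results = []
for t in (0.0, 10.0, 31.0, 45.0, 61.0):
    fake_time[0] = t
    results.append(reporter({"pages_scraped": int(t)}))

print(results)
```

In a spider you would create the reporter once, for example with a printing function as the report argument, and call it from each callback with the stats collector.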