Of course! In Python, "value counts" most commonly refers to the value_counts() method, which is a powerful and essential tool for data analysis, especially when working with tabular data.

Let's break it down.

The Short Answer: value_counts()

The value_counts() method is a method of Pandas Series objects. Its primary job is to count the occurrences of each unique value in a Series and return the results in a new Series, sorted by count in descending order.

Basic Usage with Pandas

First, you need to have the Pandas library installed. If you don't, you can install it via pip:

pip install pandas

Now, let's look at how it works.

Example 1: Simple List of Values

Imagine you have a list of survey responses and you want to know how many people chose each option.

import pandas as pd

# Create a pandas Series from a list of categories

data = ['Red', 'Blue', 'Red', 'Green', 'Blue', 'Blue', 'Red']

colors = pd.Series(data)

# Use value_counts() to get the frequency of each color

color_counts = colors.value_counts()

print("Original Data:")

print(colors)

print("\nValue Counts:")

print(color_counts)

Output:

Original Data:

0 Red

1 Blue

2 Red

3 Green

4 Blue

5 Blue

6 Red

dtype: object

Value Counts:

Blue 3

Red 3

Green 1

dtype: int64

As you can see, value_counts() automatically counted the occurrences and sorted them from most frequent (Blue and Red, both with 3) to least frequent (Green with 1). The relative order of tied values such as Blue and Red can differ between pandas versions.

Example 2: Real-world Scenario (DataFrame Column)

value_counts() is most often used on a single column of a DataFrame. Let's create a sample DataFrame.

import pandas as pd

import numpy as np

# Create a sample DataFrame

data = {

    'City': ['New York', 'London', 'Paris', 'New York', 'London', 'Tokyo', 'Paris', 'New York'],

    'Age': [25, 30, 25, 40, 30, 35, 25, np.nan],  # Including a NaN value

    'Product': ['A', 'B', 'A', 'A', 'C', 'B', 'A', 'C']

}

df = pd.DataFrame(data)

# Get the value counts for the 'City' column

city_counts = df['City'].value_counts()

print("Original DataFrame:")

print(df)

print("\nValue Counts for 'City':")

print(city_counts)

Output:

Original DataFrame:

City Age Product

0 New York 25.0 A

1 London 30.0 B

2 Paris 25.0 A

3 New York 40.0 A

4 London 30.0 C

5 Tokyo 35.0 B

6 Paris 25.0 A

7 New York NaN C

Value Counts for 'City':

New York 3

London 2

Paris 2

Tokyo 1

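Name: City, dtype: int64

Notice how New York appears 3 times, London and Paris appear twice, and Tokyo appears once. (In pandas 2.0 and later, the result is named count, or proportion when normalize=True, and the index carries the name City instead.)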

Key Parameters of value_counts()

The real power of value_counts() comes from its parameters.

normalize: bool (Default: False)

If set to True, it returns the relative frequencies (proportions or percentages) instead of counts.

# Get the proportion of each city

city_proportions = df['City'].value_counts(normalize=True)

print("City Proportions:")

print(city_proportions)

Output:

City Proportions:

New York 0.375000

London 0.250000

Paris 0.250000

Tokyo 0.125000

Name: City, dtype: float64

(Note: 3/8 = 0.375, 2/8 = 0.25, etc.)
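If percentages are easier to read than proportions, the normalized result can be scaled afterwards. The following is a minimal sketch, assuming the same city values as above rebuilt as a standalone Series (the names cities and city_percentages are illustrative only):

```python
import pandas as pd

# Same city values as in the DataFrame example, rebuilt here so the snippet runs on its own
cities = pd.Series(['New York', 'London', 'Paris', 'New York',
                    'London', 'Tokyo', 'Paris', 'New York'])

# normalize=True yields proportions; multiply by 100 and round to get percentages
city_percentages = cities.value_counts(normalize=True).mul(100).round(1)

print(city_percentages)
```

Because the result is an ordinary Series, any further arithmetic or formatting can be chained in the same way.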

sort: bool (Default: True)

By default, the result is sorted by count. Set sort=False to skip that sorting; recent pandas versions then return the counts in the order the values first appear.

# Get counts in the order they first appear in the data

city_counts_unsorted = df['City'].value_counts(sort=False)

print("Unsorted City Counts:")

print(city_counts_unsorted)

Output:

Unsorted City Counts:

New York 3

London 2

Paris 2

Tokyo 1

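Name: City, dtype: int64

(This looks the same as the sorted result only because the cities happen to first appear in descending order of frequency. If the data were ['Paris', 'London', 'London'], the sorted output would list London: 2 before Paris: 1, while the unsorted output in recent pandas versions would keep Paris: 1 first.)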
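A small sketch can make the difference visible. It assumes a three-element Series chosen purely for illustration, and the order-of-appearance behaviour with sort=False is what recent pandas versions produce rather than a guarantee for every release:

```python
import pandas as pd

# The least frequent value appears first, so sorting changes the order
cities = pd.Series(['Paris', 'London', 'London'])

sorted_counts = cities.value_counts()              # most frequent value first
unsorted_counts = cities.value_counts(sort=False)  # no sorting by count

print(list(sorted_counts.index))
print(list(unsorted_counts.index))
```

Both results hold the same counts; only the ordering of the index differs.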

ascending: bool (Default: False)

Controls the sort order. False is descending (default), True is ascending.

# Get counts sorted from least to most common

city_counts_ascending = df['City'].value_counts(ascending=True)

print("Ascending City Counts:")

print(city_counts_ascending)

Output:

Ascending City Counts:

Tokyo 1

London 2

Paris 2

New York 3

Name: City, dtype: int64

dropna: bool (Default: True)

By default, NaN (Not a Number) values are excluded. You can set dropna=False to include them in the count.

# Get counts including NaN values

age_counts_with_nan = df['Age'].value_counts(dropna=False)

print("Age Counts (with NaN):")

print(age_counts_with_nan)

Output:

Age Counts (with NaN):

25.0 3

30.0 2

40.0 1

35.0 1

NaN 1

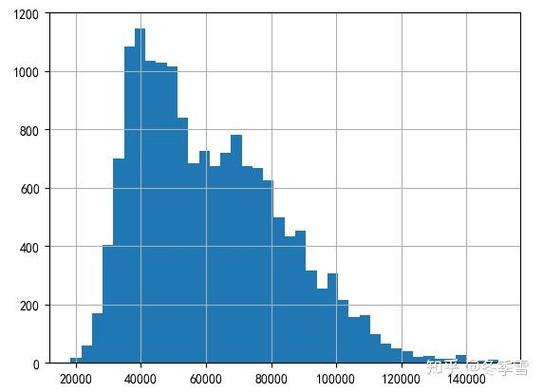

Name: Age, dtype: int64

bins: int or sequence of scalars

This is a very useful feature for converting numerical data into categorical bins and then counting the occurrences in each bin. This is called binning or discretization.
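To make the idea concrete before using the bins parameter, here is a minimal sketch of binning done explicitly with pd.cut and then counted. The bin edges and label strings are illustrative assumptions, and the snippet is meant to show the concept rather than exactly what value_counts(bins=...) does internally:

```python
import pandas as pd

ages = pd.Series([18, 22, 25, 27, 30, 35, 40, 45, 50, 55])

# Assign each age to a right-closed interval: (0, 25], (25, 35], (35, 100]
# The label strings are hypothetical names chosen for this example
age_groups = pd.cut(ages, bins=[0, 25, 35, 100],
                    labels=['0-25', '26-35', '36-100'])

# Count how many ages fall in each group, keeping the groups in bin order
group_counts = age_groups.value_counts().sort_index()

print(group_counts)
```

Going through pd.cut is handy when you want readable labels; the bins parameter shown next is the shorter route when interval labels are good enough.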

# Create a Series with numerical data

ages = pd.Series([18, 22, 25, 27, 30, 35, 40, 45, 50, 55])

# Count how many people fall into age groups (bins)

# bins=3 splits the range of the data (18 to 55) into 3 equal-width intervals

age_group_counts = ages.value_counts(bins=3)

print("Age Group Counts:")

print(age_group_counts)

Output:

Age Group Counts:

(17.962, 30.333] 5

(42.667, 55.0] 3

(30.333, 42.667] 2

dtype: int64

Pandas automatically calculated three equal-width bin edges from the minimum and maximum of the data. You can also define your own bins:

my_bins = [0, 25, 35, 100]

age_group_counts_custom = ages.value_counts(bins=my_bins)

print("\nCustom Age Group Counts:")

print(age_group_counts_custom)

Output:

Custom Age Group Counts:

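(35.0, 100.0] 4

(-0.001, 25.0] 3

(25.0, 35.0] 3

dtype: int64

(The first bin is shown as starting at -0.001 because pandas nudges the lowest edge down slightly so that a value equal to 0 would still be included.)

Alternatives (Without Pandas)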

While value_counts() is the standard, it's good to know how to do it with Python's built-in tools.

Using collections.Counter

The Counter class from the collections module is designed for exactly this purpose.

from collections import Counter

data = ['Red', 'Blue', 'Red', 'Green', 'Blue', 'Blue', 'Red']

# Counter returns a dictionary-like object

counts = Counter(data)

print(counts)

print(f"Most common: {counts.most_common()}") # .most_common() sorts for you

Output:

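Counter({'Red': 3, 'Blue': 3, 'Green': 1})

Most common: [('Red', 3), ('Blue', 3), ('Green', 1)]

(Red is listed before Blue because tied elements keep the order in which they were first encountered.)

Using Pure Python (Dictionary)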

You can also achieve this with a simple loop and a dictionary.

data = ['Red', 'Blue', 'Red', 'Green', 'Blue', 'Blue', 'Red']

counts = {}

for item in data:

    # Start from 0 the first time an item is seen, then add 1
    counts[item] = counts.get(item, 0) + 1

print(counts)

Output:

{'Red': 3, 'Blue': 3, 'Green': 1}

Summary: When to Use What?

| Method | Best For | Pros | Cons |
|---|---|---|---|
| pandas.Series.value_counts() | Data analysis on tabular data (DataFrames/Series). | Extremely fast, integrates with the rest of the Pandas ecosystem, powerful parameters (normalize, bins, dropna), returns a Series which is easy to work with. | Requires the Pandas library. |
| collections.Counter | Counting items in any iterable (lists, tuples, etc.). | Part of the standard library, very fast, simple and intuitive API (.most_common()). | Less flexible than Pandas for data analysis tasks (e.g., no direct bins parameter). |
| Pure Python Dictionary | Simple counting or when you can't use external libraries. | No dependencies, great for learning the logic. | More verbose, slower for large datasets, requires manual implementation of sorting. |
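The "manual implementation of sorting" listed for the dictionary approach is short in practice. Here is a minimal sketch using only built-ins; the names sorted_counts and proportions are illustrative, and the proportions step is just a rough stand-in for what normalize=True gives you in Pandas:

```python
data = ['Red', 'Blue', 'Red', 'Green', 'Blue', 'Blue', 'Red']

# Count with a plain dictionary
counts = {}
for item in data:
    counts[item] = counts.get(item, 0) + 1

# Sort (value, count) pairs by count, highest first; ties keep first-seen order
sorted_counts = sorted(counts.items(), key=lambda pair: pair[1], reverse=True)

# Turn counts into proportions, similar in spirit to normalize=True
total = sum(counts.values())
proportions = {value: count / total for value, count in sorted_counts}

print(sorted_counts)
print(proportions)
```

This works well for small inputs, but each extra feature (binning, missing-value handling) has to be written by hand.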

For any serious data manipulation in Python, pandas.Series.value_counts() is the tool you should usually reach for first.