Of course! In Python, the term LabeledPoint is most famously associated with Apache Spark's MLlib library. It's a fundamental data structure used for supervised machine learning tasks.

Let's break down what it is, why it's used, and how to work with it.

What is a LabeledPoint?

A LabeledPoint is a simple but crucial data structure in Spark MLlib. It represents a single training example for a supervised learning model. As the name suggests, it has two components:

- Label: The target or outcome variable you want to predict. This is the "answer" for that specific data point.

- Features: A vector of numerical values that represent the input variables or attributes used to make the prediction.

In short, it's a container for (label, features).

Why is it Useful?

Spark is designed for distributed computing on massive datasets. Its RDD-based MLlib algorithms need every training example in a single, predictable shape that can be shipped to the JVM and across the cluster. LabeledPoint is the specialized data structure they expect:

- Is Serializable: It can be efficiently converted into a format that can be sent over the network to different nodes in the Spark cluster.

- Is Integrated: The pyspark.mllib training methods (for example, LogisticRegressionWithLBFGS.train) accept an RDD of LabeledPoint directly, so no extra conversion step is needed.

- Provides a Standard Format: It enforces a consistent (label, features) structure, making it easier to feed data into various Spark ML algorithms.

How to Use LabeledPoint

Here's a practical guide with code examples.

Importing the Class

First, you need to import it from pyspark.mllib.regression.

```python
from pyspark.mllib.regression import LabeledPoint
```

Creating a LabeledPoint

You create an instance of LabeledPoint by providing the label and the features. The features are stored as an MLlib vector; a plain Python list or NumPy array is converted to a dense vector automatically. There are two main types of vectors in Spark:

- Dense Vector: Stores all values, including zeros. Good for smaller, denser feature sets.

- Sparse Vector: Stores only non-zero values and their indices. Extremely memory-efficient for high-dimensional data with many zeros (common in text data).

Example: Creating Dense and Sparse Vectors

# You also need to import the vector types

from pyspark.mllib.linalg import Vectors, DenseVector, SparseVector

# --- Example 1: Dense Vector ---

# Imagine predicting house prices.

# Label: Price ($100,000s)

# Features: [Square Footage, Number of Bedrooms, Age of House (years)]

dense_point = LabeledPoint(

label=3.5, # Price is $350,000

features=Vectors.dense([2100, 4, 15]) # 2100 sqft, 4 bedrooms, 15 years old

)

# --- Example 2: Sparse Vector ---

# Imagine text classification (e.g., spam detection) with a 100,000-word vocabulary.

# Most words won't appear in a single email.

# Label: 1 for spam, 0 for not spam

# Features: Word counts for a 100,000-dimensional vector.

# Only indices 10, 500, and 9999 have non-zero counts.

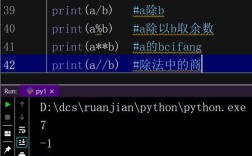

sparse_point = LabeledPoint(

label=1, # This is spam

features=Vectors.sparse(100000, {10: 5, 500: 2, 9999: 1}) # 100k dim, word '10' appears 5 times, etc.

)

print("--- Dense LabeledPoint ---")

print(dense_point)

print("\n--- Sparse LabeledPoint ---")

print(sparse_point)

Accessing the Label and Features

Once you have a LabeledPoint object, you can easily access its components.

```python
# Accessing components from the dense_point example
print(f"Label: {dense_point.label}")
# toArray().tolist() yields plain Python floats for readable output
print(f"Features (as a list): {dense_point.features.toArray().tolist()}")
print(f"Feature at index 1 (Bedrooms): {dense_point.features[1]}")
```

Using LabeledPoint in a Spark RDD

The real power of LabeledPoint comes when you use it with Spark's Resilient Distributed Datasets (RDDs). You can create an RDD of LabeledPoint objects to train a model.

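```python
from pyspark import SparkConf, SparkContext
from pyspark.mllib.linalg import Vectors
from pyspark.mllib.regression import LabeledPoint

# Reuse an existing SparkContext (e.g. `sc` in the pyspark shell) or start a local one
sc = SparkContext.getOrCreate(SparkConf().setMaster("local").setAppName("LabeledPointExample"))

# Create a list of raw data
# Each item is a tuple: (label, [feature1, feature2, ...])
raw_data = [
    (1.0, [10.0, 15.0, 20.0]),
    (0.0, [5.0, 8.0, 12.0]),
    (1.0, [22.0, 18.0, 25.0]),
    (0.0, [8.0, 11.0, 15.0]),
]

# Convert the raw data into an RDD of LabeledPoint objects.
# map() applies the lambda to every element of the RDD in parallel.
labeled_points_rdd = sc.parallelize(raw_data).map(lambda x: LabeledPoint(x[0], Vectors.dense(x[1])))

# Now you have an RDD of LabeledPoint, ready for a machine learning algorithm
print("\n--- RDD of LabeledPoint ---")
# collect() brings this small RDD back to the driver so the output appears locally;
# foreach(print) would print on the executors instead
for lp in labeled_points_rdd.collect():
    print(lp)
```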

Using LabeledPoint with a DataFrame (Modern Spark)

While RDDs are the foundation, modern Spark primarily uses the DataFrame API; since Spark 2.0 the RDD-based pyspark.mllib API has been in maintenance mode. The algorithms in pyspark.ml (note the .ml instead of .mllib) expect a DataFrame with specific columns, by default named label and features.

You can create this DataFrame from an RDD of LabeledPoints.

```python
from pyspark.mllib.util import MLUtils
from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder.appName("LabeledPointToDF").getOrCreate()

# Use the RDD of LabeledPoint from the previous step
# The toDF() method will automatically create columns named "label" and "features"
df = labeled_points_rdd.toDF()

# The inferred "features" column holds old pyspark.mllib vectors, but pyspark.ml
# algorithms require pyspark.ml vectors, so convert the vector column(s) first
df = MLUtils.convertVectorColumnsToML(df)

print("\n--- DataFrame from LabeledPoint RDD ---")
df.show()

# You can now use this DataFrame with pyspark.ml algorithms
# For example, to train a Logistic Regression model:
# from pyspark.ml.classification import LogisticRegression
# lr = LogisticRegression()
# model = lr.fit(df)
```

Summary: LabeledPoint vs. DataFrame

| Aspect | LabeledPoint (in pyspark.mllib) | DataFrame (in pyspark.ml) |
|---|---|---|
| API | Low-level, based on RDDs | High-level, built on top of RDDs and more user-friendly |
| Data Structure | A single Python object for one data point | A distributed collection of rows organized into named columns |
| Primary Use | Input format for the RDD-based pyspark.mllib algorithms (in maintenance mode since Spark 2.0); common in older code. | The standard, recommended way to use MLlib. Easier to use with Pipelines. |
| Typical Workflow | LabeledPoint -> RDD[LabeledPoint] -> RDD-based algorithm | Raw Data -> DataFrame -> VectorAssembler -> DataFrame with features column -> Pipeline -> DataFrame-based algorithm |

Conclusion:

For most modern Spark ML tasks, you will work with DataFrames. However, understanding LabeledPoint is very valuable because:

- Its (label, features) pairing is the same one pyspark.ml expects, just expressed as DataFrame columns.

- You might encounter it in older code examples or when diving deeper into the RDD-based API.

- The transition from an RDD of LabeledPoint to a DataFrame is a common and useful pattern.