Of course! Here is a comprehensive guide to using the Java HBase client, covering the basics, core components, and best practices.

This guide will walk you through:

- Prerequisites: What you need before you start.

- Maven Dependencies: How to add the necessary libraries to your project.

- Connecting to HBase: Establishing a connection to your HBase cluster.

- Core Operations: The fundamental CRUD (Create, Read, Update, Delete) operations.

- Scanning vs. Getting: The two primary ways to read data.

- Best Practices: Critical advice for building robust and efficient applications.

- Complete Example: A runnable code snippet demonstrating the concepts.

Prerequisites

- Java Development Kit (JDK): HBase requires Java 8 or newer.

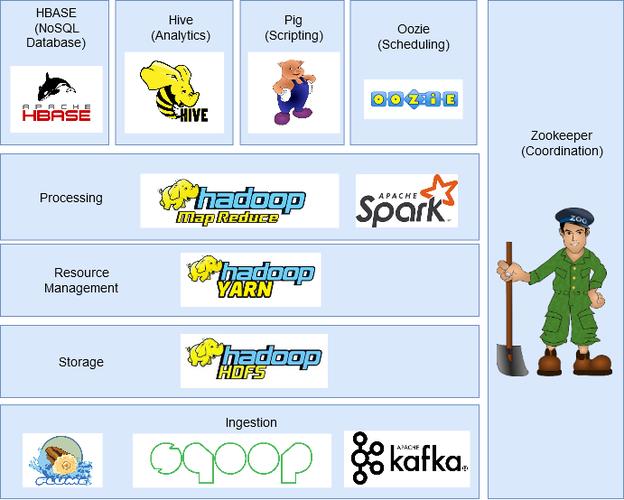

- Running HBase Cluster: You need an accessible HBase cluster. This can be a standalone setup for development or a full distributed cluster.

- HBase Configuration: You need to know the location of your HBase cluster's configuration, typically defined in the

hbase-site.xmlfile. The client needs this to know the location of theZooKeeperquorum.

Maven Dependencies

The easiest way to manage HBase client libraries is with Maven or Gradle. You'll need the hbase-client and hbase-common dependencies. The version should match the version of your HBase server.

<dependencies>

<!-- HBase Client Library -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<!-- IMPORTANT: Use the same version as your HBase server -->

<version>2.4.11</version>

</dependency>

<!-- HBase Common Utilities -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-common</artifactId>

<version>2.4.11</version>

</dependency>

<!-- Optional: For connecting to HBase via its REST or Thrift API,

but the Java API is the standard and most performant. -->

</dependencies>

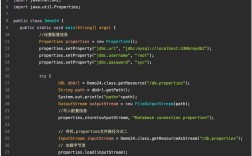

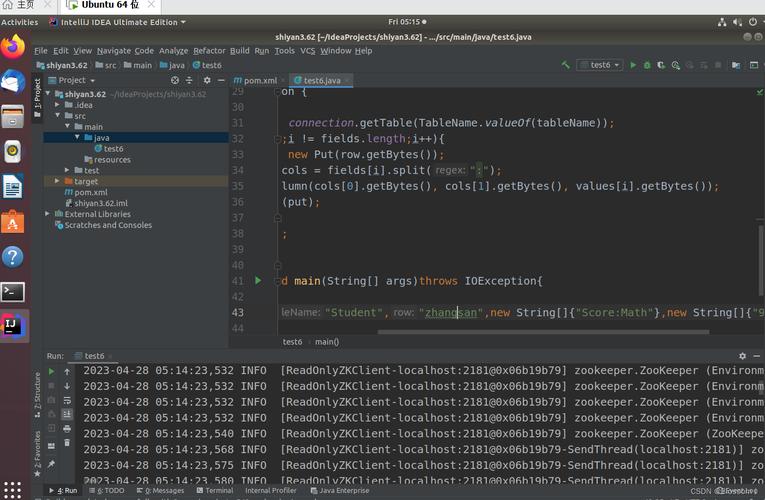

Connecting to HBase

The connection to HBase is a heavyweight object and should be managed carefully. The standard practice is to create a single Connection instance for your application and reuse it. The Connection object is thread-safe.

The connection is established using a ConnectionFactory.

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

public class HBaseConnectionManager {

private static final String ZOOKEEPER_QUORUM = "localhost"; // Your ZK host

private static final int ZOOKEEPER_CLIENT_PORT = 2181; // Your ZK port

private static Connection connection = null;

// Get a singleton connection to HBase

public static Connection getConnection() throws IOException {

if (connection == null || connection.isClosed()) {

// 1. Create a configuration object

Configuration config = HBaseConfiguration.create();

// 2. Set Zookeeper properties

config.set("hbase.zookeeper.quorum", ZOOKEEPER_QUORUM);

config.set("hbase.zookeeper.property.clientPort", String.valueOf(ZOOKEEPER_CLIENT_PORT));

// 3. Create a connection

connection = ConnectionFactory.createConnection(config);

}

return connection;

}

// Close the connection when the application shuts down

public static void closeConnection() throws IOException {

if (connection != null && !connection.isClosed()) {

connection.close();

}

}

}

Note on hbase-site.xml: Instead of setting properties programmatically, you can place the hbase-site.xml file from your HBase cluster's conf directory in your project's classpath. The HBaseConfiguration.create() method will automatically load it.

Core Components: Table, Put, Get, Delete

Connection: Represents a connection to the HBase cluster. (Obtained fromConnectionFactory)Table: Represents a specific table in HBase. You get an instance from theConnectionobject. It's lightweight and not thread-safe, so you should get a new instance for each thread or operation.Put: Represents aputoperation to insert or update data. You specify the row key and add column families, column qualifiers, and values.Get: Represents agetoperation to fetch a single row by its key.Delete: Represents adeleteoperation to remove a column, a version of a column, or an entire row.Scan: Represents ascanoperation to retrieve multiple rows from a table.

CRUD Operations

Let's assume we have a table named user_data with a column family info.

A. Create (Put Data)

This operation inserts a new row or updates an existing one.

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Table;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.util.Bytes;

// ...

try (Connection connection = HBaseConnectionManager.getConnection();

Table table = connection.getTable(TableName.valueOf("user_data"))) {

// Create a Put object with the row key

Put put = new Put(Bytes.toBytes("user123"));

// Add column family, column qualifier, and value

// Bytes.toBytes() is used to convert Java types to byte arrays

put.addColumn(

Bytes.toBytes("info"),

Bytes.toBytes("name"),

Bytes.toBytes("Alice Smith")

);

put.addColumn(

Bytes.toBytes("info"),

Bytes.toBytes("email"),

Bytes.toBytes("alice.smith@example.com")

);

// Add a timestamp to the value (optional, defaults to current time)

// put.addColumn(..., ..., ..., System.currentTimeMillis());

// Execute the put operation

table.put(put);

System.out.println("Row 'user123' created/updated successfully.");

} catch (IOException e) {

e.printStackTrace();

}

B. Read (Get Data)

This operation retrieves a single row.

// ...

try (Connection connection = HBaseConnectionManager.getConnection();

Table table = connection.getTable(TableName.valueOf("user_data"))) {

// Create a Get object with the row key

Get get = new Get(Bytes.toBytes("user123"));

// To get specific columns, add them to the Get object

// get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));

// Execute the get operation

org.apache.hadoop.hbase.client.Result result = table.get(get);

// Check if the row was found

if (!result.isEmpty()) {

// Retrieve values by column family and qualifier

String name = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));

String email = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("email")));

System.out.println("Retrieved Row 'user123':");

System.out.println(" Name: " + name);

System.out.println(" Email: " + email);

} else {

System.out.println("Row 'user123' not found.");

}

} catch (IOException e) {

e.printStackTrace();

}

C. Update (Put Data)

HBase doesn't have a separate "update" command. You simply perform a Put operation on an existing row key with the same column family and qualifier. The old value is overwritten.

// This code is identical to the "Create" example.

// If 'user123' exists, its 'name' and 'email' will be updated.

Put update = new Put(Bytes.toBytes("user123"));

update.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("Alice Williams"));

table.put(update);

System.out.println("Row 'user123' updated successfully.");

D. Delete Data

This operation removes data from a row.

// ...

try (Connection connection = HBaseConnectionManager.getConnection();

Table table = connection.getTable(TableName.valueOf("user_data"))) {

// Create a Delete object with the row key

Delete delete = new Delete(Bytes.toBytes("user123"));

// Option 1: Delete a specific column

delete.addColumn(Bytes.toBytes("info"), Bytes.toBytes("email"));

// Option 2: Delete all versions of a specific column

// delete.addColumn(..., ...);

// Option 3: Delete the entire row

// delete.addFamily(Bytes.toBytes("info"));

// delete.addFamily(...) // to delete another family

// Execute the delete operation

table.delete(delete);

System.out.println("Column 'email' for row 'user123' deleted successfully.");

} catch (IOException e) {

e.printStackTrace();

}

Scanning vs. Getting

Get: Use this when you know the exact row key. It's a direct lookup and is very fast. It's like a primary key lookup in a relational database.Scan: Use this to retrieve multiple rows that match certain criteria. You can specify a start row, stop row, and filters. Scans can be memory-intensive if they return a lot of data, so they should be used with care.

Example: Using a Scan

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Result;

// ...

try (Connection connection = HBaseConnectionManager.getConnection();

Table table = connection.getTable(TableName.valueOf("user_data"))) {

// Create a Scan object

Scan scan = new Scan();

// Set a start row (inclusive)

scan.withStartRow(Bytes.toBytes("user100"));

// Set a stop row (exclusive)

scan.withStopRow(Bytes.toBytes("user200"));

// Add a filter to get only rows where the 'name' column starts with "Alice"

// scan.setFilter(new PrefixFilter(Bytes.toBytes("Alice")));

// Execute the scan

ResultScanner scanner = table.getScanner(scan);

// Iterate over the results

for (Result result : scanner) {

String rowKey = Bytes.toString(result.getRow());

String name = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));

System.out.println("Row: " + rowKey + ", Name: " + name);

}

// Always close the scanner

scanner.close();

} catch (IOException e) {

e.printStackTrace();

}

Best Practices

- Manage Connections: Crucially, create only one

Connectioninstance per JVM/application and reuse it. It's a heavyweight object that manages connection pools and background threads. Closing it is expensive. - Use

try-with-resources: Always usetry-with-resourcesblocks forConnection,Table,ResultScanner, and any otherCloseableobjects. This ensures they are automatically and safely closed, preventing resource leaks. - Batch Operations: For bulk inserts or updates, use the

Table.put(List<Put> puts)method. This sends the operations in a single RPC call, which is much more efficient than sending them one by one. - Design Your Row Keys: The row key is the most important part of your HBase schema design. A good row key is:

- Unique: Identifies a single row.

- Meaningful: Enables efficient lookups.

- Sorted-friendly: Data is stored sorted by row key. Use patterns like "reversed_timestamp_entity_id" or "region_id_entity_id" to distribute load and enable efficient range scans.

- Use Object Pools for

Table: WhileConnectionis thread-safe,Tableis not. If you are in a multi-threaded environment, don't share a singleTableinstance. Instead, get a newTableinstance from theConnectionfor each thread or operation. Some applications use an object pool forTableinstances, but getting a new one each time is often simpler and sufficient. - Enable Compression: Enable compression on your column families. It significantly reduces disk I/O and network traffic. Snappy or Gzip are common choices.

Complete Example

Here is a full, runnable example that puts everything together.

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

public class HBaseJavaClientExample {

private static final String TABLE_NAME = "people";

private static final String COLUMN_FAMILY = "personal";

private static final String ZOOKEEPER_QUORUM = "localhost";

private static final int ZOOKEEPER_CLIENT_PORT = 2181;

public static void main(String[] args) {

// Setup configuration

Configuration config = HBaseConfiguration.create();

config.set("hbase.zookeeper.quorum", ZOOKEEPER_QUORUM);

config.set("hbase.zookeeper.property.clientPort", String.valueOf(ZOOKEEPER_CLIENT_PORT));

// Use try-with-resources to ensure the connection is closed

try (Connection connection = ConnectionFactory.createConnection(config)) {

System.out.println("--- 1. Creating Table ---");

createTable(connection, TABLE_NAME, COLUMN_FAMILY);

System.out.println("\n--- 2. Inserting Data ---");

insertData(connection, TABLE_NAME);

System.out.println("\n--- 3. Getting a Single Row ---");

getRow(connection, TABLE_NAME, "person_1");

System.out.println("\n--- 4. Scanning the Table ---");

scanTable(connection, TABLE_NAME);

System.out.println("\n--- 5. Deleting Data ---");

deleteRow(connection, TABLE_NAME, "person_2");

System.out.println("\n--- 6. Scanning After Deletion ---");

scanTable(connection, TABLE_NAME);

} catch (IOException e) {

e.printStackTrace();

}

}

private static void createTable(Connection connection, String tableName, String columnFamily) throws IOException {

// Check if table exists

try (Admin admin = connection.getAdmin()) {

if (admin.tableExists(TableName.valueOf(tableName))) {

System.out.println("Table '" + tableName + "' already exists.");

return;

}

TableDescriptorBuilder tableDescriptorBuilder = TableDescriptorBuilder.newBuilder(TableName.valueOf(tableName));

ColumnFamilyDescriptorBuilder columnFamilyDescriptorBuilder = ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(columnFamily));

// Optional: Set compression

// columnFamilyDescriptorBuilder.setCompressionType(Compression.Algorithm.SNAPPY);

tableDescriptorBuilder.setColumnFamily(columnFamilyDescriptorBuilder.build());

admin.createTable(tableDescriptorBuilder.build());

System.out.println("Table '" + tableName + "' created successfully.");

}

}

private static void insertData(Connection connection, String tableName) throws IOException {

try (Table table = connection.getTable(TableName.valueOf(tableName))) {

List<Put> puts = new ArrayList<>();

Put put1 = new Put(Bytes.toBytes("person_1"));

put1.addColumn(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("name"), Bytes.toBytes("Alice"));

put1.addColumn(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("age"), Bytes.toBytes(30));

puts.add(put1);

Put put2 = new Put(Bytes.toBytes("person_2"));

put2.addColumn(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("name"), Bytes.toBytes("Bob"));

put2.addColumn(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("age"), Bytes.toBytes(25));

puts.add(put2);

table.put(puts);

System.out.println("Inserted data for person_1 and person_2.");

}

}

private static void getRow(Connection connection, String tableName, String rowKey) throws IOException {

try (Table table = connection.getTable(TableName.valueOf(tableName))) {

Get get = new Get(Bytes.toBytes(rowKey));

Result result = table.get(get);

if (!result.isEmpty()) {

String name = Bytes.toString(result.getValue(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("name")));

int age = Bytes.toInt(result.getValue(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("age")));

System.out.println("Found row '" + rowKey + "': Name=" + name + ", Age=" + age);

} else {

System.out.println("Row '" + rowKey + "' not found.");

}

}

}

private static void scanTable(Connection connection, String tableName) throws IOException {

try (Table table = connection.getTable(TableName.valueOf(tableName));

ResultScanner scanner = table.getScanner(new Scan())) {

System.out.println("Scanning table '" + tableName + "':");

for (Result result : scanner) {

String rowKey = Bytes.toString(result.getRow());

String name = Bytes.toString(result.getValue(Bytes.toBytes(COLUMN_FAMILY), Bytes.toBytes("name")));

System.out.println(" Row: " + rowKey + ", Name: " + name);

}

}

}

private static void deleteRow(Connection connection, String tableName, String rowKey) throws IOException {

try (Table table = connection.getTable(TableName.valueOf(tableName))) {

Delete delete = new Delete(Bytes.toBytes(rowKey));

table.delete(delete);

System.out.println("Deleted row '" + rowKey + "'.");

}

}

}