Of course! Here is a comprehensive guide on how to use a Kafka Producer with Python, covering everything from setup and basic usage to best practices and a complete, runnable example.

Table of Contents

- What is a Kafka Producer?

- Prerequisites

- Installation

- A Simple "Hello World" Example

- Key Configuration Parameters

- Producing JSON Messages

- Best Practices

- Asynchronous Production with Callbacks

- Handling Retries and Timeouts

- Serializers

- Compression

- Complete Example (Python Producer + Java Consumer)

What is a Kafka Producer?

A Kafka Producer is a client application that publishes (writes) records to Kafka topics. Each record consists of:

- Key: An optional value used to determine which partition the record will be sent to. If no key is provided, records are distributed to partitions in a round-robin fashion.

- Value: The actual data payload you want to send. This can be a string, a JSON object, bytes, etc.

- Headers: Optional key-value pairs for custom metadata.

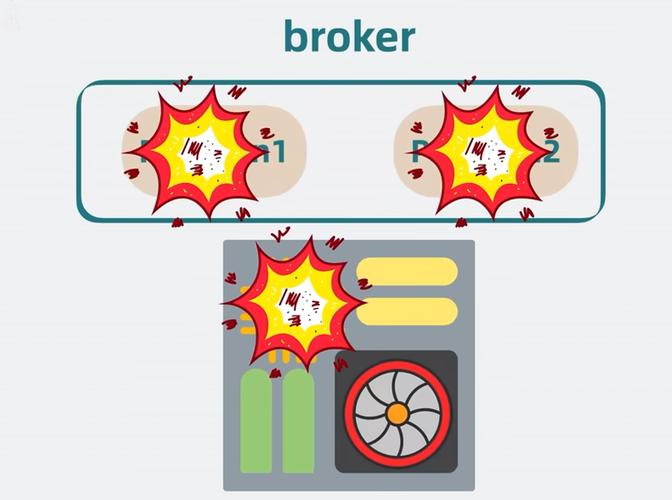

The producer's job is to take the data from your application and reliably send it to one or more Kafka brokers.

Prerequisites

- Python 3 installed.

- A running Kafka cluster. If you don't have one, the easiest way to get started is with Docker Compose using the official

confluentinc/cp-kafkaimage. You can find many tutorials online for this. - A topic to produce messages to. You can create one using the Kafka CLI:

# Replace with your Kafka broker address if different kafka-topics --bootstrap-server localhost:9092 --create --topic python-test-topic --partitions 3 --replication-factor 1

Installation

The most popular and feature-rich Kafka client for Python is the confluent-kafka library, developed by Confluent (the company behind Kafka).

Install it using pip:

pip install confluent-kafka

A Simple "Hello World" Example

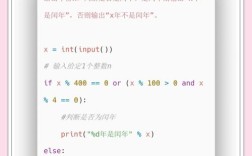

This is the most basic example of producing a string message to a topic.

from confluent_kafka import Producer

# --- Configuration ---

# The 'bootstrap.servers' config is a comma-separated list of Kafka broker addresses.

# This is the only mandatory config.

conf = {

'bootstrap.servers': 'localhost:9092',

}

# --- Create a Producer instance ---

# The producer is responsible for sending messages to Kafka.

producer = Producer(conf)

# --- Topic to send messages to ---

topic = 'python-test-topic'

# --- Produce a message ---

# The produce() method sends a message asynchronously.

# It takes the topic name, the message value, and an optional key.

# The key and value must be bytes, so we encode the string.

producer.produce(topic, key='my-key', value='Hello, Kafka from Python!')

# --- Flush the producer ---

# This is a crucial step. It waits for any outstanding messages to be delivered

# and ensures they are sent to the broker before the program exits.

# A timeout of 0 means it will wait indefinitely.

producer.flush()

print("Message sent successfully!")

To run this:

- Make sure your Kafka broker is running.

- Create the topic as described in the prerequisites.

- Run the Python script:

python your_script_name.py

How to check if it worked? Use the Kafka console consumer to listen for messages on the topic:

kafka-console-consumer --bootstrap-server localhost:9092 --topic python-test-topic --from-beginning

You should see the output: Hello, Kafka from Python!

Key Configuration Parameters

The conf dictionary is where you configure your producer. Here are some of the most important parameters:

| Parameter | Description | Example |

|---|---|---|

bootstrap.servers |

Required. Comma-separated list of host:port pairs for the Kafka broker(s). | 'localhost:9092' |

client.id |

An ID string to pass to the server when making requests. Helps identify the client. | 'my-python-producer' |

acks |

Crucial for reliability. The number of acknowledgments the producer requires the leader to have received before considering a request complete. | 0 (No acknowledgment), 1 (Leader only), 'all' (All in-sync replicas). |

retries |

How many times to retry sending a message that failed due to a transient error (like a broker timeout). | 3 |

linger.ms |

The producer groups together any records that arrive in between request transmissions into a single batched request. This setting specifies how long to wait before sending a batch. 0 means send immediately. |

5 |

compression.type |

The compression algorithm to use for message batches. Can reduce network overhead. | 'gzip', 'snappy', 'lz4', 'zstd' |

batch.size |

The producer will attempt to batch records together into fewer requests whenever multiple records are being sent to the same partition. This setting gives the size of the batch in bytes. | 16384 (16KB) |

Producing JSON Messages

It's very common to send structured data like JSON. The process is simple: convert your Python dictionary to a JSON string, then encode it to bytes.

import json

from confluent_kafka import Producer

conf = {'bootstrap.servers': 'localhost:9092'}

producer = Producer(conf)

topic = 'json-messages-topic'

# Create a Python dictionary

user_data = {

"user_id": 123,

"username": "johndoe",

"action": "login",

"timestamp": "2025-10-27T10:00:00Z"

}

# Convert the dictionary to a JSON string, then encode to bytes

# Using ensure_ascii=False is good practice for international characters

json_message = json.dumps(user_data, ensure_ascii=False).encode('utf-8')

print(f"Sending JSON message: {json_message}")

producer.produce(topic, key=str(user_data['user_id']).encode('utf-8'), value=json_message)

producer.flush()

print("JSON message sent successfully!")

Best Practices

Asynchronous Production with Callbacks

By default, producer.produce() is asynchronous. This is highly efficient for performance. However, you should always provide a delivery callback to know if the message was successfully delivered or if it failed.

import confluent_kafka

from confluent_kafka import KafkaException

# ... (conf and producer setup from before) ...

# Define the delivery callback function

def delivery_report(err, msg):

""" Called once for each message to indicate delivery result. """

if err is not None:

print(f'Message delivery failed: {err}')

else:

# msg.topic() is the topic name

# msg.partition() is the partition number

# msg.offset() is the offset in the partition

print(f'Message delivered to {msg.topic()} [{msg.partition()}] at offset {msg.offset()}')

# Produce a message and register the callback

# The callback is called when the message is acknowledged by the broker

producer.produce(topic, value='My message with callback!', callback=delivery_report)

# The flush() method will still wait for all callbacks to be called.

producer.flush()

Handling Retries and Timeouts

The retries config handles transient network issues. For more robust applications, you should also handle KafkaException which can be raised for other reasons, like a message being too large.

from confluent_kafka import KafkaException

# ... (conf and producer setup) ...

conf['retries'] = 3 # Set retries in config

producer = Producer(conf)

try:

for i in range(10):

producer.produce(topic, value=f'Message {i}')

# The poll() method is needed to handle delivery callbacks and other events

# It's good practice to call it periodically.

producer.poll(0)

producer.flush()

except BufferError:

# This happens if the producer queue is full (e.g., messages are produced faster than they can be sent)

print(f"Local queue is full! {len(producer)} messages waiting.")

except KafkaException as e:

print(f"Kafka error occurred: {e}")

Serializers

For complex objects, you can create a custom serializer function instead of manually calling json.dumps().encode() every time.

def json_serializer(obj):

""" A serializer that converts a Python dict to a JSON byte string. """

return json.dumps(obj).encode('utf-8')

# Use it in your produce call

user_data = {"name": "Jane", "id": 456}

producer.produce(topic, value=json_serializer(user_data))

Compression

Enabling compression is a great way to reduce network I/O and storage costs, especially for large messages or high-throughput scenarios.

# Add