Of course! Let's dive deep into XGBClassifier, the go-to implementation of the Gradient Boosting algorithm in Python using the popular XGBoost library.

What is XGBClassifier?

XGBClassifier stands for XGBoost Classifier. It's an implementation of the Gradient Boosting algorithm, which is an ensemble learning technique.

- Ensemble Learning: It builds a model by combining the predictions of several simpler models (called "weak learners," typically decision trees).

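- Gradient Boosting: It builds these models sequentially. Each new model tries to correct the errors made by the previous ones. It does this by fitting the negative gradient of the loss function with respect to the current predictions (for squared error, this is simply the residual), so each new tree concentrates on what the ensemble still gets wrong.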
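To make the sequential idea concrete, here is a minimal sketch of gradient boosting for squared-error regression, using one-split "stumps" as weak learners. It is a toy illustration in plain Python, not how XGBoost is implemented internally; the data and the helper names (`fit_stump`, `predict_stump`, `boost`) are made up for this example.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that best fits the residuals (least squares)."""
    best = None
    # Skip the largest value so the right-hand side is never empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    return best[1:]  # (threshold, left_value, right_value)


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Fit stumps one after another, each on the residuals of the current ensemble."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(ys)
    stumps, losses = [], []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual y - prediction.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
        losses.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return base, stumps, losses


# Made-up toy data with a step-like pattern.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

base, stumps, losses = boost(xs, ys)
print(f"MSE after 1 round: {losses[0]:.4f}")
print(f"MSE after {len(losses)} rounds: {losses[-1]:.4f}")
```

Each round only has to explain what the previous rounds left over, which is why the training error keeps shrinking as stumps are added.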

XGBoost is more than a textbook implementation of gradient boosting: it is heavily optimized and adds features such as built-in regularization and parallel tree construction, which have made it a frequent choice in winning machine learning competition entries.

Key Advantages of XGBoost

- Performance & Accuracy: It's one of the most effective and widely used algorithms for structured/tabular data.

- Speed & Efficiency: It's written in C++ and is highly optimized for performance, with features like parallel processing and hardware acceleration (like GPUs).

- Regularization: It includes L1 (Lasso) and L2 (Ridge) regularization to prevent overfitting, which is a common problem with standard Gradient Boosting.

- Handling Missing Values: XGBoost handles missing values natively. At each split it learns a default direction (left or right) for instances whose value is missing, so you don't have to impute them beforehand.

- Flexibility: It offers a wide range of hyperparameters to tune the model for specific problems.
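To illustrate the regularization point above, here is a small sketch of how L1 and L2 penalties shrink a single leaf's weight. It follows the closed-form leaf weight from the XGBoost formulation (sum of gradients G, sum of Hessians H), but it is a simplified illustration: the function name `leaf_weight` and the numbers are hypothetical, and the real library applies further constraints that are not shown here.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=0.0):
    """Leaf weight under L1/L2 penalties.

    L1 (reg_alpha) soft-thresholds the gradient sum toward zero;
    L2 (reg_lambda) enlarges the denominator, shrinking the weight.
    """
    if grad_sum > reg_alpha:
        shrunk = grad_sum - reg_alpha
    elif grad_sum < -reg_alpha:
        shrunk = grad_sum + reg_alpha
    else:
        return 0.0  # L1 penalty is large enough to zero the leaf out
    return -shrunk / (hess_sum + reg_lambda)


# Hypothetical gradient/Hessian sums for the instances falling in one leaf.
G, H = -4.0, 8.0

for reg_lambda, reg_alpha in [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (1.0, 5.0)]:
    w = leaf_weight(G, H, reg_lambda=reg_lambda, reg_alpha=reg_alpha)
    print(f"lambda={reg_lambda}, alpha={reg_alpha}: weight={w:.4f}")
```

Larger `reg_lambda` pulls every leaf weight toward zero smoothly, while a large enough `reg_alpha` sets weakly supported leaves to exactly zero.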

Step-by-Step Guide to Using XGBClassifier

Here's a complete, practical example from installation to making predictions.

Step 1: Installation

If you don't have the XGBoost library installed, open your terminal or command prompt and run:

pip install xgboost

It's also highly recommended to have scikit-learn and pandas installed, as they are commonly used alongside XGBoost. matplotlib is needed for the feature importance plot in Step 8.

pip install scikit-learn pandas matplotlib
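Because some XGBoost behavior depends on the installed version, it can help to check what you actually have before continuing. The following sketch uses only the standard library (`importlib.metadata`, Python 3.8+); the helper name `installed_version` is made up for this example.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("xgboost", "scikit-learn", "pandas", "matplotlib"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```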

Step 2: Import Libraries

import xgboost as xgb
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
import pandas as pd
import numpy as np

Step 3: Load and Prepare Data

We'll use the famous Iris dataset, which is conveniently available in scikit-learn.

# Load the Iris dataset

from sklearn.datasets import load_iris

iris = load_iris()

X = iris.data

y = iris.target

# For a more realistic example, let's create a DataFrame

df = pd.DataFrame(X, columns=iris.feature_names)

df['target'] = y

print("First 5 rows of the dataset:")

print(df.head())

# Define features (X) and target (y)

X = df.drop('target', axis=1)

y = df['target']

Step 4: Split Data into Training and Testing Sets

This is a crucial step to evaluate your model's performance on unseen data.

# Split the data into training and testing sets (80% train, 20% test)

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.2,
    random_state=42,  # for reproducibility
    stratify=y        # ensures the split has the same proportion of classes
)

print(f"\nTraining set shape: {X_train.shape}")

print(f"Testing set shape: {X_test.shape}")
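To see what `stratify=y` buys you, you can count the classes on each side of the split. This is a self-contained sketch that reloads Iris rather than reusing the variables above:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# np.bincount counts how many samples carry each integer label.
train_counts = np.bincount(y_train)
test_counts = np.bincount(y_test)

print("Train class counts:", train_counts)
print("Test class counts: ", test_counts)
```

Iris has 50 samples per class, so a stratified 80/20 split keeps the three classes perfectly balanced in both sets. Without `stratify`, the counts would vary with the random seed.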

Step 5: Initialize and Train the XGBClassifier

This is the core of the process. We'll create an instance of the classifier and fit it to our training data.

# Initialize the XGBClassifier

# You can start with default parameters

xgb_classifier = xgb.XGBClassifier(
    objective='multi:softmax',  # Multi-class classification
    num_class=3,                # Number of classes (the scikit-learn wrapper can also infer this from y)
    use_label_encoder=False,    # Silences a deprecation warning on XGBoost 1.3-1.5; deprecated in later releases
    eval_metric='mlogloss'      # Evaluation metric: multi-class log loss
)

# Train the model

print("\nTraining the XGBoost model...")

xgb_classifier.fit(X_train, y_train)

print("Training complete.")
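The Iris target is already encoded as the integers 0, 1, 2, which is the form recent XGBoost versions expect. If your own target column holds strings, one common approach is to encode it with scikit-learn's `LabelEncoder` before calling `fit` and decode the predictions afterwards. The sketch below builds string labels from the Iris species names purely for illustration; `example_pred` is a hypothetical prediction array, not model output.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import LabelEncoder

iris = load_iris()

# Pretend the target came as strings such as 'setosa' instead of integers.
y_str = iris.target_names[iris.target]

encoder = LabelEncoder()
y_encoded = encoder.fit_transform(y_str)  # integers 0..n_classes-1

print("Classes:", list(encoder.classes_))
print("First five encoded labels:", y_encoded[:5])

# y_encoded is what you would pass to fit(). After predict(), map the
# integer predictions back to the original names:
example_pred = np.array([0, 2, 1])  # hypothetical predictions
print(encoder.inverse_transform(example_pred))
```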

Step 6: Make Predictions

Now that the model is trained, we can use it to predict the class labels for our test set.

# Make predictions on the test set
y_pred = xgb_classifier.predict(X_test)

Step 7: Evaluate the Model's Performance

Let's see how well our model did using common classification metrics.

# Calculate accuracy

accuracy = accuracy_score(y_test, y_pred)

print(f"\nAccuracy: {accuracy:.4f}")

# Display a detailed classification report

print("\nClassification Report:")

print(classification_report(y_test, y_pred, target_names=iris.target_names))

# Display the confusion matrix

print("\nConfusion Matrix:")

print(confusion_matrix(y_test, y_pred))
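If the numbers in the classification report feel opaque, it helps to derive them from the confusion matrix by hand. The matrix below is hypothetical (it is not the output of the model above); rows are true classes and columns are predicted classes, matching scikit-learn's convention.

```python
import numpy as np

# Hypothetical 3-class confusion matrix: rows = true class, columns = predicted class.
cm = np.array([
    [10, 0, 0],
    [0, 9, 1],
    [0, 2, 8],
])

# Correct predictions sit on the diagonal.
accuracy = np.trace(cm) / cm.sum()

# Precision: of everything predicted as class k (column k), how much was right?
precision = np.diag(cm) / cm.sum(axis=0)

# Recall: of everything that truly is class k (row k), how much was found?
recall = np.diag(cm) / cm.sum(axis=1)

print(f"Accuracy: {accuracy:.4f}")
print("Precision per class:", precision.round(4))
print("Recall per class:   ", recall.round(4))
```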

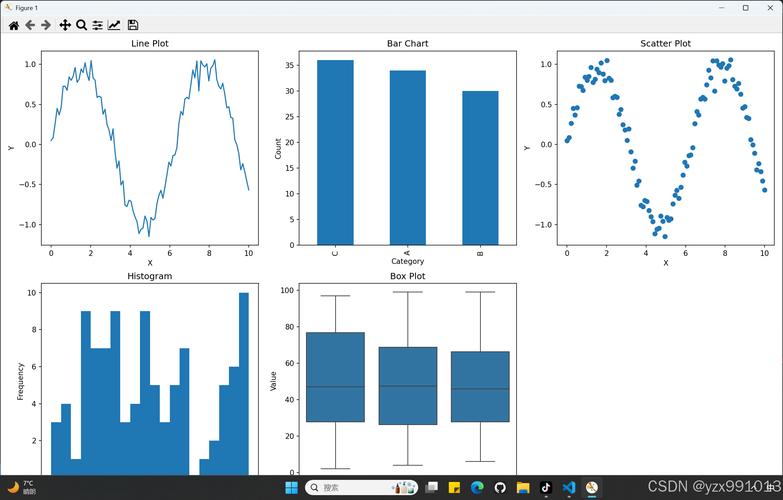

Step 8: Feature Importance

A great feature of tree-based models is that they can tell you which features were most important for making predictions.

import matplotlib.pyplot as plt

# Get feature importance

importance = xgb_classifier.feature_importances_

# Create a pandas Series for easy plotting

feature_importance = pd.Series(importance, index=iris.feature_names)

feature_importance.sort_values(ascending=False, inplace=True)

# Plot feature importance

plt.figure(figsize=(10, 6))

feature_importance.plot(kind='bar')
plt.title('Feature Importance')

plt.ylabel('Importance Score')

plt.show()

Key Hyperparameters to Tune

The default parameters are a good start, but for optimal performance, you'll need to tune them. Here are the most important ones:

| Hyperparameter | Description | Common Values |
|---|---|---|
| n_estimators | The number of boosting rounds (trees) to build. | 100, 200, 500, 1000. More trees can improve the fit, but they increase training time and the risk of overfitting. |
| learning_rate (or eta) | The step size shrinkage used to prevent overfitting. | 0.01, 0.1, 0.2. A lower value requires more trees (n_estimators). |
| max_depth | The maximum depth of a tree. Controls model complexity. | 3, 6, 10. Deeper trees can lead to overfitting. |
| subsample | The fraction of training samples used to fit each tree. | 0.8, 0.9, 1.0. Values less than 1.0 introduce randomness, helping to prevent overfitting. |
| colsample_bytree | The fraction of features used to fit each tree. | 0.8, 0.9, 1.0. Similar to subsample but for features. |
| reg_alpha (L1) | L1 regularization term on weights. | 0, 0.01, 0.1, 1. Higher values lead to more regularization. |
| reg_lambda (L2) | L2 regularization term on weights. | 0, 0.1, 1, 10. Higher values lead to more regularization. |
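The link between learning_rate and n_estimators can be shown with simple arithmetic. The sketch below assumes an idealized weak learner that fits the current residual exactly each round, so after shrinkage the remaining error is multiplied by (1 - learning_rate) per round. Real trees are not this perfect, and the function name `rounds_needed` is made up, so treat the numbers as a rough intuition only.

```python
def rounds_needed(learning_rate, tolerance=0.01):
    """Count rounds until the remaining residual fraction drops below `tolerance`.

    Assumes each round removes exactly `learning_rate` of what is left.
    """
    remaining = 1.0
    rounds = 0
    while remaining >= tolerance:
        remaining *= 1.0 - learning_rate
        rounds += 1
    return rounds


for lr in (0.2, 0.1, 0.01):
    print(f"learning_rate={lr}: about {rounds_needed(lr)} rounds to reach 1% residual")
```

Cutting the learning rate by a factor of ten requires roughly ten times as many rounds to reach the same point, which is why the two parameters are usually tuned together.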

Example of Tuning with GridSearchCV

from sklearn.model_selection import GridSearchCV

# Define the parameter grid

param_grid = {
    'n_estimators': [100, 200],
    'max_depth': [3, 5, 7],
    'learning_rate': [0.01, 0.1],
    'subsample': [0.8, 1.0]
}

# Initialize the classifier

xgb_clf = xgb.XGBClassifier(use_label_encoder=False, eval_metric='mlogloss')

# Set up GridSearchCV

grid_cv = GridSearchCV(
    estimator=xgb_clf,
    param_grid=param_grid,
    scoring='accuracy',
    cv=5,  # 5-fold cross-validation
    verbose=1,
    n_jobs=-1  # Use all available cores
)

# Fit GridSearchCV to the data

print("\nPerforming Grid Search...")

grid_cv.fit(X_train, y_train)

# Print the best parameters and best score

print(f"\nBest parameters found: {grid_cv.best_params_}")

print(f"Best cross-validation accuracy: {grid_cv.best_score_:.4f}")

# Evaluate the best model on the test set

best_model = grid_cv.best_estimator_

y_pred_best = best_model.predict(X_test)

print(f"\nTest accuracy of best model: {accuracy_score(y_test, y_pred_best):.4f}")
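Grid search cost grows multiplicatively with every parameter you add, so it is worth counting the number of model fits before launching a search. This sketch repeats the same grid and uses only the standard library (`math.prod`, Python 3.8+):

```python
from math import prod

param_grid = {
    'n_estimators': [100, 200],
    'max_depth': [3, 5, 7],
    'learning_rate': [0.01, 0.1],
    'subsample': [0.8, 1.0],
}
cv_folds = 5

# Every combination of values is one candidate model.
n_candidates = prod(len(values) for values in param_grid.values())

# Each candidate is trained once per cross-validation fold.
total_fits = n_candidates * cv_folds

print(f"Candidates: {n_candidates}")
print(f"Total fits: {total_fits}")
```

With its default `refit=True`, GridSearchCV then trains the best candidate one more time on the full training set. Adding a single extra value to one parameter's list would multiply the candidate count accordingly.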

Important: use_label_encoder=False

You will often see the use_label_encoder=False parameter, especially in older examples. XGBClassifier used to convert class labels into an integer format (e.g., 0, 1, 2) automatically. XGBoost 1.3 began phasing out that built-in label encoder and later releases deprecated the parameter itself, so current versions expect labels that are already integers from 0 to n_classes - 1. By setting it to False, you ensure that you are handling the label encoding explicitly or relying on scikit-learn's handling, which