Of course! Working with string data in Python's Pandas DataFrames is a fundamental task. Pandas provides a powerful and efficient set of tools for this, primarily through the .str accessor.

Here’s a comprehensive guide covering everything from basic operations to more advanced techniques.

The Foundation: The .str Accessor

When a Series (such as a single DataFrame column) holds string data, you can access a collection of string methods through its .str accessor. These are vectorized string operations: one call applies the method to every element and passes missing values (NaN/None) through untouched. This is more concise and less error-prone than iterating through the data with a standard Python loop.

Key Concept: You don't call methods like .upper() directly on the Series. You call them on the .str accessor: series.str.upper().
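Here is a minimal sketch of the difference, using a small made-up Series (the values are purely illustrative). The .str call passes the missing entry through. A hand-written comprehension has to guard against it explicitly, because calling .upper() on None raises an AttributeError.

```python
import pandas as pd

# Hypothetical sample data containing one missing value
names = pd.Series(["alice", None, "carol"])

# The .str accessor applies upper() to every element in one call;
# the missing entry stays missing instead of raising an error
upper = names.str.upper()
print(upper.tolist())

# A plain comprehension must skip non-strings itself
manual = [n.upper() if isinstance(n, str) else n for n in names]
print(manual)
```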

Setup: Let's create a sample DataFrame

import pandas as pd
import numpy as np  # For NaN values

data = {
    'first_name': ['John', 'Jane', 'Peter', 'Emily', np.nan],
    'last_name': ['Doe', 'Smith', 'Jones', 'Brown', 'Davis'],
    'email': ['john.doe@example.com', 'jane.smith@work.com', 'peter.jones@blog.net', 'EMILY@BROWN.ORG', None]
}

df = pd.DataFrame(data)

print("Original DataFrame:")
print(df)

Output:

Original DataFrame:
   first_name  last_name                 email
0        John        Doe  john.doe@example.com
1        Jane      Smith   jane.smith@work.com
2       Peter      Jones  peter.jones@blog.net
3       Emily      Brown       EMILY@BROWN.ORG
4         NaN      Davis                  None

Common String Operations

Here are the most frequently used string methods.

A. Case Conversion

These methods are used to standardize the case of your strings.

.str.lower(): Converts all characters to lowercase.
.str.upper(): Converts all characters to uppercase.
.str.title(): Converts the first character of each word to uppercase and the rest to lowercase.
.str.capitalize(): Converts the first character of the string to uppercase and the rest to lowercase.
.str.swapcase(): Swaps the case of each character.
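The difference between title(), capitalize(), and swapcase() is easiest to see side by side. This sketch uses made-up strings that are not part of the sample DataFrame. Note that title() treats an apostrophe as a word boundary, which can produce results like "It'S".

```python
import pandas as pd

# Illustrative strings, not part of the sample DataFrame
s = pd.Series(["hello world", "mIxEd CaSe", "it's fine"])

print(s.str.title().tolist())       # → ['Hello World', 'Mixed Case', "It'S Fine"]
print(s.str.capitalize().tolist())  # → ['Hello world', 'Mixed case', "It's fine"]
print(s.str.swapcase().tolist())    # → ['HELLO WORLD', 'MiXeD cAsE', "IT'S FINE"]
```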

# Create a new column with lowercase emails
df['email_lower'] = df['email'].str.lower()

# Standardize first names to title case
df['first_name_standard'] = df['first_name'].str.title()

print("\nDataFrame with Case Conversion:")
print(df[['email', 'email_lower', 'first_name', 'first_name_standard']])

Output:

DataFrame with Case Conversion:
                  email           email_lower  first_name  first_name_standard
0  john.doe@example.com  john.doe@example.com        John                 John
1   jane.smith@work.com   jane.smith@work.com        Jane                 Jane
2  peter.jones@blog.net  peter.jones@blog.net       Peter                Peter
3       EMILY@BROWN.ORG       emily@brown.org       Emily                Emily
4                  None                  None         NaN                  NaN

B. Splitting Strings

The .str.split() method is incredibly useful for parsing strings.

.str.split(sep): Splits each string around the given separator and returns a Series of lists.
.str.split(sep, expand=True): Splits each string and expands the pieces into separate DataFrame columns, which is convenient for parsing structured text such as email addresses or file paths.
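Here is a short sketch of the list form, using some made-up path-like strings. Without expand=True, each element holds a Python list, which you can index element-wise with .str[...]. The n parameter (passed here by keyword) caps the number of splits.

```python
import pandas as pd

# Illustrative path-like strings
paths = pd.Series(["a/b/c", "x/y", "z"])

# Without expand=True, each element becomes a Python list of pieces
parts = paths.str.split("/")
print(parts.tolist())          # → [['a', 'b', 'c'], ['x', 'y'], ['z']]

# .str[i] indexes into each list element-wise
print(parts.str[0].tolist())   # → ['a', 'x', 'z']
print(parts.str[-1].tolist())  # → ['c', 'y', 'z']

# n=1 splits at the first separator only; expand=True returns a DataFrame,
# and rows with fewer pieces are padded with missing values
cols = paths.str.split("/", n=1, expand=True)
print(cols)
```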

# Split the email into username and domain
split_emails = df['email'].str.split('@', expand=True)

# Add new columns to the DataFrame
df['username'] = split_emails[0]
df['domain'] = split_emails[1]

print("\nDataFrame after Splitting:")
print(df[['email', 'username', 'domain']])

Output:

DataFrame after Splitting:
                  email     username       domain
0  john.doe@example.com     john.doe  example.com
1   jane.smith@work.com   jane.smith     work.com
2  peter.jones@blog.net  peter.jones     blog.net
3       EMILY@BROWN.ORG        EMILY    BROWN.ORG
4                  None         None         None

C. Concatenating Strings

You can combine strings from different columns.

.str.cat(others=None, sep=None, na_rep=None): Concatenates strings.
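Two other behaviors of .str.cat() are worth knowing, shown here on made-up Series. Calling it without others collapses the whole Series into a single string. Passing a list of Series concatenates several columns element-wise.

```python
import pandas as pd

s = pd.Series(["a", "b", None])

# No `others`: the whole Series is joined into one string.
# Missing values are dropped unless na_rep is given.
print(s.str.cat(sep=","))              # → a,b
print(s.str.cat(sep=",", na_rep="?"))  # → a,b,?

# A list of Series: element-wise concatenation across several columns
t = pd.Series(["x", "y", "z"])
u = pd.Series(["1", "2", "3"])
print(t.str.cat([u, t], sep="-").tolist())  # → ['x-1-x', 'y-2-y', 'z-3-z']
```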

# Combine first and last names into a full name
df['full_name'] = df['first_name'].str.cat(df['last_name'], sep=' ')

# Handle NaN values by replacing them with a placeholder like 'Unknown'
df['full_name_no_nan'] = df['first_name'].str.cat(df['last_name'], sep=' ', na_rep='Unknown')

print("\nDataFrame after Concatenation:")
print(df[['first_name', 'last_name', 'full_name', 'full_name_no_nan']])

Output:

DataFrame after Concatenation:
   first_name  last_name    full_name  full_name_no_nan
0        John        Doe     John Doe          John Doe
1        Jane      Smith   Jane Smith        Jane Smith
2       Peter      Jones  Peter Jones       Peter Jones
3       Emily      Brown  Emily Brown       Emily Brown
4         NaN      Davis          NaN     Unknown Davis

D. Replacing and Removing Substrings

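.str.replace(pat, repl, regex=False): Replaces occurrences of a pattern (pat) with another string (repl). Since pandas 2.0, regex defaults to False, so pat is matched literally unless you pass regex=True.
.str.strip(), .str.lstrip(), .str.rstrip(): Remove leading and/or trailing whitespace (or any other characters passed as an argument).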
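Here is a sketch of both methods on made-up order codes. With no argument, strip() removes whitespace. With an argument, it removes any of the characters you pass. Setting regex=True lets replace() substitute a pattern such as a run of digits. The literal-versus-regex distinction matters for characters like '.'.

```python
import pandas as pd

# Illustrative messy codes
s = pd.Series(["  order-123  ", "--order-45--", "order-6"])

# No argument: strip whitespace. With an argument: strip any of those characters.
print(s.str.strip().tolist())      # → ['order-123', '--order-45--', 'order-6']
print(s.str.strip(" -").tolist())  # → ['order-123', 'order-45', 'order-6']

# regex=True: r"\d+" matches a run of digits
print(s.str.strip(" -").str.replace(r"\d+", "N", regex=True).tolist())
# → ['order-N', 'order-N', 'order-N']

# regex=False: "." is a literal dot, not "any character"
dots = pd.Series(["a.b", "axb"])
print(dots.str.replace(".", "_", regex=False).tolist())  # → ['a_b', 'axb']
```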

# Replace the 'example.com' domain with 'placeholder.com'
# (regex=False matches the text literally, so '.' is not treated as a regex wildcard)
df['email_cleaned'] = df['email'].str.replace('example.com', 'placeholder.com', regex=False)

# Strip leading/trailing whitespace from first names (a no-op for this sample data)
df['first_name_clean'] = df['first_name'].str.strip()

print("\nDataFrame after Replacing and Stripping:")
print(df[['email', 'email_cleaned', 'first_name', 'first_name_clean']])

Output:

DataFrame after Replacing and Stripping:
                  email             email_cleaned  first_name  first_name_clean
0  john.doe@example.com  john.doe@placeholder.com        John              John
1   jane.smith@work.com       jane.smith@work.com        Jane              Jane
2  peter.jones@blog.net      peter.jones@blog.net       Peter             Peter
3       EMILY@BROWN.ORG           EMILY@BROWN.ORG       Emily             Emily
4                  None                      None         NaN               NaN

Extracting Information with Regular Expressions (Regex)

This is one of the most powerful features of the .str accessor. You can use regex to find and extract complex patterns.

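.str.extract(pat, flags=0, expand=True): Extracts the capture groups from the first match in each string. If expand=True, it returns a DataFrame with one column per group. If expand=False and the pattern has exactly one capture group, it returns a Series (with several groups, it still returns a DataFrame).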
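When a pattern has several groups, named groups of the form (?P<name>...) become the column names of the returned DataFrame. Here is a minimal sketch with made-up addresses. The group names user and domain are arbitrary choices.

```python
import pandas as pd

emails = pd.Series(["john.doe@example.com", "EMILY@BROWN.ORG", "not-an-email"])

# Two named groups -> a DataFrame with columns 'user' and 'domain';
# a string with no match gets NaN in every column
parts = emails.str.extract(r"(?P<user>[^@]+)@(?P<domain>.+)")
print(parts)

# One group with expand=False -> a Series
user = emails.str.extract(r"([^@]+)@", expand=False)
print(user.tolist())
```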

# Extract the top-level domain (e.g., com, net, org)
# The regex r'\.([a-zA-Z]+)$' captures the run of letters after the last '.' at the end of the string
df['tld'] = df['email'].str.extract(r'\.([a-zA-Z]+)$', expand=False)

# Extract numbers from a string column (rows without digits get NaN)
data_with_numbers = {'id': ['ID-123', 'ID-456', 'ID-789', 'ID-ABC']}
df_numbers = pd.DataFrame(data_with_numbers)
df_numbers['number'] = df_numbers['id'].str.extract(r'(\d+)', expand=False)

print("\nDataFrame with Regex Extraction:")
print(df[['email', 'tld']])

print("\nDataFrame with Number Extraction:")
print(df_numbers)

Output:

DataFrame with Regex Extraction:

email tld

0 john.doe@example.com com

1 jane.smith@work.com com

2 peter.jones@blog.net net

3 EMILY@BROWN.ORG ORG

4 None None

DataFrame with Number Extraction:

id number

0 ID-123 123

1 ID-456 456

2 ID-789 789

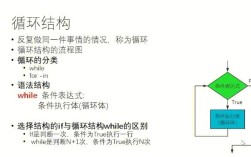

3 ID-ABC NoneChecking String Conditions (Boolean Masking)

You can create boolean masks (True/False Series) to filter your DataFrame based on string properties.

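.str.contains(pat, case=True, flags=0, regex=True): Checks whether a pattern (a regex by default) is contained in each string. The na argument sets the value returned for missing entries. Passing na=False treats them as non-matches, so the mask stays purely boolean and can be used to filter a column that contains NaN/None.
.str.startswith(prefix): Checks whether each string starts with a specific prefix.
.str.endswith(suffix): Checks whether each string ends with a specific suffix.
.str.match(pat): Checks whether the beginning of each string matches a regex pattern (similar to re.match).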
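Here is a sketch of combining conditions on a small made-up DataFrame (separate from the sample above). Setting na=False keeps the mask purely boolean even though one email is missing. Setting case=False ignores capitalization. Masks combine with & (and), | (or), and ~ (not).

```python
import pandas as pd

# Hypothetical data, including a missing email
people = pd.DataFrame({
    "email": ["a@gmail.com", "b@work.com", None, "C@GMAIL.COM"],
    "last_name": ["Davis", "Doe", "Smith", "Dunn"],
})

# Literal, case-insensitive match; missing emails count as False
is_gmail = people["email"].str.contains("@gmail.com", case=False, regex=False, na=False)
starts_with_d = people["last_name"].str.startswith("D")

# Combine masks element-wise with & (wrap inline comparisons in parentheses)
print(people[is_gmail & starts_with_d])
```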

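# Find all emails from a specific domain
# regex=False matches '.' literally; na=False turns missing emails into False so the mask is purely boolean
is_gmail_user = df['email'].str.contains('@gmail.com', regex=False, na=False)
df_gmail_users = df[is_gmail_user]  # empty for this sample data: no gmail.com addresses

# Find all last names that start with 'D'
starts_with_d = df['last_name'].str.startswith('D')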

df_d