Of course! The term "Python model update" can mean several different things depending on the context. Let's break it down into the most common scenarios, from simple data updates in a script to complex machine learning model retraining.

I'll cover the following scenarios:

- Updating a Python Model Object in a Script: Modifying the attributes or data of a model you've already created in your code.

- Updating a Python Package/Module: Upgrading a library you've installed (like scikit-learn or pandas).

- Updating a Machine Learning Model: Retraining a model with new data to improve its performance.

- Saving and Loading Updated Models: Best practices for persisting your model changes.

Scenario 1: Updating a Python Model Object (In-Memory)

This is the most straightforward case. You have a model instance in your script, and you want to change its properties or update its internal data.

Example: Updating a Custom Class Model

Let's say you have a simple class that represents a data model.

    class CustomerModel:
        def __init__(self, customer_id, name, status):
            self.customer_id = customer_id
            self.name = name
            self.status = status  # e.g., 'active', 'inactive'

        def display_info(self):
            print(f"ID: {self.customer_id}, Name: {self.name}, Status: {self.status}")

    # --- Initial Model ---
    customer1 = CustomerModel(101, "Alice", "active")
    print("Initial state:")
    customer1.display_info()

    # --- UPDATE the model object ---
    # You can directly assign new values to its attributes.
    customer1.status = "inactive"
    customer1.name = "Alice Smith"  # Name change due to marriage
    print("\nUpdated state:")
    customer1.display_info()

Output:

    Initial state:
    ID: 101, Name: Alice, Status: active

    Updated state:
    ID: 101, Name: Alice Smith, Status: inactive

This is common for simple data containers or objects whose state changes within a single program's execution.
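
When several attributes change together, direct assignment can leave the object half-updated if one of the new values turns out to be invalid. Here is a minimal sketch of one way to guard against that. The `update` method and the `ALLOWED_STATUSES` set are hypothetical additions for illustration, not part of the example above:

```python
from dataclasses import dataclass

# Hypothetical set of allowed values; adjust to your own domain.
ALLOWED_STATUSES = {"active", "inactive"}


@dataclass
class CustomerModel:
    customer_id: int
    name: str
    status: str

    def update(self, **changes):
        """Validate every change first, then apply them all together."""
        for field_name, value in changes.items():
            if field_name not in self.__dataclass_fields__:
                raise AttributeError(f"Unknown field: {field_name}")
            if field_name == "status" and value not in ALLOWED_STATUSES:
                raise ValueError(f"Invalid status: {value}")
        # Nothing is assigned until all checks above have passed.
        for field_name, value in changes.items():
            setattr(self, field_name, value)


customer1 = CustomerModel(101, "Alice", "active")
customer1.update(name="Alice Smith", status="inactive")
print(customer1)
```

Because validation runs before any assignment, a rejected update leaves the object exactly as it was.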

Scenario 2: Updating a Python Package/Module (e.g., scikit-learn)

This refers to upgrading the libraries you use to build your models. It's crucial for getting bug fixes, new features, and performance improvements.

Using pip

The standard tool for managing Python packages is pip.

-

Check the current version:

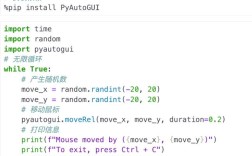

(图片来源网络,侵删)

(图片来源网络,侵删)pip show scikit-learn # or pip show pandas

-

Update the package to the latest version:

pip install --upgrade scikit-learn # or pip install --upgrade pandas

-

Update a package to a specific version:

pip install scikit-learn==1.2.2

Using conda (if you use Anaconda/Miniconda)

If you manage your environment with conda, it's often better to use it for updates to avoid dependency conflicts.

- Update a package:

      conda update scikit-learn

- Update all packages in your environment:

      conda update --all

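Warning: Major releases of machine learning libraries can change or remove parts of the API. Check the library's changelog before you upgrade, and rerun your tests afterwards. Also note that a model pickled under one scikit-learn version is not guaranteed to load or behave identically under another.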
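
To confirm from inside Python which versions are actually installed, for example before and after an upgrade, the standard library's `importlib.metadata` can be queried. This is a small sketch; the helper name `installed_version` is made up for illustration:

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


# Use the distribution names known to pip (e.g. "scikit-learn", not "sklearn").
for name in ("scikit-learn", "pandas", "joblib"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```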

Scenario 3: Updating a Machine Learning Model (Retraining)

This is a very common and important task in machine learning. "Updating" a model usually means training it further on new data to incorporate recent trends or patterns.

Let's use a scikit-learn example.

The Process:

- Load the old, trained model.

- Load the new data.

- (Optional but recommended) Validate the new data.

- Update the model. This can mean two things:

  - Incremental Learning: Some models support learning from new data without retraining on all the old data. This is very efficient.

  - Full Retraining: Combine the old data with the new data and retrain from scratch. This is simpler but can be computationally expensive and requires storing all historical data.
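
The validation step can be as simple as a few schema and sanity checks before the new rows reach the model. The sketch below assumes a batch with the columns `feature1`, `feature2`, and `target` and binary labels. The function name `validate_new_data` and both constants are hypothetical:

```python
import pandas as pd

# Hypothetical schema: the columns the existing model was trained on.
EXPECTED_COLUMNS = ["feature1", "feature2", "target"]
ALLOWED_LABELS = {0, 1}


def validate_new_data(df):
    """Return a list of problems found; an empty list means the batch passed."""
    problems = []
    missing = [col for col in EXPECTED_COLUMNS if col not in df.columns]
    if missing:
        problems.append(f"missing columns: {missing}")
        return problems  # The remaining checks need every expected column
    if df.empty:
        problems.append("batch is empty")
    null_counts = df[EXPECTED_COLUMNS].isna().sum()
    for col, count in null_counts.items():
        if count > 0:
            problems.append(f"{int(count)} null value(s) in '{col}'")
    unexpected = set(df["target"].dropna().tolist()) - ALLOWED_LABELS
    if unexpected:
        problems.append(f"unexpected target labels: {sorted(unexpected)}")
    return problems


good_batch = pd.DataFrame({"feature1": [11, 12], "feature2": [1, 0], "target": [1, 0]})
bad_batch = pd.DataFrame({"feature1": [13, None], "feature2": [1, 0], "target": [1, 2]})

print(validate_new_data(good_batch))
print(validate_new_data(bad_batch))
```

Returning a list of problems rather than raising on the first one lets a retraining job log everything that is wrong with a batch in one pass.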

Example: Full Retraining with scikit-learn

This is the most common and robust approach.

    import pandas as pd
    from sklearn.linear_model import LogisticRegression
    import joblib  # For saving/loading models

    # --- 1. Set up initial data and model ---
    # Let's pretend this is our historical data
    initial_data = pd.DataFrame({
        'feature1': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
        'feature2': [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
        'target': [0, 1, 0, 1, 0, 1, 0, 1, 1, 1]
    })
    X_initial = initial_data[['feature1', 'feature2']]
    y_initial = initial_data['target']

    # Train initial model
    model_v1 = LogisticRegression()
    model_v1.fit(X_initial, y_initial)
    print(f"Initial model accuracy on initial data: {model_v1.score(X_initial, y_initial):.2f}")

    # Save the initial model
    joblib.dump(model_v1, 'model_v1.pkl')
    print("Model v1 saved.")

    # --- 2. Get new data and update the model ---
    # This is the new data that has come in since the last training
    new_data = pd.DataFrame({
        'feature1': [11, 12, 13, 14],
        'feature2': [1, 0, 1, 0],
        'target': [1, 0, 1, 0]  # Let's say the pattern is changing slightly
    })
    X_new = new_data[['feature1', 'feature2']]
    y_new = new_data['target']

    # Combine old and new data for retraining
    X_updated = pd.concat([X_initial, X_new], ignore_index=True)
    y_updated = pd.concat([y_initial, y_new], ignore_index=True)

    # Retrain the model on the combined dataset
    model_v2 = LogisticRegression()  # Start fresh with a new model instance
    model_v2.fit(X_updated, y_updated)
    # Note: this is accuracy on the training data itself, not on held-out data
    print(f"Updated model accuracy on combined data: {model_v2.score(X_updated, y_updated):.2f}")

    # Save the updated model
    joblib.dump(model_v2, 'model_v2.pkl')
    print("Model v2 saved.")
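
Accuracy measured on the same rows a model was trained on tends to be optimistic. The following sketch compares the old and the retrained model on a held-out test set before one replaces the other. The synthetic data from `make_classification`, the split sizes, and the "keep the better one" rule are illustrative assumptions, not part of the example above:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for real data (assumption for illustration only).
X, y = make_classification(n_samples=600, n_features=5, random_state=0)

# Hold out a test set that neither model sees during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Pretend the first 200 training rows are the "old" data and the rest arrived later.
X_old, y_old = X_train[:200], y_train[:200]

model_v1 = LogisticRegression(max_iter=1000).fit(X_old, y_old)
model_v2 = LogisticRegression(max_iter=1000).fit(X_train, y_train)  # old + new

acc_v1 = accuracy_score(y_test, model_v1.predict(X_test))
acc_v2 = accuracy_score(y_test, model_v2.predict(X_test))
print(f"v1 held-out accuracy: {acc_v1:.2f}")
print(f"v2 held-out accuracy: {acc_v2:.2f}")

# Only promote the retrained model if it is at least as good on held-out data.
chosen = model_v2 if acc_v2 >= acc_v1 else model_v1
print("Keeping:", "v2" if chosen is model_v2 else "v1")
```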

Example: Incremental Learning with SGDClassifier

Some models, like SGDClassifier in scikit-learn, support the partial_fit method, which allows for true incremental learning.

    from sklearn.linear_model import SGDClassifier
    from sklearn.preprocessing import StandardScaler
    import numpy as np

    # Data arrives in batches for partial_fit
    # Batch 1
    X_batch1 = np.array([[1, 0], [2, 1], [3, 0], [4, 1]])
    y_batch1 = np.array([0, 1, 0, 1])

    # Batch 2 (new data)
    X_batch2 = np.array([[5, 0], [6, 1], [7, 0], [8, 1]])
    y_batch2 = np.array([0, 1, 1, 1])  # Slightly different pattern

    # Initialize model and scaler
    # Note: the scaler is fitted on the first batch only and then reused as-is.
    # StandardScaler also has partial_fit if its statistics should be updated too.
    scaler = StandardScaler()
    model_incremental = SGDClassifier()

    # Fit on first batch; the first partial_fit call must list every class
    X_scaled_batch1 = scaler.fit_transform(X_batch1)
    model_incremental.partial_fit(X_scaled_batch1, y_batch1, classes=np.unique(y_batch1))

    # Update with second batch
    X_scaled_batch2 = scaler.transform(X_batch2)  # Use the *same* scaler
    model_incremental.partial_fit(X_scaled_batch2, y_batch2)
    print(f"Model updated incrementally. Accuracy on new batch: {model_incremental.score(X_scaled_batch2, y_batch2):.2f}")
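
One detail of `partial_fit` is easy to miss: the first call has to be told about every label the model may ever see, even if that first batch does not contain all of them. The sketch below uses randomly generated toy data; the helper `make_batch` and the labeling rule are invented purely for illustration:

```python
import numpy as np
from sklearn.linear_model import SGDClassifier

rng = np.random.default_rng(0)
ALL_CLASSES = np.array([0, 1])  # Every label the model may ever see


def make_batch(n_rows, only_class=None):
    """Generate a toy batch: the label is 1 when the first feature is positive."""
    X = rng.normal(size=(n_rows, 2))
    if only_class is not None:
        # Force all rows into one class by fixing the sign of the first feature.
        X[:, 0] = np.abs(X[:, 0]) * (1 if only_class == 1 else -1)
    y = (X[:, 0] > 0).astype(int)
    return X, y


model = SGDClassifier(random_state=0)

# The first batch happens to contain only class 0, so classes= must be explicit.
X_first, y_first = make_batch(20, only_class=0)
model.partial_fit(X_first, y_first, classes=ALL_CLASSES)

# Later batches contain both classes; classes= can now be omitted.
for _ in range(10):
    X_batch, y_batch = make_batch(50)
    model.partial_fit(X_batch, y_batch)

X_eval, y_eval = make_batch(200)
print("Known classes:", model.classes_)
print(f"Accuracy on a fresh batch: {model.score(X_eval, y_eval):.2f}")
```

Using `np.unique` on the first batch, as in the example above, works only when that batch happens to contain every class.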

Scenario 4: Saving and Loading Updated Models (Best Practice)

When you update a model, you'll almost always want to save it for later use in an application or for future retraining.

Best Practice: Save the Model and its Dependencies

Don't just save the model object. Save any preprocessing steps (like scalers) and the version of the libraries you used.

    import datetime
    import joblib
    import pandas as pd
    import sklearn
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.preprocessing import StandardScaler

    # 1. Prepare Data
    data = pd.DataFrame({
        'feature1': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
        'feature2': [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
        'target': [0, 1, 0, 1, 0, 1, 0, 1, 1, 1]
    })
    X = data[['feature1', 'feature2']]
    y = data['target']

    # 2. Preprocess Data
    scaler = StandardScaler()
    X_scaled = scaler.fit_transform(X)

    # 3. Train and Save the Model
    model = RandomForestClassifier(random_state=42)
    model.fit(X_scaled, y)

    # Create a metadata dictionary
    metadata = {
        'model_version': '1.0',
        'date_created': datetime.datetime.now().isoformat(),
        'libraries': {
            # Record the versions actually installed rather than hard-coding them
            'scikit-learn': sklearn.__version__,
            'pandas': pd.__version__
        },
        'preprocessing': 'StandardScaler was used.'
    }

    # Save everything into a single dictionary
    model_package = {
        'model': model,
        'scaler': scaler,
        'metadata': metadata
    }
    joblib.dump(model_package, 'production_model_v1.0.joblib')
    print("Model package saved successfully!")
    print("Metadata:", model_package['metadata'])

To load and use the model later:

    import joblib
    import pandas as pd

    # Load the model package
    loaded_package = joblib.load('production_model_v1.0.joblib')
    loaded_model = loaded_package['model']
    loaded_scaler = loaded_package['scaler']
    metadata = loaded_package['metadata']

    # Use the loaded model for prediction
    # The new data must go through the same scaler that was used in training
    new_data = pd.DataFrame({'feature1': [2.5], 'feature2': [0.5]})
    new_data_scaled = loaded_scaler.transform(new_data)
    prediction = loaded_model.predict(new_data_scaled)
    print(f"\nPrediction for new data {new_data.values}: {prediction[0]}")
    print(f"Loaded model metadata: {metadata}")
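
An alternative to a hand-built dictionary, not used in the example above, is to wrap the scaler and the model in a scikit-learn `Pipeline`, so that a single object carries both steps. This is a sketch under that assumption; the step names and the file name are arbitrary:

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy data mirroring the example above.
X = np.array([[1, 0], [2, 1], [3, 0], [4, 1], [5, 0],
              [6, 1], [7, 0], [8, 1], [9, 0], [10, 1]])
y = np.array([0, 1, 0, 1, 0, 1, 0, 1, 1, 1])

# The pipeline scales and then classifies, so the two steps cannot drift apart.
pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("model", RandomForestClassifier(random_state=42)),
])
pipeline.fit(X, y)

# Save to a temporary directory (hypothetical file name) and load it back.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "pipeline_v1.joblib")
    joblib.dump(pipeline, path)
    loaded_pipeline = joblib.load(path)

# Raw, unscaled input goes straight in; the pipeline applies the scaler itself.
sample = np.array([[2.5, 0.5]])
print("Original pipeline:", pipeline.predict(sample))
print("Loaded pipeline:  ", loaded_pipeline.predict(sample))
```

Metadata such as library versions would still need to be stored alongside the pipeline, for example in the dictionary format shown earlier.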

Summary: Which "Update" do you need?

| Your Goal | Solution |
|---|---|
| Change data in my custom object | Directly assign new values to the object's attributes (e.g., my_model.name = "New Name"). |
| Upgrade a library like pandas or scikit-learn | Use pip install --upgrade <library_name> or conda update <library_name>. |
| Improve my ML model with new data | Retrain the model by combining old and new data, or use incremental learning with partial_fit. |
| Save my updated model for later use | Use joblib or pickle to save the model object, and also save any preprocessors and metadata. |