Of course! This is a fundamental and crucial topic in Java development. Let's break down the relationship between UTF-8, Unicode, and how Java handles them.

The Core Concepts: An Analogy

Think of it like this:

- Unicode: This is a giant, universal catalog. It assigns a unique number (a "code point") to every character in every language, including symbols, emojis, and even ancient scripts. For example, A is U+0041, 你 is U+4F60, and 😊 is U+1F60A. This catalog is the goal: a universal way to identify text.
- UTF-8: This is a shipping container, or encoding scheme. It's a set of rules for packing those Unicode code points into actual bytes for storage or transmission. It's a "variable-width" encoding, meaning it uses 1, 2, 3, or 4 bytes per character depending on the code point. It's the most popular encoding on the web and in modern systems.
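A minimal sketch, assuming Java 8 or later, that prints each example character's code point and how many bytes UTF-8 needs for it. The class and method names are illustrative. The characters are written as Unicode escapes so the result does not depend on the source file's encoding.

```java
import java.nio.charset.StandardCharsets;

public class CodePointsAndUtf8 {

    // Formats a code point in U+XXXX notation (at least four hex digits).
    static String toUPlus(int codePoint) {
        return String.format("U+%04X", codePoint);
    }

    // Number of bytes the string occupies when encoded as UTF-8.
    static int utf8Length(String s) {
        return s.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        // 'A', the CJK character for "you", and the emoji, written as escapes.
        String[] samples = {"A", "\u4F60", "\uD83D\uDE0A"};
        for (String s : samples) {
            int cp = s.codePointAt(0); // the code point, i.e. the catalog number
            System.out.println(toUPlus(cp) + ": " + utf8Length(s) + " byte(s) in UTF-8");
        }
        // Expected: U+0041 -> 1 byte, U+4F60 -> 3 bytes, U+1F60A -> 4 bytes
    }
}
```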

Java's Role: Java was designed from the very beginning to be "Unicode-friendly." Its char type was originally intended to hold a single Unicode character, because early Unicode fit in 16 bits. However, Unicode later grew beyond 65,536 code points, and Java had to handle characters that no longer fit in one char.

Unicode in Java: The char Data Type

In Java, the char data type is a 16-bit unsigned integer. It's designed to store a single UTF-16 code unit.

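- What is a UTF-16 code unit? UTF-16 is another encoding scheme, like UTF-8, but its building block is a 16-bit code unit. It is also variable-width: characters in the Basic Multilingual Plane take one code unit (2 bytes), and all other characters take two code units (4 bytes).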
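To see UTF-16's variable width directly, this sketch (hypothetical class name, Java 7+) counts the bytes produced by the UTF_16BE charset. The big-endian variant is chosen because the plain UTF_16 charset prepends a 2-byte byte-order mark when encoding, which would distort the counts.

```java
import java.nio.charset.StandardCharsets;

public class Utf16Sizes {

    // Bytes needed in UTF-16 (big-endian, so no byte-order mark is added).
    static int utf16Bytes(String s) {
        return s.getBytes(StandardCharsets.UTF_16BE).length;
    }

    public static void main(String[] args) {
        String a = "A";                // U+0041, inside the BMP
        String smile = "\uD83D\uDE0A"; // U+1F60A, outside the BMP
        System.out.println(utf16Bytes(a));     // 2 bytes: one code unit
        System.out.println(utf16Bytes(smile)); // 4 bytes: two code units
    }
}
```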

Here's the critical distinction:

- Code Point: A unique number in the Unicode catalog (e.g., U+1F60A for the "smiling face with smiling eyes" emoji 😊).
- Code Unit: The 16-bit chunk UTF-16 uses to represent a character. One code point needs one or two code units.

For code points U+0000 through U+FFFF (the first 65,536 code points, known as the "Basic Multilingual Plane" or BMP), a single char holds the character directly. For example:

```java
char a = 'A'; // The char 'A' is stored as the 16-bit value 0x0041.
```
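A small sketch showing that BMP characters fit in a single char and that a char tops out at U+FFFF. The class and helper names are illustrative. Character.isBmpCodePoint requires Java 7 or later.

```java
public class BmpChars {

    // Shows the numeric value of a char in U+XXXX notation.
    static String hex(char c) {
        return String.format("U+%04X", (int) c);
    }

    public static void main(String[] args) {
        char a = 'A';
        char ni = '\u4F60'; // the CJK character for "you", also inside the BMP
        System.out.println(hex(a));  // U+0041
        System.out.println(hex(ni)); // U+4F60

        // A char is 16 bits wide, so its largest value is U+FFFF, the top of the BMP.
        System.out.println(hex(Character.MAX_VALUE));          // U+FFFF
        System.out.println(Character.isBmpCodePoint(0x4F60));  // true
        System.out.println(Character.isBmpCodePoint(0x1F60A)); // false
    }
}
```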

The Problem: Supplementary Characters

What about characters outside the BMP, such as emojis (😊 is U+1F60A) or some rare Chinese/Japanese/Korean characters?

These characters have code points higher than U+FFFF. UTF-16 represents these characters using a surrogate pair: a pair of char values.

- The first char is the high surrogate (in the range U+D800 to U+DBFF).
- The second char is the low surrogate (in the range U+DC00 to U+DFFF).

Example: The Emoji '😊' (U+1F60A)

- Its code point is U+1F60A.
- In UTF-16, it is represented by the surrogate pair:
  - High surrogate: U+D83D (decimal: 55357)
  - Low surrogate: U+DE0A (decimal: 56842)
- In Java, a single char is not enough to hold it. Use a String (or a char array of length 2), or keep the code point itself in an int.
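The pair is computed by subtracting 0x10000 from the code point and splitting the remaining 20 bits into two halves. Here 0x1F60A - 0x10000 = 0xF60A. Its top 10 bits (0x3D) are added to 0xD800, giving 0xD83D, and its bottom 10 bits (0x20A) are added to 0xDC00, giving 0xDE0A. The sketch below (illustrative names) does this by hand and checks the result against the JDK's Character.highSurrogate and Character.lowSurrogate (Java 7+).

```java
public class SurrogatePair {

    // Valid only for supplementary code points (U+10000 to U+10FFFF).
    static char high(int codePoint) {
        // Top 10 of the 20 remaining bits, offset into the high-surrogate range.
        return (char) (0xD800 + ((codePoint - 0x10000) >> 10));
    }

    static char low(int codePoint) {
        // Bottom 10 bits, offset into the low-surrogate range.
        return (char) (0xDC00 + ((codePoint - 0x10000) & 0x3FF));
    }

    public static void main(String[] args) {
        int cp = 0x1F60A;
        System.out.printf("%X %X%n", (int) high(cp), (int) low(cp)); // D83D DE0A

        // The JDK performs the same computation.
        System.out.println(high(cp) == Character.highSurrogate(cp)); // true
        System.out.println(low(cp) == Character.lowSurrogate(cp));   // true

        // Rebuilding the code point from the pair.
        System.out.printf("%X%n", Character.toCodePoint(high(cp), low(cp))); // 1F60A
    }
}
```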

```java
public class EmojiLength {

    public static void main(String[] args) {
        // The WRONG way: a char literal cannot hold a character outside the BMP.
        // char emoji = '😊'; // does not compile: the emoji needs two chars (a surrogate pair)

        // The correct way: store it in a String.
        String emojiString = "😊";

        // Through the String API this is two chars: '\uD83D', '\uDE0A'.
        // (Since Java 9 the storage is a compact byte array, but length() and
        // charAt() still work in UTF-16 code units.)
        // It takes TWO Java char values to represent ONE Unicode character.
        System.out.println(emojiString.length()); // Output: 2 (two UTF-16 code units)
        System.out.println(emojiString.codePointCount(0, emojiString.length())); // Output: 1 (one code point)
    }
}
```

Key Takeaway for char:

- A Java char holds a 16-bit UTF-16 code unit, not necessarily a full Unicode character.
- For characters outside the BMP (like emojis), you need a surrogate pair (two chars) to represent a single character.
- Use String.codePointCount() to count Unicode code points. String.length() counts UTF-16 code units. (Even code points are not always what a user perceives as one character: an "é" written as "e" plus a combining accent is two code points.)
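One practical consequence is that cutting a string by char index can split a surrogate pair. Here is a minimal sketch of code-point-safe truncation using offsetByCodePoints. Note that truncate is a hypothetical helper, not a JDK method.

```java
public class SafeTruncate {

    // Returns at most maxCodePoints code points from the start of s,
    // never cutting a surrogate pair in half.
    static String truncate(String s, int maxCodePoints) {
        if (s.codePointCount(0, s.length()) <= maxCodePoints) {
            return s;
        }
        int end = s.offsetByCodePoints(0, maxCodePoints); // char index after N code points
        return s.substring(0, end);
    }

    public static void main(String[] args) {
        String s = "Hi\uD83D\uDE0A"; // "Hi" followed by the emoji U+1F60A

        // Cutting by char index leaves a lone high surrogate at the end.
        String broken = s.substring(0, 3);
        System.out.println(Character.isHighSurrogate(broken.charAt(2))); // true

        // Cutting by code point keeps the pair intact or drops it entirely.
        System.out.println(truncate(s, 3).equals(s)); // true
        System.out.println(truncate(s, 2));           // Hi
    }
}
```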

UTF-8 in Java: The Practical Reality

While Java's String API is built on UTF-16, UTF-8 is the de facto standard for I/O (Input/Output): reading from files, writing to the network, reading HTTP requests, etc.

Java provides robust tools to handle UTF-8 seamlessly, but you must configure them correctly. The biggest pitfall is relying on the platform's default encoding.

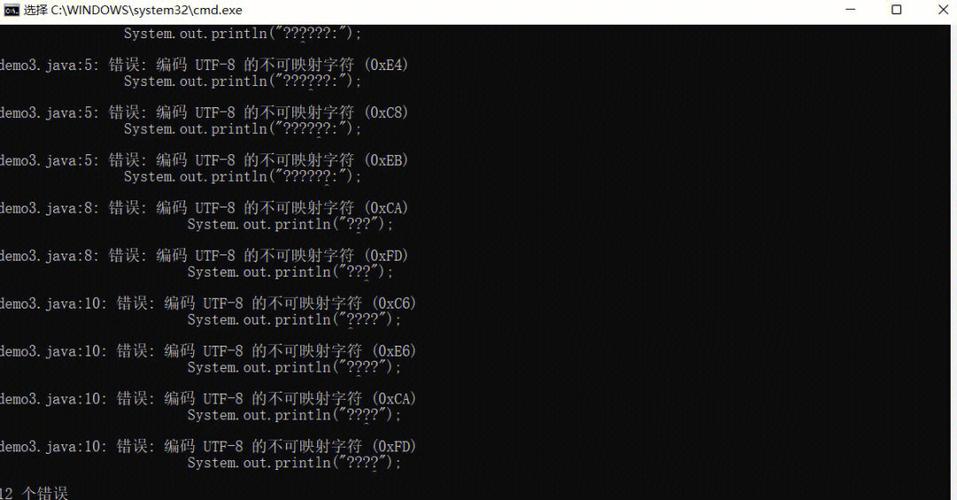

The Pitfall: Default Charset

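If you don't specify an encoding, Java uses the default charset. Before JDK 18, that default came from the platform, which made it a major source of bugs:

- On a US Windows machine, it was typically Cp1252 (windows-1252).
- On a Linux/macOS machine, it was usually UTF-8.
- This means code that works on your machine might break on a server or a colleague's machine.

Since JDK 18 (JEP 400), the default charset is UTF-8 on every platform. However, System.out and System.err still use the platform's native or console encoding, and many deployments still run older JDKs.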

Always specify the encoding explicitly!
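Here is a minimal sketch of what goes wrong when the writer and the reader disagree: the text is encoded as UTF-8 and decoded as ISO-8859-1, so "café" comes back as "cafÃ©". ISO-8859-1 stands in for a legacy default such as Cp1252, which is an assumption that works here because both decode these two bytes the same way. The roundTrip helper is illustrative.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class MojibakeDemo {

    // Encodes with one charset and decodes with another, which is what happens
    // when the writer and the reader silently use different defaults.
    static String roundTrip(String text, Charset writeAs, Charset readAs) {
        byte[] bytes = text.getBytes(writeAs);
        return new String(bytes, readAs);
    }

    public static void main(String[] args) {
        // "cafe" with an acute accent; U+00E9 is encoded in UTF-8 as the bytes C3 A9.
        String original = "caf\u00E9";

        // Same charset on both sides: the text survives unchanged.
        System.out.println(roundTrip(original, StandardCharsets.UTF_8, StandardCharsets.UTF_8));

        // Mismatched charsets: each UTF-8 byte becomes its own Latin-1 character,
        // giving "caf" + U+00C3 + U+00A9.
        System.out.println(roundTrip(original, StandardCharsets.UTF_8, StandardCharsets.ISO_8859_1));
    }
}
```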

Best Practices for UTF-8 in Java

Reading and Writing Files

Use InputStreamReader and OutputStreamWriter and pass StandardCharsets.UTF_8 to them.

```java
import java.io.*;
import java.nio.charset.StandardCharsets;

public class FileExample {

    public static void main(String[] args) {
        String content = "Hello Java! 你好世界! 😊";
        String fileName = "test.txt";

        // --- Writing to a file in UTF-8 ---
        try (BufferedWriter writer = new BufferedWriter(
                new OutputStreamWriter(new FileOutputStream(fileName), StandardCharsets.UTF_8))) {
            writer.write(content);
            System.out.println("File written successfully with UTF-8.");
        } catch (IOException e) {
            e.printStackTrace();
        }

        // --- Reading from a file in UTF-8 ---
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(new FileInputStream(fileName), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                System.out.println("Read from file: " + line);
                System.out.println("Length of string: " + line.length()); // 20 UTF-16 code units
                System.out.println("Number of code points: " + line.codePointCount(0, line.length())); // 19
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```
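On Java 11 or later, java.nio.file.Files offers a shorter path for small files. This sketch (illustrative class and method names) writes and reads the same content with an explicit charset via Files.writeString and Files.readString. It uses a temporary file so it cleans up after itself.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class NioFileExample {

    // Writes text as UTF-8 and reads it back (Files.writeString/readString need Java 11+).
    static String writeAndRead(Path file, String content) throws IOException {
        Files.writeString(file, content, StandardCharsets.UTF_8);
        return Files.readString(file, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("utf8-demo", ".txt");
        try {
            // Same text as above, written with escapes for the CJK characters and the emoji.
            String content = "Hello Java! \u4F60\u597D\u4E16\u754C! \uD83D\uDE0A";
            String back = writeAndRead(file, content);
            System.out.println(back.equals(content)); // true
            System.out.println(Files.size(file));     // 30 bytes: 14 ASCII + 4 x 3 + 4
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```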

Reading from Standard Input (Console)

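The Scanner constructor accepts a charset name (and, since Java 10, a Charset object). This only decodes correctly if the terminal actually sends UTF-8 bytes. On Windows, that typically means switching the console to code page 65001 first.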

```java
import java.util.Scanner;
import java.nio.charset.StandardCharsets;

public class ScannerExample {

    public static void main(String[] args) {
        System.out.println("Enter some text with accents or emojis:");

        // try-with-resources closes the scanner, which also closes System.in;
        // that is fine here because the program reads input only once.
        try (Scanner scanner = new Scanner(System.in, StandardCharsets.UTF_8.name())) {
            String userInput = scanner.nextLine();
            System.out.println("You entered: " + userInput);
        }
    }
}
```
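Because console behavior varies by terminal, it can help to test the decoding step in isolation. This sketch feeds UTF-8 bytes to a Scanner through a ByteArrayInputStream instead of System.in. The firstLine method is a hypothetical helper.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.Scanner;

public class ScannerDecodeExample {

    // Returns the first line of the stream, decoding the bytes as UTF-8,
    // or an empty string if the stream has no lines.
    static String firstLine(InputStream in) {
        Scanner scanner = new Scanner(in, StandardCharsets.UTF_8.name());
        return scanner.hasNextLine() ? scanner.nextLine() : "";
    }

    public static void main(String[] args) {
        // Simulated console input: "naive" with a diaeresis on the i, a space, and U+1F60A.
        byte[] input = "na\u00EFve \uD83D\uDE0A\nsecond line\n".getBytes(StandardCharsets.UTF_8);
        String line = firstLine(new ByteArrayInputStream(input));
        System.out.println(line.length());                         // 8 UTF-16 code units
        System.out.println(line.codePointCount(0, line.length())); // 7 code points
    }
}
```

The helper deliberately does not close the Scanner. Closing a Scanner also closes the underlying stream, which for System.in would prevent any later reads.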

Command-Line Arguments

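The JVM decodes command-line arguments (and file names) with the operating system's native encoding, not with the default charset. On Linux, that encoding comes from the locale settings (LANG/LC_ALL), so run the program under a UTF-8 locale:

```shell
# On Linux/macOS: run under a UTF-8 locale (any installed UTF-8 locale works)
LC_ALL=en_US.UTF-8 java MyProgram "你好世界"

# On Windows (Command Prompt): switch the console to code page 65001 (UTF-8)
chcp 65001
java MyProgram "你好世界"
```

Setting the file.encoding system property (-Dfile.encoding=UTF-8) makes file I/O consistent on JDKs older than 18, but it does not change how arguments or file names are decoded. On Windows, arguments typically follow the system ANSI code page, which chcp does not change.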
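When arguments arrive garbled, it helps to see which encodings the JVM actually picked up. This is a diagnostic sketch with illustrative names. Note that sun.jnu.encoding is a JDK-internal property that may be absent on some JVMs, and native.encoding exists only on JDK 17 and later. System.getProperty returns null for anything missing.

```java
import java.nio.charset.Charset;

public class EncodingDiagnostics {

    // Lists each code point as U+XXXX so a mis-decoded argument is easy to spot.
    static String codePoints(String s) {
        StringBuilder sb = new StringBuilder();
        s.codePoints().forEach(cp -> sb.append(String.format("U+%04X ", cp)));
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println("default charset:  " + Charset.defaultCharset());
        System.out.println("file.encoding:    " + System.getProperty("file.encoding"));
        // JDK 17+ only; null on older versions.
        System.out.println("native.encoding:  " + System.getProperty("native.encoding"));
        // JDK-internal and possibly absent; governs arguments and file names.
        System.out.println("sun.jnu.encoding: " + System.getProperty("sun.jnu.encoding"));

        for (String arg : args) {
            System.out.println(arg + " -> " + codePoints(arg));
        }
    }
}
```

Passing "你好" as an argument under a correctly configured locale should list U+4F60 U+597D. Question marks (U+003F) or replacement characters (U+FFFD) point to a decoding problem before your code ever runs.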

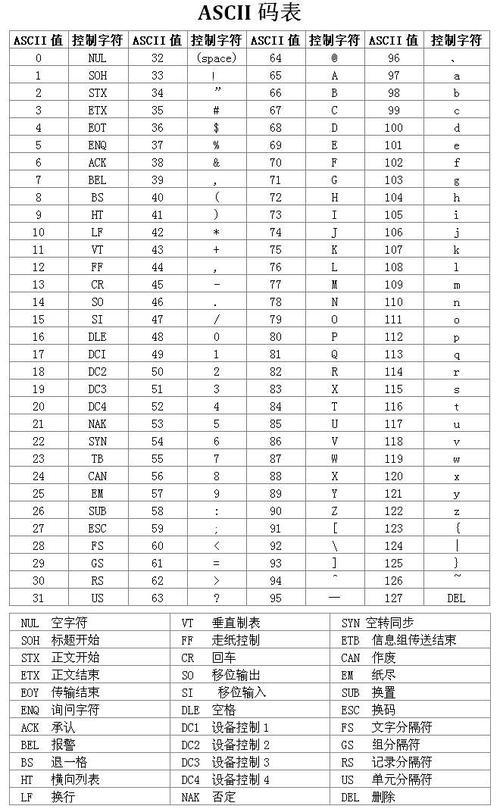

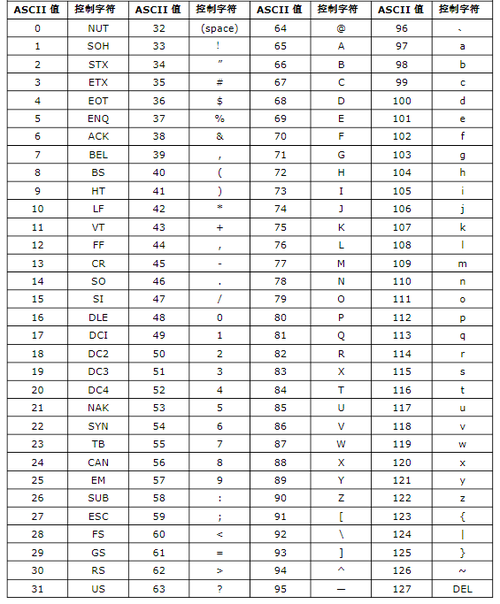

Summary Table

| Concept | Description | Java Implementation |
|---|---|---|
| Unicode | A universal character set that assigns a unique number (code point) to every character. | It's the standard Java aims for. The char type was designed based on it. |
| UTF-16 | An encoding that uses one 16-bit code unit (2 bytes) for BMP characters and two code units (4 bytes) for all others. Java's String and char are defined in terms of it. | String is a sequence of UTF-16 code units. char is a single 16-bit code unit. Characters outside the BMP (e.g., emojis) take a surrogate pair (two chars). |
| UTF-8 | A variable-width encoding (1-4 bytes per character). It's the standard for I/O (files, network). | Use InputStreamReader/OutputStreamWriter with StandardCharsets.UTF_8. Never rely on the default charset (it is UTF-8 everywhere only since JDK 18). -Dfile.encoding=UTF-8 sets the default charset, but not how command-line arguments or file names are decoded. |

Final Recommendations

- Internal Logic: Trust Java's String and char handling. For most application logic, you don't need to worry about the internal UTF-16 representation. Just use String methods.
- I/O is Key: The most important place to be explicit about encoding is when your code interacts with the outside world (files, network, user input).
- Be Explicit: Always specify StandardCharsets.UTF_8 when reading or writing. Avoid constructors that don't take a charset, such as the classic FileReader(String) and FileWriter(String) constructors.
- Handle Surrogates: If you are processing text at a low level (e.g., iterating through a String), use code point methods (codePointAt(), codePointCount(), offsetByCodePoints()) instead of charAt() and length() to correctly handle supplementary characters.
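Here is a minimal sketch of the code-point loop described above, plus the equivalent stream form (String.codePoints(), Java 8+). The name splitCodePoints is illustrative.

```java
import java.util.ArrayList;
import java.util.List;

public class CodePointIteration {

    // Walks the string one code point at a time, advancing by 1 or 2 chars.
    static List<String> splitCodePoints(String s) {
        List<String> parts = new ArrayList<>();
        for (int i = 0; i < s.length(); ) {
            int cp = s.codePointAt(i);              // combines a surrogate pair if present
            parts.add(new String(Character.toChars(cp)));
            i += Character.charCount(cp);           // 2 for supplementary, else 1
        }
        return parts;
    }

    public static void main(String[] args) {
        String s = "A\u4F60\uD83D\uDE0A"; // 'A', a CJK character, and the emoji U+1F60A
        System.out.println(s.length());                // 4 chars
        System.out.println(splitCodePoints(s).size()); // 3 code points

        // The same traversal with the stream API; prints U+0041, U+4F60, U+1F60A.
        s.codePoints().forEach(cp -> System.out.printf("U+%04X%n", cp));
    }
}
```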