In pandas, the value_counts() method is an essential tool for data analysis, used primarily on Series objects. It provides a quick and efficient way to count how many times each unique value occurs in a dataset.

Here’s a comprehensive guide covering what it is, how to use it, and common use cases.

What is value_counts()?

value_counts() is a Pandas method that returns a new Series containing the counts of unique values. The resulting Series is sorted in descending order by default, making it easy to see the most frequent values first.
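To make the return type concrete, here is a small sketch (reusing the fruit data introduced below) showing that the result is an ordinary Series indexed by the unique values, so you can look up counts by label:

```python
import pandas as pd

fruits = pd.Series(['apple', 'banana', 'apple', 'orange', 'banana', 'apple', 'grape'])
counts = fruits.value_counts()

# The result is an ordinary Series: unique values in the index, counts as values
print(type(counts).__name__)   # Series
print(counts['apple'])         # 3  (look up a single count by label)
print(counts.index[0])         # apple  (first row is the most frequent value)
print(counts.idxmax())         # apple  (same answer via idxmax())
```

Because the default result is sorted in descending order, `counts.index[0]` and `counts.idxmax()` agree here; if you pass `sort=False`, only `idxmax()` still points at the most frequent value.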

Basic Usage on a Pandas Series

Let's start with the simplest case: a list of items.

Setup

First, make sure you have Pandas installed:

```bash
pip install pandas
```

Now, let's import Pandas and create a sample Series.

```python
import pandas as pd
import numpy as np  # Used later for np.nan (missing values)

# Create a Pandas Series from a list of fruits
fruits = pd.Series(['apple', 'banana', 'apple', 'orange', 'banana', 'apple', 'grape'])

print("Original Series:")
print(fruits)
```

Output:

```
Original Series:
0 apple
1 banana
2 apple
3 orange
4 banana
5 apple
6 grape
dtype: object
```

The Simplest Case

Now, let's apply value_counts() to our fruits Series.

```python
# Count the occurrences of each unique value
fruit_counts = fruits.value_counts()

print("\nValue Counts:")
print(fruit_counts)
```

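Output:

```
Value Counts:
apple 3
banana 2
orange 1
grape 1
dtype: int64
```

(The outputs in this guide use the pre-2.0 pandas format. Since pandas 2.0, the result Series is named count, or proportion when normalize=True, so the last line reads Name: count, dtype: int64.)

As you can see, Pandas has automatically:

- Identified the unique values (apple, banana, orange, grape).
- Counted how many times each one appeared.
- Sorted the results in descending order of frequency.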
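The three steps above can be reproduced by hand as a rough sketch. This only illustrates what the result contains; it is not how pandas implements value_counts() internally, and because the loop scans the data once per unique value it is much slower on real data:

```python
import pandas as pd

fruits = pd.Series(['apple', 'banana', 'apple', 'orange', 'banana', 'apple', 'grape'])

# Step 1: identify the unique values (in order of first appearance)
uniques = fruits.unique()

# Step 2: count how many times each unique value appears
manual = pd.Series([(fruits == value).sum() for value in uniques], index=uniques)

# Step 3: sort by count, largest first; mergesort is stable,
# so tied values keep their order of first appearance
manual = manual.sort_values(ascending=False, kind='mergesort')

print(manual)
```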

Key Parameters and Their Use

value_counts() is powerful because of its flexible parameters.

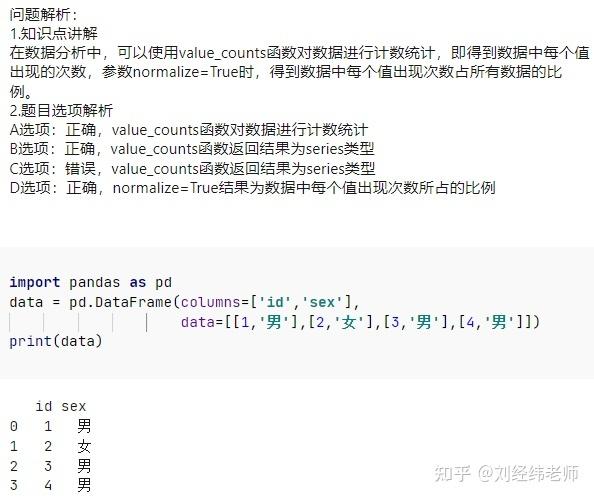

normalize: bool (Default: False)

Instead of raw counts, return relative frequencies: proportions between 0 and 1 that sum to 1. Multiply by 100 if you want percentages.

```python
# Get the proportion of each fruit
fruit_proportions = fruits.value_counts(normalize=True)

print("\nNormalized Value Counts (Proportions):")
print(fruit_proportions)
```

Output:

```
Normalized Value Counts (Proportions):
apple 0.428571
banana 0.285714
orange 0.142857
grape 0.142857
dtype: float64
```

This is useful for understanding the distribution of your data as shares of the whole.
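A quick sketch of what normalize=True computes when the data has no missing values: each count divided by the total number of rows. The variable names manual and percentages are just illustrative:

```python
import pandas as pd

fruits = pd.Series(['apple', 'banana', 'apple', 'orange', 'banana', 'apple', 'grape'])

counts = fruits.value_counts()
proportions = fruits.value_counts(normalize=True)

# With no missing values, each proportion is simply count / total number of rows
manual = counts / len(fruits)
print((proportions - manual).abs().max())

# Proportions sum to 1; multiply by 100 (and round) for readable percentages
percentages = (proportions * 100).round(1)
print(percentages)
```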

sort: bool (Default: True)

If False, the result will be ordered by the order of appearance in the original data, not by frequency.

```python
# Count without sorting
fruit_counts_unsorted = fruits.value_counts(sort=False)

print("\nUnsorted Value Counts:")
print(fruit_counts_unsorted)
```

Output:

```
Unsorted Value Counts:
apple 3
banana 2
orange 1
grape 1
dtype: int64
```

(In this specific case the output looks the same, because the fruits happen to first appear in the data in the same order as their frequency ranking: apple, banana, orange, grape. If 'orange' were the first value in the data, it would be listed first.)
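Here is a sketch of the "orange comes first" scenario described above, using a small made-up basket Series. If you need a guaranteed order that does not depend on frequency, sorting the labels explicitly with sort_index() is the safer choice:

```python
import pandas as pd

# Made-up data where the first value ('orange') is NOT the most frequent
basket = pd.Series(['orange', 'apple', 'banana', 'apple', 'apple', 'banana'])

# sort=False skips the frequency sort, so 'orange' is not pushed to the end
print(basket.value_counts(sort=False))

# For a predictable, frequency-independent order, sort the labels explicitly
print(basket.value_counts().sort_index())   # alphabetical: apple, banana, orange
```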

ascending: bool (Default: False)

If True, the result is sorted in ascending order (smallest to largest).

```python
# Count and sort in ascending order
fruit_counts_asc = fruits.value_counts(ascending=True)

print("\nAscending Value Counts:")
print(fruit_counts_asc)
```

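Output:

```
Ascending Value Counts:
grape 1
orange 1
banana 2
apple 3
dtype: int64
```

Note that grape and orange are tied at 1, so their relative order should not be relied on.

dropna: bool (Default: True)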

By default, NaN (Not a Number) values are excluded from the count. Set dropna=False to include them.

```python
# Create a Series with missing values (NaN)
data_with_nan = pd.Series(['A', 'B', np.nan, 'A', np.nan, 'C'])

# Default behavior (dropna=True)
print("\nDefault Value Counts (NaNs dropped):")
print(data_with_nan.value_counts())

# Include NaNs in the count
print("\nValue Counts with NaNs included:")
print(data_with_nan.value_counts(dropna=False))
```

Output:

```
Default Value Counts (NaNs dropped):
A 2
B 1
C 1
dtype: int64

Value Counts with NaNs included:
A 2
NaN 2
B 1
C 1
dtype: int64
```

Common Use Cases

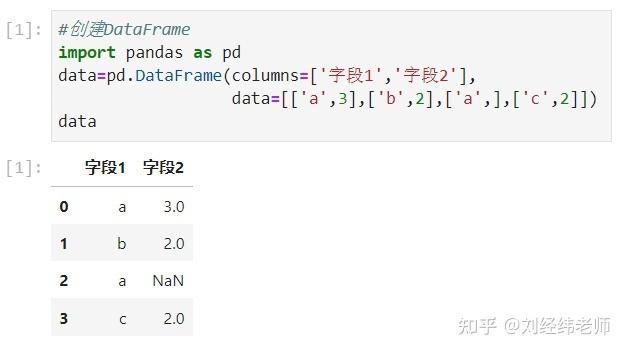

Use Case 1: Exploring a Categorical Column in a DataFrame

This is the most common use case. Imagine you have a dataset of survey responses.

```python
# Create a sample DataFrame
df = pd.DataFrame({
    'country': ['USA', 'UK', 'Germany', 'USA', 'Japan', 'UK', 'USA', 'Germany', 'USA'],
    'age': [25, 30, 45, 22, 38, 29, 35, 50, 28]
})

# Count the responses for each country
country_counts = df['country'].value_counts()

print("Survey Responses by Country:")
print(country_counts)
```

Output:

```
Survey Responses by Country:
USA 4
UK 2
Germany 2
Japan 1
Name: country, dtype: int64
```

This immediately tells you that the USA was the most common response.
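A common follow-up is to show counts and percentage shares side by side. The sketch below combines two value_counts() calls with pd.concat; the column names count and percent are illustrative choices, not anything pandas requires:

```python
import pandas as pd

df = pd.DataFrame({
    'country': ['USA', 'UK', 'Germany', 'USA', 'Japan', 'UK', 'USA', 'Germany', 'USA'],
    'age': [25, 30, 45, 22, 38, 29, 35, 50, 28],
})

# Raw counts and percentage shares for the same column
counts = df['country'].value_counts()
shares = df['country'].value_counts(normalize=True) * 100

# Both Series are indexed by country name, so concat aligns them row by row
summary = pd.concat([counts.rename('count'), shares.round(1).rename('percent')], axis=1)
print(summary)
```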

Use Case 2: Finding and Handling Data Entry Errors

Let's say you have a column that should only contain 'Yes' or 'No', but you suspect there are typos.

```python
# DataFrame with potential data entry errors
df_responses = pd.DataFrame({
    'subscription_status': ['Yes', 'No', 'Yes', 'yes', 'Maybe', 'No', 'N/A', 'Yes', 'no']
})

# See the unique values and their frequencies
status_counts = df_responses['subscription_status'].value_counts()

print("\nSubscription Status Counts:")
print(status_counts)
```

Output:

```
Subscription Status Counts:
Yes 3
No 2
yes 1
no 1
Maybe 1
N/A 1
Name: subscription_status, dtype: int64
```

The output clearly reveals the issues:

- Case sensitivity: 'Yes' and 'yes' are counted separately.

- Invalid entries: 'Maybe' and 'N/A' are not valid statuses.

You can now use this information to clean your data (e.g., convert everything to lowercase, or replace invalid values with NaN).
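One possible cleanup, sketched under the assumption that only 'yes' and 'no' are valid statuses: lowercase the text, turn everything else into NaN, and re-run value_counts() to confirm. The new column name subscription_status_clean is hypothetical:

```python
import pandas as pd

df_responses = pd.DataFrame({
    'subscription_status': ['Yes', 'No', 'Yes', 'yes', 'Maybe', 'No', 'N/A', 'Yes', 'no']
})

# 1. Fix case sensitivity (and stray whitespace) so 'Yes' and 'yes' become one value
status = df_responses['subscription_status'].str.strip().str.lower()

# 2. Keep only the valid statuses; anything else ('maybe', 'n/a') becomes NaN
valid = ['yes', 'no']
status = status.where(status.isin(valid))

# Hypothetical column name for the cleaned values
df_responses['subscription_status_clean'] = status

# 3. Re-check with dropna=False so the invalid entries stay visible as NaN
print(df_responses['subscription_status_clean'].value_counts(dropna=False))
```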

Use Case 3: Quick Data Profiling

value_counts() is a fantastic first step to understand any categorical column in your dataset. It gives you a sense of the distribution, most common values, and potential data quality issues at a glance.
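For profiling several columns at once, a small loop is enough. The profile_columns helper and the survey data below are hypothetical, made up for illustration:

```python
import pandas as pd

def profile_columns(df, columns, top_n=5):
    """Hypothetical helper: print and return the top_n values (including NaN) per column."""
    report = {}
    for col in columns:
        # dropna=False so missing data shows up in the profile
        counts = df[col].value_counts(dropna=False).head(top_n)
        report[col] = counts
        print(f"--- {col}: {df[col].nunique(dropna=False)} distinct values ---")
        print(counts, end="\n\n")
    return report

# Made-up sample data with a few missing entries
survey = pd.DataFrame({
    'country': ['USA', 'UK', 'Germany', 'USA', None, 'UK'],
    'plan': ['free', 'pro', 'free', 'free', 'pro', None],
})
report = profile_columns(survey, ['country', 'plan'])
```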

Important Considerations

NaN vs. None

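- np.nan (Not a Number) is a special floating-point value that NumPy and pandas use to represent missing data. When dropna=False, all NaN entries are grouped into a single NaN row, as in the example above.
- In a numeric Series, None is converted to NaN automatically. In an object-dtype Series it may be stored as None, but pandas still treats it as missing.
- Both are excluded by default (dropna=True).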
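A short sketch of how the two behave. The labels Series is created with dtype=object explicitly so that None is kept as-is (newer pandas versions may otherwise store missing strings as NaN):

```python
import numpy as np
import pandas as pd

# Numeric data: None is converted to NaN and the dtype becomes float64
numbers = pd.Series([1, None, 3])
print(numbers.dtype)              # float64
print(numbers.isna().tolist())    # [False, True, False]

# Object data: None is stored as-is, but pandas still treats it as missing
labels = pd.Series(['A', None, np.nan, 'A'], dtype=object)
print(labels.isna().sum())        # 2  (both None and np.nan count as missing)
print(labels.value_counts())      # only 'A' appears; missing values are dropped by default
```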

Performance on Large Data

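For very large datasets, keep in mind that value_counts() returns one row per unique value, so on a high-cardinality column (such as an ID column) the result can be almost as large as the data itself. If you only need the top N values, chain the head() method to get a smaller summary. This trims only the output; the full count is still computed first: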

```python
# Get only the top 2 most frequent countries
top_2_countries = df['country'].value_counts().head(2)
print(top_2_countries)
```

Output:

```
USA 4
UK 2
Name: country, dtype: int64
```

Summary Table

| Parameter | Default Value | Description |
|---|---|---|
| normalize | False | If True, returns proportions instead of counts. |
| sort | True | If False, the result is not sorted by frequency and keeps the order of first appearance. |
| ascending | False | If True, sorts the counts in ascending order (only applies when sort=True). |
| dropna | True | If False, includes NaN values in the count. |
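Parameters can be combined. One interaction worth knowing: with normalize=True, the denominator depends on dropna. This sketch reuses the data_with_nan example from above:

```python
import numpy as np
import pandas as pd

data_with_nan = pd.Series(['A', 'B', np.nan, 'A', np.nan, 'C'])

# dropna=True (default): proportions are computed over the 4 non-missing values
props = data_with_nan.value_counts(normalize=True)
print(props)

# dropna=False: NaN becomes its own row and the denominator is all 6 values
props_all = data_with_nan.value_counts(normalize=True, dropna=False)
print(props_all)
```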

In conclusion, value_counts() is a simple yet incredibly powerful method that should be in every data analyst's and data scientist's toolkit for quickly understanding and exploring categorical data.