Of course! statsmodels is a powerful and essential Python library for statistical modeling. It's the go-to tool for anyone who needs to perform traditional statistical analysis, econometrics, or explore relationships between variables in a statistically rigorous way.

Let's break down what statsmodels is, why it's important, and how to use it with clear examples.

What is statsmodels?

statsmodels is a Python module that provides classes and functions for the estimation of many different statistical models, as well as for conducting statistical tests and exploring statistical data.

Key Philosophy:

statsmodels is built on top of NumPy and SciPy. Its main goal is to provide a comprehensive and well-documented library for statistical modeling, closely mirroring the functionality of popular statistical packages like R, Stata, and SAS. It emphasizes providing rich statistical output, including p-values, confidence intervals, and model diagnostics.

How is it Different from scikit-learn?

This is a very common and important question. While both libraries fit models to data, they serve different primary purposes.

| Feature | statsmodels | scikit-learn |
|---|---|---|
| Primary Goal | Statistical inference: understanding the relationship between variables. How is each variable associated with the outcome? Is the relationship statistically significant? | Predictive modeling: building the most accurate model to predict outcomes. How well can we predict Y from X? |
| Output | Rich statistical summaries: coefficients, standard errors, t-values, p-values, R-squared, AIC, BIC, etc. | Focus on prediction metrics: accuracy, precision, recall, F1-score, Mean Squared Error (MSE), etc. |
| API | Data is passed to the model constructor and fit() returns a results object. The array API requires adding a constant for the intercept by hand; the formula API (statsmodels.formula.api) adds it automatically. | Unified estimator API: fit(X, y) and predict(X) across all models. |
| Models | Linear regression (OLS, WLS, GLS), GLMs (Generalized Linear Models), discrete choice models (Logit, Probit), time series (ARIMA), ANOVA, nonparametric methods. | Wide array: SVMs, Random Forests, Gradient Boosting, K-Means Clustering, etc. |

In short: Use statsmodels when you care about the "why" behind the relationship. Use scikit-learn when you care about the "what" of the prediction.

Installation

If you don't have it installed, you can get it via pip:

```bash
pip install statsmodels
```

NumPy, SciPy, and pandas are installed automatically as dependencies. matplotlib is optional; install it separately if you want to plot your data.

Core Functionality and Examples

statsmodels is organized into several modules. Let's explore the most common ones.

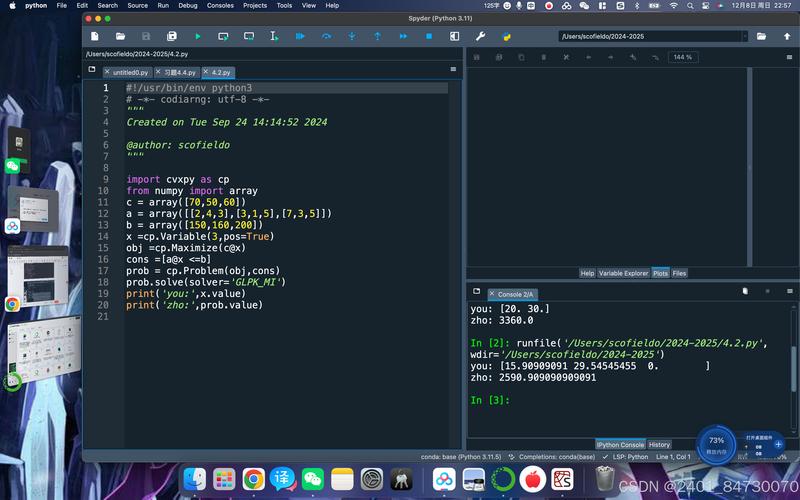

Linear Regression (statsmodels.api)

This is the most fundamental model. The standard way to do it in statsmodels is to use the statsmodels.api.OLS (Ordinary Least Squares) class.

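Key Point: Unlike scikit-learn, the statsmodels array interface (sm.OLS and similar classes) does not automatically add a constant (intercept) term to your data. You must add it manually using sm.add_constant(). The formula interface (statsmodels.formula.api) is the exception: it includes an intercept by default.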

Example: Predicting House Prices

```python
import numpy as np
import pandas as pd
import statsmodels.api as sm
import matplotlib.pyplot as plt  # used later for plotting

# 1. Create some sample data
# Let's say we have data on house prices (y) based on square footage (x1)
# and the number of bedrooms (x2).
np.random.seed(42)
n = 100
sq_footage = np.random.normal(1500, 500, n)
bedrooms = np.random.randint(1, 6, n)

# The true relationship: price = 50000 + 150 * sq_footage + 10000 * bedrooms + noise
price = 50000 + 150 * sq_footage + 10000 * bedrooms + np.random.normal(0, 25000, n)

data = pd.DataFrame({
    'price': price,
    'sq_footage': sq_footage,
    'bedrooms': bedrooms
})

# 2. Define independent (X) and dependent (y) variables
X = data[['sq_footage', 'bedrooms']]
y = data['price']

# 3. Add a constant (intercept) to the independent variables
# This is a crucial step!
X_with_const = sm.add_constant(X)

# 4. Create and fit the OLS model
model = sm.OLS(y, X_with_const)
results = model.fit()

# 5. Print the comprehensive summary
print(results.summary())
```

Interpreting the Output:

The results.summary() table is the heart of statsmodels. Here's what to look for:

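- R-squared: the proportion of the variance in house prices explained by the model. Because the simulated prices are driven mostly by square footage, expect a high value; for example, 0.90 would mean 90% of the variance is explained.
- coef (Coefficient): the estimated effect of each variable. The estimates should land near the true values used to simulate the data, though not exactly on them because of the added noise (especially the intercept):
  - const: ~50,000. The intercept, i.e. the predicted price of a house with 0 sq ft and 0 bedrooms. This is an extrapolation with no practical meaning here.
  - sq_footage: ~150. For every additional square foot, the price increases by ~$150, holding bedrooms constant.
  - bedrooms: ~10,000. For each additional bedroom, the price increases by ~$10,000, holding square footage constant.
- P>|t| (p-value): tells you whether a coefficient is statistically significantly different from zero.
  - A common threshold is 0.05. If the p-value is below 0.05, we say the variable is a statistically significant predictor of the outcome.
  - In this example, the p-values for sq_footage and bedrooms should be very close to 0, meaning both are highly significant predictors.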

Generalized Linear Models (statsmodels.genmod)

GLMs extend linear regression to response variables whose error distributions are not normal (e.g., binary outcomes or counts), connecting the mean of the response to the predictors through a link function.

Example: Logistic Regression for Binary Classification

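Let's predict whether a house is "expensive" (price above the median price) based on its features. Logistic regression is the binary-response GLM (Binomial family, logit link); here it is fit with sm.Logit, the dedicated logistic regression class from the statsmodels.discrete module.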

```python
# 1. Create the binary target variable
data['is_expensive'] = (data['price'] > data['price'].median()).astype(int)

# 2. Define X and y
X = data[['sq_footage', 'bedrooms']]
y = data['is_expensive']

# 3. Add constant
X_with_const = sm.add_constant(X)

# 4. Create and fit the Logit model
logit_model = sm.Logit(y, X_with_const)
logit_results = logit_model.fit()

# 5. Print summary
print(logit_results.summary())
```

Interpreting Logistic Regression Output:

- The coefficients are on the log-odds scale. To make them easier to interpret, exponentiate them to get odds ratios.
- coef: a positive coefficient means that as the predictor increases, the log-odds of the outcome (is_expensive = 1) also increase.
- P>|z|: Logit reports z-statistics rather than t-statistics, but the p-values are interpreted just as in OLS: a low p-value indicates a significant predictor.

```python
# Get the odds ratios
print("\nOdds Ratios:")
print(np.exp(logit_results.params))
```

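An odds ratio greater than 1 for sq_footage means that each additional square foot multiplies the odds of the house being expensive by that ratio, holding bedrooms constant. Because one square foot is a tiny change, expect this ratio to be only slightly above 1.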

Time Series Analysis (statsmodels.tsa)

statsmodels has excellent tools for time series analysis, including ARIMA models for forecasting.

Example: ARIMA Model

Let's create a simple time series and fit an ARIMA(1,1,1) model.

```python
# 1. Create a simple time series
np.random.seed(42)
dates = pd.date_range(start='2025-01-01', periods=50, freq='D')
ts = pd.Series(np.cumsum(np.random.randn(50)), index=dates)

# 2. Plot the data
ts.plot(figsize=(12, 6))
plt.title('Sample Time Series Data')
plt.show()

# 3. Fit an ARIMA(1,1,1) model
from statsmodels.tsa.arima.model import ARIMA

# The 'order' is (p, d, q): p=1 AR term, d=1 difference, q=1 MA term
arima_model = ARIMA(ts, order=(1, 1, 1))
arima_results = arima_model.fit()

# 4. Print summary
print(arima_results.summary())
```

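arima_results.summary() reports the estimated AR and MA coefficients (ar.L1, ma.L1) and the noise variance (sigma2), with their standard errors and p-values, plus fit statistics such as AIC and BIC, helping you understand the underlying structure of the time series. Because this series is a pure random walk (a cumulative sum of noise), the AR and MA terms may well come out insignificant.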

Statistical Tests (statsmodels.stats)

statsmodels provides a wide array of statistical tests.

Example: T-test for Independent Samples

Let's test if the average price of houses with 3+ bedrooms is significantly different from those with fewer.

```python
# Split the data
high_bed = data[data['bedrooms'] >= 3]['price']
low_bed = data[data['bedrooms'] < 3]['price']

# Perform the t-test (by default, the pooled-variance Student's t-test)
from statsmodels.stats.weightstats import ttest_ind

t_stat, p_value, df = ttest_ind(high_bed, low_bed, alternative='two-sided')
print(f"T-statistic: {t_stat:.4f}")
print(f"P-value: {p_value:.4f}")

if p_value < 0.05:
    print("\nThe difference in means is statistically significant.")
else:
    print("\nThe difference in means is not statistically significant.")
```
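If the two groups may have different variances, Welch's t-test is the safer choice; in statsmodels it is selected with usevar="unequal". A confidence interval for the difference in means is often more informative than a p-value alone, and CompareMeans.tconfint_diff provides one. A sketch on the same simulated groups:

```python
import numpy as np
import pandas as pd
from statsmodels.stats.weightstats import CompareMeans, DescrStatsW, ttest_ind

# Rebuild the two groups from the house-price example
np.random.seed(42)
n = 100
sq_footage = np.random.normal(1500, 500, n)
bedrooms = np.random.randint(1, 6, n)
price = 50000 + 150 * sq_footage + 10000 * bedrooms + np.random.normal(0, 25000, n)
data = pd.DataFrame({"price": price, "bedrooms": bedrooms})
high_bed = data[data["bedrooms"] >= 3]["price"]
low_bed = data[data["bedrooms"] < 3]["price"]

# Welch's t-test: usevar="unequal" drops the equal-variance assumption
t_stat, p_value, dof = ttest_ind(high_bed, low_bed, alternative="two-sided", usevar="unequal")
print(f"Welch t = {t_stat:.4f}, p = {p_value:.4f}, df = {dof:.1f}")

# 95% confidence interval for mean(high_bed) - mean(low_bed)
cm = CompareMeans(DescrStatsW(high_bed), DescrStatsW(low_bed))
lower, upper = cm.tconfint_diff(alpha=0.05, usevar="unequal")
print(f"Difference in means: 95% CI [{lower:,.0f}, {upper:,.0f}]")
```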

Summary and When to Use statsmodels

Use statsmodels when your goal is to:

- Understand relationships between variables and quantify their significance.

- Perform hypothesis testing (e.g., "Is this coefficient different from zero?").

- Conduct statistical inference (e.g., calculate confidence intervals).

- Analyze time series data with classical models like ARIMA.

- Perform ANOVA or fit other linear models, such as weighted or generalized least squares.

- Build models where interpretability of coefficients is more important than pure predictive accuracy.
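As an example of the ANOVA item, a one-way ANOVA in statsmodels is a linear model with a categorical predictor followed by anova_lm. A sketch on hypothetical simulated data with three groups (the group labels and means are made up):

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf
from statsmodels.stats.anova import anova_lm

# Hypothetical data: three groups of 30 with different true means
rng = np.random.default_rng(5)
df = pd.DataFrame({
    "group": np.repeat(["a", "b", "c"], 30),
    "y": np.concatenate([rng.normal(m, 1.0, 30) for m in (0.0, 0.5, 1.0)]),
})

# Fit y on the categorical group variable, then build the ANOVA table
model = smf.ols("y ~ C(group)", data=df).fit()
table = anova_lm(model, typ=2)
print(table)  # columns: sum_sq, df, F, PR(>F)
```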

For most standard statistical analysis tasks in Python, statsmodels is an indispensable tool.