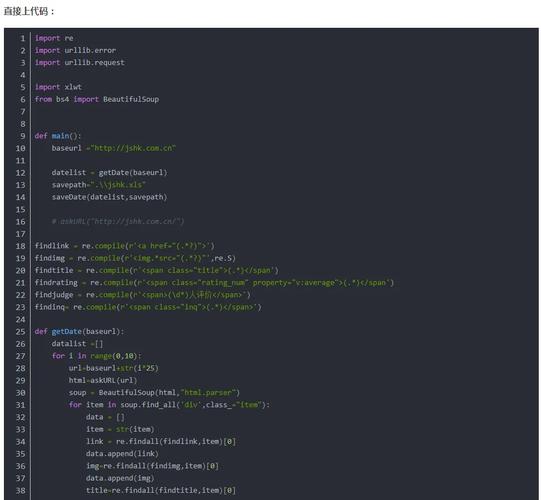

Of course! Let's dive deep into SoupStrainer in Python's BeautifulSoup library. It's a powerful and often overlooked feature. When you only need part of a large document, it can significantly reduce memory use and often parsing time.

What is a SoupStrainer?

A SoupStrainer is a simple filter class that you pass to the BeautifulSoup constructor through its parse_only argument. It tells BeautifulSoup to keep only the matching parts of the document in the parse tree and to discard everything else.

Think of it like a fishing net with a specific mesh size. You can throw the net into a large lake (the HTML document) and only catch the fish (the HTML tags) you're interested in, leaving all the other fish and debris behind.
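The net analogy glosses over one detail. When a tag matches, its entire subtree is kept. Anything outside a matched tag is dropped, including its text. The tiny document below is made up purely to show this.

```python
from bs4 import BeautifulSoup, SoupStrainer

# Made-up markup: we want the <div class="box"> (with its nested <span>),
# and we want the parser to drop the unrelated <p>.
html = '<p>skip me</p><div class="box">keep <span>me</span></div>'

# Match only <div class="box">; everything inside it comes along
strainer = SoupStrainer('div', class_='box')
soup = BeautifulSoup(html, 'html.parser', parse_only=strainer)

print(soup)            # <div class="box">keep <span>me</span></div>
print(soup.find('p'))  # None: the <p> and its text never entered the tree
```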

Why Use SoupStrainer? (The Benefits)

- Speed: Suppose you have a massive HTML file (e.g., 10MB) but only need a few tags from it, such as a single <div>. SoupStrainer keeps only those tags and their contents in the parse tree. The parser still has to read through the entire document, so the parsing speedup is usually modest. The larger gains come from not building objects for everything else and from searching a far smaller tree afterwards.
- Memory Efficiency: Because discarded markup never becomes part of the parse tree, you use significantly less RAM. This is often the biggest win, and it is crucial when working with very large files or in memory-constrained environments.
- Simplicity: You don't have to write complex find() or select() calls after parsing the whole document. You get the exact structure you need right from the start.
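You can check the speed claim on your own data with a rough timing comparison like the one below. The synthetic document and its row counts are arbitrary assumptions made for illustration. Absolute timings vary by machine and parser, so treat the output as a measurement, not a guarantee.

```python
import timeit
from bs4 import BeautifulSoup, SoupStrainer

# Synthetic document (an assumption for illustration): many unrelated
# <div> rows plus ten <p class="price"> tags that we actually want.
rows = ''.join(f'<div class="row"><span>row {i}</span></div>' for i in range(2000))
price_tags = ''.join(f'<p class="price">${i}.99</p>' for i in range(10))
html = f'<html><body>{rows}{price_tags}</body></html>'

strainer = SoupStrainer('p', class_='price')

def full_parse():
    # Build the complete tree, then search it
    return BeautifulSoup(html, 'html.parser').find_all('p', class_='price')

def strained_parse():
    # Keep only the matching tags while parsing, then search the small tree
    soup = BeautifulSoup(html, 'html.parser', parse_only=strainer)
    return soup.find_all('p', class_='price')

# Both approaches must find the same ten tags before timing means anything
assert len(full_parse()) == len(strained_parse()) == 10

print('full parse:    ', timeit.timeit(full_parse, number=3))
print('strained parse:', timeit.timeit(strained_parse, number=3))
```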

How to Use SoupStrainer

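You create a SoupStrainer object by passing it the same kind of arguments you would pass to soup.find() or soup.find_all(). These include a tag name, keyword attribute filters such as id=... or class_=..., or an attrs dictionary. It does not accept CSS selectors the way soup.select() does. Each filter can be a string, a regular expression, a list, a function, or True.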
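Lists are the one filter type the numbered examples don't cover. A list matches any of its entries. The markup here is a made-up snippet.

```python
from bs4 import BeautifulSoup, SoupStrainer

html = '<h1>Title</h1><p>Intro</p><ul><li>One</li><li>Two</li></ul>'

# A list matches any of the listed tag names
headings_and_items = SoupStrainer(['h1', 'li'])
soup = BeautifulSoup(html, 'html.parser', parse_only=headings_and_items)

print([tag.name for tag in soup.find_all(True)])  # ['h1', 'li', 'li']
```

Notice that the <ul> itself is not kept. It didn't match, so the parser skipped it and went on to check its <li> children individually.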

Let's look at the different ways to create one.

Setup: Example HTML

We'll use this sample HTML for all our examples.

```html
<html>
<head><title>My Awesome Page</title>
  <style>body { color: blue; }</style>
</head>
<body>
  <h1 id="main-heading">Welcome to the Page</h1>
  <div class="content">
    <p class="intro">This is the first paragraph.</p>
    <p class="intro">This is the second paragraph.</p>
    <ul>
      <li>Item 1</li>
      <li>Item 2</li>
    </ul>
  </div>
  <footer>
    <p>Copyright 2025</p>
  </footer>
</body>
</html>
```

Example 1: Filtering by Tag Name

You can pass a single tag name as a string.

```python
from bs4 import BeautifulSoup, SoupStrainer

html_doc = """
<html>
<head><title>My Awesome Page</title>
<style>body { color: blue; }</style>
</head>
<body>
<h1 id="main-heading">Welcome to the Page</h1>
<div class="content">
<p class="intro">This is the first paragraph.</p>
<p class="intro">This is the second paragraph.</p>
<ul>
<li>Item 1</li>
<li>Item 2</li>
</ul>
</div>
<footer><p>Copyright 2025</p></footer>
</body>
</html>
"""

# Create a SoupStrainer for all <p> tags
only_p_tags = SoupStrainer('p')

# Parse the document, keeping only <p> tags (and their contents)
soup = BeautifulSoup(html_doc, 'html.parser', parse_only=only_p_tags)

# The soup now contains every <p> tag, including the one in the footer
print(soup)
# Output:
# <p class="intro">This is the first paragraph.</p><p class="intro">This is the second paragraph.</p><p>Copyright 2025</p>

# You can operate on it normally
print(soup.find_all('p'))
# Output:
# [<p class="intro">This is the first paragraph.</p>, <p class="intro">This is the second paragraph.</p>, <p>Copyright 2025</p>]
```

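Example 2: Filtering by Attributes (id and class)

You can filter on attributes with the same keyword arguments that find() accepts, such as id and class_. The trailing underscore is needed because class is a Python keyword. SoupStrainer does not understand CSS selectors. For a selector like div.content > ul, strain first and then call select() on the smaller tree.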

```python
from bs4 import BeautifulSoup, SoupStrainer

# html_doc holds the sample HTML from the Setup section

# Create a SoupStrainer for a specific ID
only_main_heading = SoupStrainer(id='main-heading')
soup1 = BeautifulSoup(html_doc, 'html.parser', parse_only=only_main_heading)
print(soup1)
# Output:
# <h1 id="main-heading">Welcome to the Page</h1>

# Create a SoupStrainer for a class
only_intro_paragraphs = SoupStrainer('p', class_='intro')
soup2 = BeautifulSoup(html_doc, 'html.parser', parse_only=only_intro_paragraphs)
print(soup2)
# Output:
# <p class="intro">This is the first paragraph.</p><p class="intro">This is the second paragraph.</p>

# SoupStrainer('div.content > ul') would look for a tag literally named
# "div.content > ul" and match nothing. Instead, keep only the content <div>,
# then run the CSS selector on that much smaller tree.
only_content_div = SoupStrainer('div', class_='content')
soup3 = BeautifulSoup(html_doc, 'html.parser', parse_only=only_content_div)
print(soup3.select('div.content > ul'))
# Output: a one-element list holding the <ul> with its two <li> items
```
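Some attribute names, such as HTML5 data-* attributes, can't be written as Python keyword arguments. For those, pass an attrs dictionary, just as you would with find_all(). The markup below is made up for illustration.

```python
from bs4 import BeautifulSoup, SoupStrainer

html = '<div data-role="card">A</div><div data-role="ad">B</div><div>C</div>'

# "data-role" isn't a valid keyword argument name, so use the attrs dictionary
cards_only = SoupStrainer('div', attrs={'data-role': 'card'})
soup = BeautifulSoup(html, 'html.parser', parse_only=cards_only)

print(soup)  # <div data-role="card">A</div>
```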

Example 3: Filtering with a Function

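For maximum flexibility, you can provide a function. The most predictable place to use one is as an attribute filter. The function receives that attribute's value (None when the tag doesn't have the attribute), and the tag is kept only if the function returns True. A function passed as the tag-name filter behaves differently. Depending on your Beautiful Soup version, during parsing it may be called with the tag name and attribute dictionary instead of a Tag object, so Tag methods such as has_attr() can fail there.

```python
from bs4 import BeautifulSoup, SoupStrainer

# html_doc holds the sample HTML from the Setup section

# Called with the value of each tag's id attribute (None if the tag has no id)
def has_id(id_value):
    return id_value is not None

only_tags_with_id = SoupStrainer(id=has_id)
soup = BeautifulSoup(html_doc, 'html.parser', parse_only=only_tags_with_id)
print(soup)
# Output:
# <h1 id="main-heading">Welcome to the Page</h1>
```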
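For this particular check you don't need a function at all. As with find_all(), passing True as an attribute value matches any tag that has that attribute, whatever its value. The snippet below uses made-up markup.

```python
from bs4 import BeautifulSoup, SoupStrainer

html = '<h1 id="main-heading">Welcome</h1><p class="intro">Hi</p><p>Bye</p>'

# id=True matches any tag carrying an id attribute
only_tags_with_id = SoupStrainer(id=True)
soup = BeautifulSoup(html, 'html.parser', parse_only=only_tags_with_id)

print(soup)  # <h1 id="main-heading">Welcome</h1>
```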

Example 4: Filtering with a Regular Expression

You can use a compiled regular expression to match tag names or attribute values.

```python
import re
from bs4 import BeautifulSoup, SoupStrainer

# html_doc holds the sample HTML from the Setup section

# Match heading tags <h1> through <h6>
# (a bare '^h' would also match <html> and <head>)
heading_tags = SoupStrainer(re.compile(r'^h[1-6]$'))
soup = BeautifulSoup(html_doc, 'html.parser', parse_only=heading_tags)
print(soup)
# Output:
# <h1 id="main-heading">Welcome to the Page</h1>

# Match any tag that has a class attribute containing the word 'intro'
intro_class = SoupStrainer(class_=re.compile('intro'))
soup = BeautifulSoup(html_doc, 'html.parser', parse_only=intro_class)
print(soup)
# Output:
# <p class="intro">This is the first paragraph.</p><p class="intro">This is the second paragraph.</p>
```
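Anchoring a tag-name regex matters because a matched tag keeps its whole subtree. The comparison below uses a made-up page. The unanchored '^h' pattern matches <html>, so the entire document survives the strainer. The tighter pattern keeps only the headings.

```python
import re
from bs4 import BeautifulSoup, SoupStrainer

html = ('<html><head><title>T</title></head>'
        '<body><h1>A</h1><h2>B</h2><p>C</p></body></html>')

too_broad = SoupStrainer(re.compile('^h'))          # also matches <html> and <head>
headings = SoupStrainer(re.compile(r'^h[1-6]$'))    # only <h1> ... <h6>

broad_soup = BeautifulSoup(html, 'html.parser', parse_only=too_broad)
heading_soup = BeautifulSoup(html, 'html.parser', parse_only=headings)

print(broad_soup.find('p') is not None)               # True: <html> matched, so everything was kept
print([t.name for t in heading_soup.find_all(True)])  # ['h1', 'h2']
```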

Practical Example: Scraping Product Prices from an E-commerce Page

Imagine you have a huge product page, but you only care about the prices.

Hypothetical HTML:

```html
<html>
  <!-- ... lots of navigation, header, footer scripts ... -->
  <body>
    <div class="product-list">
      <article class="product-card">
        <h3>Super Widget</h3>
        <p class="price">$19.99</p>
      </article>
      <article class="product-card">
        <h3>Mega Gadget</h3>
        <p class="price">$49.50</p>
      </article>
      <!-- ... 1000 more product cards ... -->
    </div>
    <!-- ... more footer content ... -->
  </body>
</html>
```

Without SoupStrainer (Slower):

```python
import requests
from bs4 import BeautifulSoup

url = "https://example.com/huge-product-page"
response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')  # Parses the WHOLE page
prices = soup.select('p.price')
print(f"Found {len(prices)} prices.")
```

With SoupStrainer (Leaner and More Memory-Efficient):

```python
import requests
from bs4 import BeautifulSoup, SoupStrainer

url = "https://example.com/huge-product-page"
response = requests.get(url)

# Create a strainer to find ONLY the price tags
price_strainer = SoupStrainer('p', class_='price')

# Parse the document, keeping only the price tags
soup = BeautifulSoup(response.text, 'html.parser', parse_only=price_strainer)

# The soup object is now small and focused
print(soup)
# Output (for the two product cards shown above):
# <p class="price">$19.99</p><p class="price">$49.50</p>

prices = soup.find_all('p', class_='price')
print(f"Found {len(prices)} prices.")
```
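If you also need each product's name, straining at the card level keeps the name and price together. Here is a minimal offline sketch. It uses an inline stand-in for the hypothetical page instead of a network request, and it assumes every price is a dollar amount like "$19.99".

```python
from bs4 import BeautifulSoup, SoupStrainer

# A small stand-in for the hypothetical product page (no network needed)
html = """
<html><body>
<nav>Home | Shop</nav>
<div class="product-list">
  <article class="product-card"><h3>Super Widget</h3><p class="price">$19.99</p></article>
  <article class="product-card"><h3>Mega Gadget</h3><p class="price">$49.50</p></article>
</div>
</body></html>
"""

# Keep whole product cards so each name stays next to its price
card_strainer = SoupStrainer('article', class_='product-card')
soup = BeautifulSoup(html, 'html.parser', parse_only=card_strainer)

# Map product name -> price as a float (assumes a leading "$")
products = {
    card.h3.get_text(strip=True): float(card.find('p', class_='price').get_text(strip=True).lstrip('$'))
    for card in soup.find_all('article')
}
print(products)  # {'Super Widget': 19.99, 'Mega Gadget': 49.5}
```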

Key Takeaways

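- Use SoupStrainer when you know exactly which part of the HTML you need.
- It reduces memory usage, often substantially, because unmatched markup never enters the parse tree.
- It can speed up work on large documents, mainly by skipping tree construction and making later searches faster. The parser still has to read the whole input.
- The syntax is familiar: pass the same arguments you would pass to find() or find_all(). These include a tag name, keyword attribute filters like id or class_, an attrs dictionary, regular expressions, lists, or functions.
- SoupStrainer does not accept CSS selectors. To use a selector such as div.content > ul, strain with SoupStrainer('div', class_='content') first, then call select() on the result.
- The html5lib parser ignores parse_only. Use html.parser or lxml when you rely on it.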