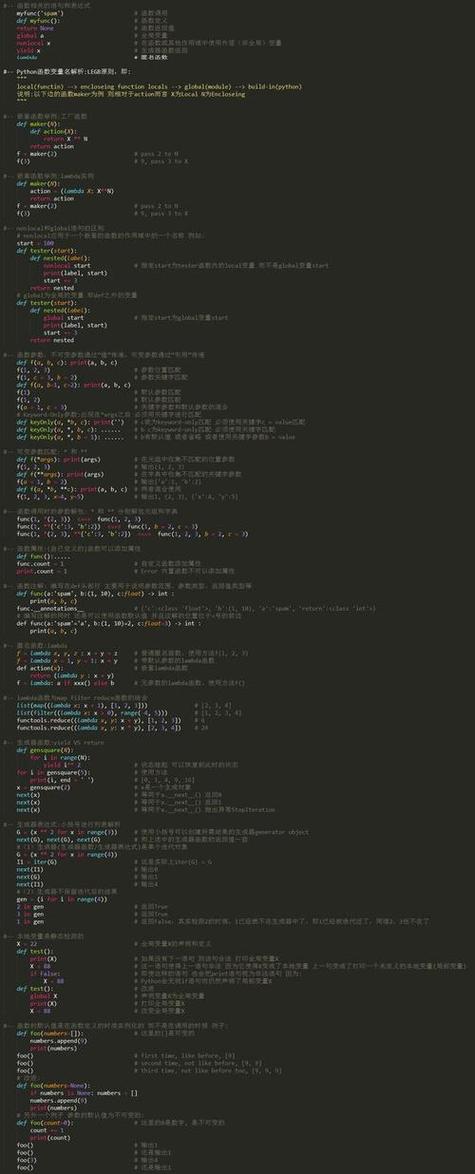

Of course. Here is a comprehensive guide covering the key aspects of using Python 3.6.1 with Scrapy.

This guide will cover:

- Compatibility: Is Python 3.6.1 still supported by Scrapy?

- Installation: How to install Scrapy on Python 3.6.1.

- A Simple Project: A step-by-step tutorial to create and run your first Scrapy spider.

- Important Considerations: Key things to be aware of when using this older version.

Compatibility: Is Python 3.6.1 Still Supported?

This is the most important question to answer first.

Short Answer: No, modern versions of Scrapy no longer officially support Python 3.6.

Detailed Explanation:

- Scrapy's Official Support: Scrapy drops support for old Python versions over time, usually after they reach end of life. Python 3.6 reached its official end of life (EOL) on December 23, 2021, which means it no longer receives security updates or bug fixes. Scrapy 2.7 was the first release to drop support for it.

- Consequences for Scrapy:
  - Installation Issues: You cannot install recent versions of Scrapy (Scrapy 2.7 and later, e.g., Scrapy 2.11) on Python 3.6. Their package metadata (`python_requires`) excludes Python 3.6, so pip refuses to install them.
  - Security Vulnerabilities: Any Scrapy version that runs on Python 3.6 is old and is affected by security issues that were fixed only in later releases. You should not use it for production work or for scraping sensitive data.
  - Bugs and Instability: Old versions of Scrapy may have bugs that have long since been fixed in newer releases.


Recommendation: While you can get an old version of Scrapy to run on Python 3.6.1, it is highly discouraged. The best practice is to upgrade to a Python version that is still supported both by the Python core team and by current Scrapy releases (for example, Python 3.10 or newer).

If you absolutely cannot upgrade, you must use a specific, older version of Scrapy.

Installation on Python 3.6.1

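An unpinned `pip install scrapy` leaves the choice of release to pip, so pin a version that is known to work on Python 3.6. Scrapy 2.7 dropped Python 3.6 support, which makes the 2.6.x series the last to support it. This guide pins Scrapy 2.5.1, which also supports Python 3.6; if you have the choice, the later 2.6.x releases include additional security fixes.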

Here is the step-by-step process:

Step 1: Verify Your Python Version

First, make sure you are in the correct Python 3.6.1 environment.

```shell
python --version
# Expected output: Python 3.6.1
```
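If you prefer to check from Python itself, for example at the top of a setup script that has to run on this machine, the sketch below reads `sys.version_info` and returns the pip requirement this guide would use. The helper name `scrapy_requirement` and its return values are illustrative assumptions, not part of Scrapy; it simply encodes the facts above: Scrapy 2.7 dropped Python 3.6, and this guide pins Scrapy 2.5.1 on 3.6.

```python
import sys

# Pin used by this guide on Python 3.6 (Scrapy 2.7 dropped 3.6 support).
LEGACY_REQUIREMENT = "scrapy==2.5.1"


def scrapy_requirement(version_info=sys.version_info):
    """Return a pip requirement string suited to the given interpreter.

    Hypothetical helper for illustration: on Python 3.6 it returns the
    pinned legacy release; on 3.7+ it returns an unpinned requirement and
    lets pip pick the newest release whose Requires-Python matches.
    """
    major_minor = tuple(version_info[:2])
    if major_minor < (3, 6):
        raise RuntimeError(
            "Python %d.%d is older than anything this guide covers" % major_minor
        )
    if major_minor == (3, 6):
        return LEGACY_REQUIREMENT
    return "scrapy"


if __name__ == "__main__":
    print(sys.version.split()[0], "->", scrapy_requirement())
```

Passing the version as a parameter keeps the decision testable without switching interpreters, e.g. `scrapy_requirement((3, 6, 1))`.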

Step 2: Install Scrapy 2.5.x

Use pip to install a Scrapy version that is compatible with Python 3.6. It's good practice to upgrade pip and install the `wheel` package first, as this can speed up installation.

```shell
# Upgrade pip and install wheel first (good practice).
# On Python 3.6, pip only upgrades as far as its last 3.6-compatible release.
python -m pip install --upgrade pip wheel

# Install a Scrapy version compatible with Python 3.6
python -m pip install scrapy==2.5.1
```

To see which other releases work with Python 3.6, check the release history on Scrapy's PyPI page; each release lists the Python versions it requires.

Step 3: Verify the Installation

Check if Scrapy was installed correctly and see its version.

```shell
scrapy version
# Expected output: Scrapy 2.5.1 ...
```
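If you want the same check inside a Python script (for instance, to stop early on a machine that has the wrong release), a small sketch using the `scrapy.__version__` attribute might look like this. The helper names and the `"2.5."` series prefix are illustrative assumptions that match the pin used in this guide.

```python
def installed_scrapy_version():
    """Return the installed Scrapy version string, or None if Scrapy is missing."""
    try:
        import scrapy
    except ImportError:
        return None
    return scrapy.__version__


def matches_series(version, series="2.5."):
    """Return True if `version` belongs to the expected release series."""
    return version is not None and version.startswith(series)


version = installed_scrapy_version()
print("Scrapy version:", version)
print("Matches the 2.5.x pin:", matches_series(version))
```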

A Simple Scrapy Project (Tutorial)

Now that you have a working (but old) installation, let's create a basic spider to scrape quotes from http://quotes.toscrape.com/.

Step 1: Create a New Scrapy Project

Run the startproject command in your terminal.

```shell
scrapy startproject tutorial
```

This will create a tutorial directory with the following structure:

```
tutorial/
    scrapy.cfg            # deploy configuration file
    tutorial/             # project's Python module, you'll import your code from here
        __init__.py
        items.py          # project items definition file
        middlewares.py    # project middlewares file
        pipelines.py      # project pipelines file
        settings.py       # project settings file
        spiders/          # a directory where you'll put your spiders
            __init__.py
```

Step 2: Define an Item

An "Item" is a Python class that defines the fields you want to scrape. Open tutorial/items.py and define the fields for a quote: text, author, and tags.

```python
# tutorial/items.py
import scrapy


class QuoteItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    text = scrapy.Field()
    author = scrapy.Field()
    tags = scrapy.Field()
```

Step 3: Create a Spider

A Spider is a class that you define, and Scrapy uses it to scrape information from a website (or a group of websites).

- Create a new file named `quotes_spider.py` inside the `tutorial/spiders/` directory.
- Add the following code to the file.

```python
# tutorial/spiders/quotes_spider.py
import scrapy

from tutorial.items import QuoteItem  # Import the Item we defined


class QuotesSpider(scrapy.Spider):
    name = "quotes"  # Unique name for the spider
    allowed_domains = ["quotes.toscrape.com"]
    start_urls = [
        "http://quotes.toscrape.com/page/1/",
    ]

    def parse(self, response):
        """
        This method is called for each response downloaded.
        It's where the parsing logic goes.
        """
        # The CSS selector to find all quote containers on the page
        quotes = response.css('div.quote')

        for quote in quotes:
            # Create an instance of our Item
            item = QuoteItem()

            # Extract data using CSS selectors with .get() and .getall().
            # .get() returns the first match (or the default) instead of
            # raising IndexError like .extract()[0] on an empty result.
            # The site wraps each quote in curly quotation marks, so strip them.
            item['text'] = quote.css('span.text::text').get(default='').strip('“”"')
            item['author'] = quote.css('small.author::text').get()
            item['tags'] = quote.css('div.tags a.tag::text').getall()

            yield item

        # Follow the "Next" button to scrape the next page
        next_page = response.css('li.next a::attr(href)').get()
        if next_page is not None:
            # response.follow() resolves the relative URL against the current page
            yield response.follow(next_page, callback=self.parse)
```
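`response.follow()` accepts the relative `href` taken from the "Next" button and resolves it against the URL of the page being parsed. The standard-library sketch below shows that resolution step with `urllib.parse.urljoin`; it is an approximation for illustration, since Scrapy also honours a `<base>` tag if the page has one, and the `next_href` value is an example rather than something read from a live page.

```python
from urllib.parse import urljoin

# URL of the page currently being parsed, and an example of the href that
# response.css('li.next a::attr(href)').get() would return on that page.
current_url = "http://quotes.toscrape.com/page/1/"
next_href = "/page/2/"

# A leading "/" makes the href relative to the site root, not the current path.
next_url = urljoin(current_url, next_href)
print(next_url)  # http://quotes.toscrape.com/page/2/
```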

Step 4: Run the Spider

Navigate to the project's root directory (tutorial/) in your terminal and run the spider using its name.

```shell
cd tutorial
scrapy crawl quotes
```

You should see the scraped data being printed to your console in JSON-like format. It will scrape the first page, find the "Next" link, and continue until there are no more pages.

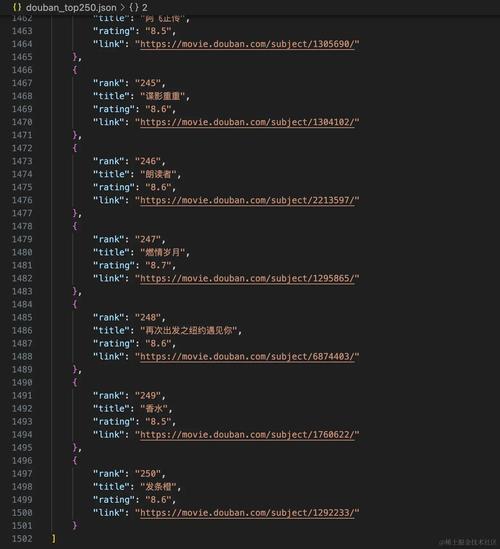

Step 5: Save the Output

To save the scraped data to a file (e.g., a JSON file), use the -o or --output option.

```shell
scrapy crawl quotes -o quotes.json
```

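This will create a quotes.json file in your tutorial directory with the results. Note that `-o` appends to an existing file, so running the command twice leaves the file with invalid JSON; use `-O` (available since Scrapy 2.4) to overwrite the file instead.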
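With `-o quotes.json`, Scrapy's JSON exporter writes a single JSON array containing one object per item, using the `text`, `author` and `tags` fields defined in `QuoteItem`. A minimal sketch for loading that file and summarising it with the standard library might look like this; the `summarize_quotes` helper is hypothetical and not part of Scrapy.

```python
import json
from collections import Counter


def summarize_quotes(path="quotes.json", top=3):
    """Load a Scrapy JSON feed and return (item count, most common authors)."""
    with open(path, encoding="utf-8") as f:
        items = json.load(f)  # the JSON feed is a list of item dicts
    authors = Counter(item.get("author") for item in items)
    return len(items), authors.most_common(top)
```

For example, `summarize_quotes("quotes.json")` returns the number of scraped quotes together with the three most frequent authors as `(author, count)` pairs.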

Important Considerations and Best Practices

Using an old Python/Scrapy stack comes with significant caveats.

| Feature | Modern Scrapy (Python 3.8+) | Your Setup (Python 3.6.1 + Scrapy 2.5) |
|---|---|---|
| Security | High. Receives regular security updates. | Very Low. Contains known, unpatched vulnerabilities. |
| Bugs | Few. Actively maintained and bugs are fixed. | Likely. Many bugs from that era were fixed only in later releases. |
| Features | Latest. Includes years of improvements, such as more mature asyncio support. | Outdated. Missing years of improvements. |
| Dependencies | Can use current releases of its dependencies. | Stuck with older releases of dependencies such as Twisted. |
| Documentation | All documentation and tutorials assume modern versions. | Some documentation may not apply, and you may encounter outdated advice. |
| Community Support | Active community on forums, Discord, etc. | Community focus has moved on. You'll have a harder time getting help for old-version-specific issues. |

Final Recommendation:

While this guide shows you how to make it work, the responsible and most effective approach is to upgrade your Python environment. A tool such as pyenv lets you install several Python versions side by side, and venv lets you give each project its own isolated environment, so you can use a modern Python for Scrapy projects while keeping 3.6.1 for other legacy tasks if absolutely necessary.
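For the venv part, the standard-library `venv` module can also be driven from Python. The sketch below creates an isolated environment in a given directory; note that venv reuses the interpreter that runs it, so to get a newer Python you first need that interpreter installed (the part pyenv handles, which is a separate command-line tool and not shown here). The environment name `scrapy-env` is an illustrative assumption.

```python
import os
import venv


def make_env(parent_dir, name="scrapy-env"):
    """Create a bare virtual environment under parent_dir and return its path.

    with_pip=False keeps the sketch fast and offline; pass with_pip=True
    (what `python -m venv` does by default) to bootstrap pip into it.
    """
    env_dir = os.path.join(parent_dir, name)
    venv.create(env_dir, with_pip=False)
    return env_dir
```

Running this from a newer interpreter (for example one installed with pyenv) gives an environment based on that interpreter, into which you can install a current Scrapy release.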