"Learning curve" in Python can mean two main things, and it helps to keep them apart:

- The Concept: The general difficulty and progress path for someone learning Python.

- The Tool: A specific function called learning_curve, from scikit-learn, used to evaluate machine learning models.

I'll cover both in detail.

Part 1: The Concept - The Python Learning Curve for Beginners

This is about the journey you take when learning Python. Python is famous for having a gentle learning curve, especially for beginners, but it gets progressively steeper as you advance.

The Shape of the Curve (A General Model)

- The Initial Slope (Very Gentle):

- Why it's easy: Python's syntax is clean, readable, and often resembles plain English. You can write a functional program with very little code.

- What you learn:

- Basic syntax (indentation, variables, data types like strings, numbers, booleans).

- Simple data structures (lists, dictionaries).

- Basic control flow (if/else, for/while loops).

- Result: You feel productive quickly. You can write small scripts to automate simple tasks (e.g., rename files, perform simple calculations).

- The Plateau (Intermediate Level):

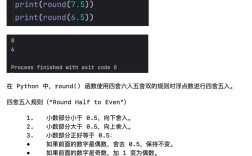

(图片来源网络,侵删)

(图片来源网络,侵删)- Why it gets harder: The concepts become more abstract. You need to think about how different parts of your program interact.

- What you learn:

- Functions: Reusing code and breaking problems down.

- Object-Oriented Programming (OOP): Classes, objects, inheritance, and encapsulation. This is a major mental shift for many.

- Error Handling (

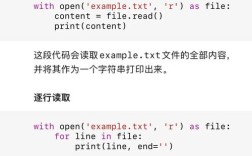

try...except): Making your programs robust. - Working with Libraries: Using

pipto install packages and importing them (e.g.,pandasfor data,requestsfor web). - File I/O: Reading from and writing to files.

- Result: Progress feels slower. You're not just writing code; you're designing small applications. This is where many people get stuck and need consistent practice.

- The Steep Ascent (Advanced Level):

- Why it's very steep: You're no longer just using Python; you're understanding its internals and the systems it runs on.

- What you learn:

- Advanced OOP: Magic methods (__init__, __str__), decorators, metaclasses.

- Concurrency & Parallelism: threading, multiprocessing, asyncio.

- Metaprogramming: Code that writes code.

- C-Extensions: Writing performance-critical code in C/C++ and using it from Python.

- Web Frameworks: Deep dives into Django or Flask.

- System Architecture: Designing large, scalable, maintainable systems.

- Result: You become an expert. The learning is deep and specialized. You're solving complex problems, not just implementing solutions.
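To make the three stages more concrete, here is a minimal sketch of one small task (counting words) written the way it might look at each level. The names TEXT, WordCounter, count_calls, and most_common_word are made up for this illustration, and the split into stages is only a rough guide.

```python
import functools
from collections import Counter

TEXT = "the quick brown fox jumps over the lazy dog the end"

# Stage 1 (beginner): variables, a dictionary, a loop, and an if/else.
counts_basic = {}
for word in TEXT.split():
    if word in counts_basic:
        counts_basic[word] += 1
    else:
        counts_basic[word] = 1


# Stage 2 (intermediate): a reusable class with error handling.
class WordCounter:
    """Counts words in any text passed to it (illustrative class)."""

    def __init__(self):
        self.counts = Counter()

    def feed(self, text):
        try:
            words = text.split()
        except AttributeError:
            # Raised when text has no split() method (for example None).
            raise TypeError("feed() expects a string") from None
        self.counts.update(words)
        return self


# Stage 3 (advanced): a decorator that adds call counting to any function.
def count_calls(func):
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def most_common_word(text):
    # Returns a (word, count) tuple for the most frequent word.
    return WordCounter().feed(text).counts.most_common(1)[0]


print(counts_basic["the"])
print(most_common_word(TEXT))
print(most_common_word.calls)
```

The task stays the same across the stages; what changes is how much structure surrounds it, which is roughly what the steeper parts of the curve feel like in practice.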

Tips for Navigating Your Learning Curve

- Consistency over Intensity: 30 minutes of coding every day is far more effective than a 5-hour cram session once a week.

- Build Projects: Don't just follow tutorials. Apply what you learn to a project you care about. This is the single best way to solidify your knowledge.

- Embrace the "Plateau": It's a normal part of learning. Don't get discouraged. Keep building, and you will eventually break through.

- Read Other People's Code: Look at open-source projects on GitHub to see how experienced programmers structure their code.

- Join a Community: Ask questions on forums like Stack Overflow, Reddit (r/learnpython), or Discord. Explaining your problem to someone else often helps you solve it.

Part 2: The Tool - sklearn.model_selection.learning_curve

This is a function from the popular scikit-learn library. It's a diagnostic tool used in machine learning to understand how a model's performance changes as it is trained on more data.

What is it?

The learning_curve function helps you visualize the relationship between:

- Training Set Size: The amount of data used to train the model.

- Model Performance: Measured by a score (e.g., accuracy, F1-score, R²).

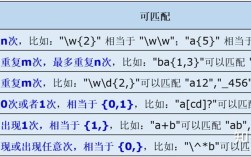

The function does not draw the plot itself; it returns the scores from which two curves are plotted:

- Training Score: How well the model performs on the data it was just trained on.

- Validation Score: How well the model performs on unseen data (a validation set).
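As a quick sketch of what the function hands back, the snippet below calls learning_curve on a small synthetic dataset and prints the shapes of the returned arrays. The dataset size, the LogisticRegression estimator, and the three training sizes are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve

# Small synthetic dataset (sizes chosen only for illustration).
X, y = make_classification(n_samples=200, n_features=10, random_state=0)

train_sizes, train_scores, val_scores = learning_curve(
    LogisticRegression(max_iter=1000),
    X,
    y,
    train_sizes=[0.25, 0.5, 1.0],  # fractions of the largest training fold
    cv=4,
)

print(train_sizes)         # absolute number of training samples at each step
print(train_scores.shape)  # (number of training sizes, number of CV folds)
print(val_scores.shape)    # same shape as train_scores
```

Each row corresponds to one training-set size and each column to one cross-validation fold, which is why the scores are usually averaged across the columns before plotting.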

Why is it Useful?

By looking at these two curves, you can diagnose common problems in your machine learning model:

- High Bias (Underfitting):

- What it looks like: Both the training and validation scores are low, and they converge quickly.

- What it means: The model is too simple to capture the underlying patterns in the data. It's not learning well from the training data.

- Solution: Use a more complex model, add more features, or reduce regularization.

- High Variance (Overfitting):

- What it looks like: The training score is very high, but the validation score is much lower. The gap between the two curves is large.

- What it means: The model has memorized the training data, including its noise, and fails to generalize to new, unseen data.

- Solution: Get more training data, use a simpler model, or increase regularization.

- Good Fit:

- What it looks like: Both the training and validation scores are high, and they converge to a similar value.

- What it means: The model has learned the underlying patterns in the data and can generalize well to new data.
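The three cases above can be turned into a rough rule of thumb. The helper below is a hypothetical sketch, not part of scikit-learn, and the thresholds good_enough and max_gap are illustrative values that would need tuning for a real problem and metric.

```python
def diagnose(train_score, val_score, good_enough=0.85, max_gap=0.05):
    """Rough label for the final point of a learning curve.

    good_enough and max_gap are illustrative thresholds, not standard values.
    """
    gap = train_score - val_score
    if gap > max_gap:
        # Training score well above validation score.
        return "high variance (overfitting)"
    if val_score < good_enough:
        # Scores are close together but both are low.
        return "high bias (underfitting)"
    return "good fit"


print(diagnose(0.62, 0.60))  # close together, both low
print(diagnose(0.99, 0.80))  # large gap
print(diagnose(0.93, 0.91))  # close together, both high
```

A single pair of numbers is a simplification; the shape of the whole curve, such as whether the validation score is still rising, usually matters as much as the final values.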

How to Use It (Code Example)

Here is a complete, runnable example.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import learning_curve
from sklearn.svm import SVC
from sklearn.datasets import make_classification

# 1. Generate a sample dataset
# We'll create a dataset that is somewhat complex to make overfitting likely
X, y = make_classification(
    n_samples=1000,
    n_features=20,
    n_informative=10,
    n_redundant=5,
    n_classes=2,
    random_state=42
)

# 2. Define the model
# We'll use a Support Vector Classifier with a high C value, which can lead to overfitting
# A high C value means the model will try hard to classify every training point correctly.
model = SVC(kernel='rbf', C=100, gamma='scale', random_state=42)

# 3. Generate the learning curve data
# We specify the number of training sizes to evaluate and the number of cross-validation folds
train_sizes, train_scores, val_scores = learning_curve(
    estimator=model,
    X=X,
    y=y,
    train_sizes=np.linspace(0.1, 1.0, 10),  # 10 different training set sizes from 10% to 100%
    cv=5,  # 5-fold cross-validation
    scoring='accuracy',
    n_jobs=-1  # Use all available CPU cores
)

# 4. Calculate mean and standard deviation for plotting
# The scores from each fold are stored, so we average them
train_mean = np.mean(train_scores, axis=1)
train_std = np.std(train_scores, axis=1)
val_mean = np.mean(val_scores, axis=1)
val_std = np.std(val_scores, axis=1)

# 5. Plot the learning curve
plt.figure(figsize=(10, 6))
plt.plot(train_sizes, train_mean, color='blue', marker='o', label='Training Accuracy')
plt.fill_between(train_sizes, train_mean + train_std, train_mean - train_std, alpha=0.15, color='blue')
plt.plot(train_sizes, val_mean, color='red', marker='s', label='Validation Accuracy')
plt.fill_between(train_sizes, val_mean + val_std, val_mean - val_std, alpha=0.15, color='red')
plt.title('Learning Curve for an SVC Model')
plt.xlabel('Number of Training Samples')
plt.ylabel('Accuracy Score')
plt.legend(loc='best')
plt.grid()
plt.show()
```

Interpreting the Output Plot

When you run the code above, you should see a plot that points to overfitting:

- The blue line (Training Accuracy) starts very high and stays high. The model performs almost perfectly on the data it was trained on.

- The red line (Validation Accuracy) is noticeably lower than the training accuracy and levels off below it.

- A persistent gap between the two curves is a classic sign of high variance/overfitting. The model has fit the training data, noise included, more closely than it can generalize.
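One of the remedies listed earlier is to increase regularization, which for an SVC means lowering C. The sketch below compares the gap between training and validation accuracy for C=100 and C=1 on the same kind of synthetic dataset. The choice of C=1 as the comparison value is an assumption for illustration, and the sizes of the gaps depend on the data, so no particular result is promised here.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import learning_curve
from sklearn.svm import SVC

# Same dataset settings as the main example.
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=10,
    n_redundant=5, n_classes=2, random_state=42,
)

gaps = {}
for C in (100, 1):  # C=1 is an arbitrary, more regularized comparison point
    _, train_scores, val_scores = learning_curve(
        SVC(kernel="rbf", C=C, gamma="scale"),
        X, y,
        train_sizes=[1.0],  # evaluate only the largest training size
        cv=5,
        scoring="accuracy",
    )
    # Difference between mean training and mean validation accuracy.
    gaps[C] = float(train_scores.mean() - val_scores.mean())

for C, gap in gaps.items():
    print(f"C={C}: train/validation gap = {gap:.3f}")
```

If the gap shrinks at the lower C without the validation accuracy dropping, that suggests the stronger regularization is helping; if both scores fall, the model may be drifting toward underfitting instead.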