Of course! The term "apply async" in Python can mean a few different things, but it most commonly refers to applying a function to a sequence of items (like a list) concurrently, rather than sequentially.

Let's break down the concept and explore the best ways to achieve it in Python.

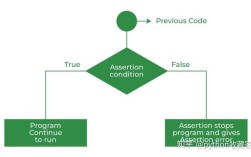

The Core Idea: Synchronous vs. Asynchronous "Apply"

Imagine you have a list of items and you want to process each one with a function.

- Synchronous apply (The Old Way): You call the function for the first item, wait for it to finish, then call it for the second item, and so on. If processing one item takes 1 second, processing 100 items will take at least 100 seconds.

- Asynchronous apply (The Modern Way): You start processing all 100 items at roughly the same time. If they are independent tasks (like making network requests or downloading files), you can process them concurrently. The total time will be closer to the time it takes to process the slowest single item (e.g., ~1 second, not 100 seconds).
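The difference between the two approaches can be sketched with a simulated task. This is a minimal illustration, not a benchmark: `process`, `apply_sequentially`, `apply_concurrently`, and `timed` are hypothetical names, and `asyncio.sleep(0.1)` stands in for a real I/O wait.

```python
import asyncio
import time


async def process(item: int) -> int:
    """Stand-in for an I/O-bound call: wait 0.1 s, then return a result."""
    await asyncio.sleep(0.1)
    return item * 2


async def apply_sequentially(items: list[int]) -> list[int]:
    # Each await finishes before the next call starts.
    return [await process(item) for item in items]


async def apply_concurrently(items: list[int]) -> list[int]:
    # All coroutines are scheduled together and wait at the same time.
    return list(await asyncio.gather(*(process(item) for item in items)))


def timed(coro_func, items):
    """Run an async function to completion and return (results, seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_func(items))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    items = list(range(5))
    seq_results, seq_time = timed(apply_sequentially, items)
    con_results, con_time = timed(apply_concurrently, items)
    print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

Both versions return the same results; only the waiting overlaps in the concurrent one.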

Python's standard library provides excellent tools for this. The best tool for the job depends on whether your tasks are I/O-bound (waiting for network, disk, etc.) or CPU-bound (heavy calculations).

Scenario 1: I/O-Bound Tasks (e.g., API calls, web scraping)

This is where modern async/await with asyncio truly shines. The asyncio library allows you to run many operations concurrently without using multiple threads. When an I/O-bound task is waiting (e.g., for a server to respond), asyncio can pause that task and run another one that is ready.

The modern equivalent of "apply" is asyncio.gather().

Example: Fetching Web Pages Concurrently

Let's say we want to fetch the titles of several web pages.

    import asyncio

    import aiohttp  # A popular async HTTP client/server library (third-party)


    # The async function we want to "apply" to each URL
    async def fetch_url_title(url: str, session: aiohttp.ClientSession) -> str:
        """Fetches a URL and returns its title."""
        try:
            timeout = aiohttp.ClientTimeout(total=10)
            async with session.get(url, timeout=timeout) as response:
                response.raise_for_status()  # Raise an exception for bad status codes
                html = await response.text()
                # A simple way to get the title (not robust, but for demonstration)
                start = html.find("<title>")
                end = html.find("</title>")
                if start == -1 or end == -1:
                    return f"{url}: (no title found)"
                title = html[start + len("<title>"):end].strip()
                return f"{url}: {title}"
        except Exception as e:
            return f"{url}: Error - {e}"


    # The "apply async" function using asyncio.gather
    async def apply_async_fetch(urls: list[str]) -> list[str]:
        """Applies fetch_url_title to a list of URLs concurrently."""
        async with aiohttp.ClientSession() as session:
            # Create one coroutine per URL
            coros = [fetch_url_title(url, session) for url in urls]
            # asyncio.gather runs them concurrently, waits for all of them,
            # and returns the results in the same order as the inputs
            results = await asyncio.gather(*coros)
            return results


    # --- How to run it ---
    if __name__ == "__main__":
        urls_to_fetch = [
            "https://www.python.org",
            "https://github.com",
            "https://www.wikipedia.org",
            "https://httpbin.org/delay/2",  # This one will artificially take 2 seconds
        ]
        # For modern Python (3.7+), you can use asyncio.run()
        print("Starting concurrent fetches...")
        titles = asyncio.run(apply_async_fetch(urls_to_fetch))
        for title in titles:
            print(title)
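When the list of URLs is long, starting every request at once may overwhelm the remote server or your own connection limits. One common way to cap the number of calls in flight is an `asyncio.Semaphore`. The sketch below is an illustration under assumptions: `fetch_with_limit`, `apply_bounded`, and the `stats` dictionary are hypothetical, and `asyncio.sleep` stands in for the network call.

```python
import asyncio


async def fetch_with_limit(item: int, limiter: asyncio.Semaphore, stats: dict) -> int:
    # Only `limit` coroutines can be inside this block at the same time.
    async with limiter:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # stand-in for a network call
        stats["active"] -= 1
        return item * item


async def apply_bounded(items: list[int], limit: int = 3) -> tuple[list[int], int]:
    """Apply fetch_with_limit to every item, at most `limit` at a time."""
    limiter = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}  # tracks how many calls overlap
    results = await asyncio.gather(
        *(fetch_with_limit(item, limiter, stats) for item in items)
    )
    return list(results), stats["peak"]


if __name__ == "__main__":
    squares, peak = asyncio.run(apply_bounded(list(range(10)), limit=3))
    print(squares, "peak concurrency:", peak)
```

The results still come back in input order; the semaphore only controls how many calls overlap.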

Why asyncio.gather() is the right tool here:

- Conciseness: It's the idiomatic way to run a list of async functions.

- Efficiency: It doesn't create new threads for each task, avoiding the overhead of thread management.

- Readability: The code clearly expresses the intent: "gather results from all these async operations."
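One detail worth knowing: by default, the first exception raised by any coroutine propagates out of `asyncio.gather()`. Passing `return_exceptions=True` returns the exception object in the failing call's slot instead, so the other results are still available. A minimal sketch, where `parse_positive` and `apply_collecting_errors` are hypothetical names used only for illustration:

```python
import asyncio


async def parse_positive(value: int) -> int:
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    if value < 0:
        raise ValueError(f"negative input: {value}")
    return value * 10


async def apply_collecting_errors(values: list[int]) -> list:
    # With return_exceptions=True, a raised exception is returned in the
    # failing call's position instead of propagating out of gather().
    return await asyncio.gather(
        *(parse_positive(v) for v in values), return_exceptions=True
    )


if __name__ == "__main__":
    for outcome in asyncio.run(apply_collecting_errors([1, -2, 3])):
        if isinstance(outcome, Exception):
            print("failed:", outcome)
        else:
            print("ok:", outcome)
```

This is an alternative to catching exceptions inside each coroutine, as the fetch example above does.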

Scenario 2: CPU-Bound Tasks (e.g., complex math, data processing)

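If your task involves heavy calculations, asyncio won't help: the event loop runs on a single thread, so a long computation blocks every other coroutine until it finishes. Threads don't help much either, because in standard CPython builds the Global Interpreter Lock (GIL) prevents multiple threads from executing Python bytecode at the same time. For CPU-bound tasks you need true parallelism, which the standard library provides through the concurrent.futures module and its ProcessPoolExecutor.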

This uses multiple processes, each with its own Python interpreter and memory space, allowing you to bypass the GIL and achieve true parallelism.

Example: Calculating Factorials

    import concurrent.futures
    import math
    import time
    from typing import Optional


    # The function we want to "apply" to each number
    def calculate_factorial(n: int) -> int:
        """Calculates the factorial of a number (a CPU-bound task)."""
        # print(f"Calculating factorial for {n}...")
        result = math.factorial(n)
        # print(f"Finished factorial for {n}.")
        return result


    # The "apply async" function using a ProcessPoolExecutor
    def apply_async_factorial(numbers: list[int], max_workers: Optional[int] = None) -> list[int]:
        """Applies calculate_factorial to a list of numbers in parallel."""
        # We use a context manager to ensure processes are cleaned up
        with concurrent.futures.ProcessPoolExecutor(max_workers=max_workers) as executor:
            # executor.map() is the closest equivalent of a functional 'apply':
            # it applies the function to every item in the iterable.
            # It returns an iterator (in input order), so we convert it to a
            # list to get all results.
            results = list(executor.map(calculate_factorial, numbers))
        return results


    # --- How to run it ---
    if __name__ == "__main__":
        # Note: On Windows/macOS (where worker processes are spawned), you need
        # this `if __name__ == "__main__":` guard to protect code that creates
        # new processes from running again when the module is imported.
        numbers_to_process = [20000, 25000, 30000, 35000, 40000]
        print("Starting parallel factorial calculations...")

        # Measure the time
        start_time = time.perf_counter()
        factorials = apply_async_factorial(numbers_to_process)
        end_time = time.perf_counter()

        print(f"\nCalculated factorials for: {numbers_to_process}")
        # Note: on Python 3.11+, str() of integers this large raises ValueError
        # unless the int-to-str digit limit is raised first.
        # print(f"Results (first 10 digits): {[str(f)[:10] for f in factorials]}")
        print(f"Total time taken: {end_time - start_time:.4f} seconds")

Why ProcessPoolExecutor.map() is the right tool here:

- Parallelism: It uses multiple CPU cores, providing a significant speedup for CPU-bound tasks.

- Simplicity: The map() function is a direct and intuitive replacement for a synchronous loop.

- Robustness: It handles the creation and management of worker processes for you.
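When the iterable is long and each call is cheap, the cost of sending items to worker processes one by one can outweigh the gain from parallelism. `executor.map()` accepts a `chunksize` argument that sends items to workers in batches. The sketch below is one possible illustration: `is_prime` and `count_primes_parallel` are hypothetical helpers, and the chunk size of 1000 is an arbitrary starting point rather than a tuned value.

```python
from concurrent.futures import ProcessPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division; deliberately simple CPU-bound work."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 2
    return True


def count_primes_parallel(numbers: range, chunksize: int = 1000) -> int:
    # chunksize batches the inputs so each worker receives many numbers per
    # round trip instead of one.
    with ProcessPoolExecutor() as executor:
        return sum(executor.map(is_prime, numbers, chunksize=chunksize))


if __name__ == "__main__":
    print(count_primes_parallel(range(100_000)))
```

Whether batching helps depends on how expensive each call is, so it is worth measuring with your own workload.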

Summary: Which One Should You Use?

| Feature | asyncio + gather() | concurrent.futures + ProcessPoolExecutor |
|---|---|---|
| Best For | I/O-Bound tasks (network, disk, database). | CPU-Bound tasks (math, data crunching). |
| Mechanism | Concurrency (single-threaded, cooperative multitasking). | Parallelism (multi-process, true parallel execution). |
| Key Concept | async/await coroutines. | Threads/Processes managed by an executor. |
| Main Function | asyncio.gather() | executor.map() or executor.submit() |
| Overhead | Low. No new OS processes are created. | High. Creating processes is expensive. |
| Example Use | Fetching data from 100 different APIs. | Processing 10,000 rows of data with a complex formula. |

Bonus: The "Classic" ThreadPoolExecutor

For completeness, there's also ThreadPoolExecutor. It's similar to ProcessPoolExecutor but uses threads instead of processes. It's useful for I/O-bound tasks if you have code that is not async-compatible (e.g., many older libraries): a thread releases the GIL while it waits on blocking I/O, so other threads can run in the meantime. Threads share memory, so they have less overhead than processes, but they are still subject to the GIL while executing Python code and are not suitable for CPU-bound tasks.

    # Example for a blocking I/O task with a thread pool
    import concurrent.futures

    import requests  # A classic, blocking HTTP library (third-party)


    def fetch_url_blocking(url: str) -> str:
        try:
            response = requests.get(url, timeout=10)
            return f"{url}: Status {response.status_code}"
        except Exception as e:
            return f"{url}: Error - {e}"


    def apply_async_threaded(urls: list[str]) -> list[str]:
        # Up to 5 requests are in flight at once; results keep input order
        with concurrent.futures.ThreadPoolExecutor(max_workers=5) as executor:
            results = list(executor.map(fetch_url_blocking, urls))
        return results
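The summary table also mentions `executor.submit()`. Unlike `map()`, which yields results in input order, `submit()` returns a Future for each call, and `concurrent.futures.as_completed()` lets you handle each result as soon as it is ready. A minimal sketch, assuming a hypothetical `slow_lookup` function in which `time.sleep` stands in for blocking I/O:

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_lookup(key: str, delay: float) -> str:
    """Stand-in for a blocking call such as a database query."""
    time.sleep(delay)
    return key.upper()


def apply_as_completed(jobs: dict[str, float]) -> list[str]:
    """Run slow_lookup for every (key, delay) pair; return results in finish order."""
    finished = []
    with ThreadPoolExecutor(max_workers=5) as executor:
        # submit() returns a Future immediately for each call.
        futures = [executor.submit(slow_lookup, key, delay)
                   for key, delay in jobs.items()]
        # as_completed() yields futures in finish order, not submit order.
        for future in as_completed(futures):
            finished.append(future.result())
    return finished


if __name__ == "__main__":
    print(apply_as_completed({"slow": 0.3, "fast": 0.01}))
```

This pattern is handy when you want to display or store each result immediately instead of waiting for the slowest call.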

Final Recommendation

- For I/O-Bound Tasks: Use asyncio with aiohttp and asyncio.gather(). It's the most modern, efficient, and scalable solution.

- For CPU-Bound Tasks: Use concurrent.futures.ProcessPoolExecutor with its map() method. It's the standard way to achieve parallelism in Python.

- For Blocking I/O in Legacy Code: Use concurrent.futures.ThreadPoolExecutor as a bridge to run old, blocking code in a concurrent manner.
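If the rest of your program is already async, the thread-based bridge can also be driven from asyncio itself. On Python 3.9+, `asyncio.to_thread()` runs a blocking function in a worker thread and returns an awaitable, so it combines with `asyncio.gather()`. The sketch below assumes a hypothetical `blocking_read` function, with `time.sleep` standing in for legacy blocking code:

```python
import asyncio
import time


def blocking_read(name: str) -> str:
    """Stand-in for legacy blocking code (e.g. a synchronous client)."""
    time.sleep(0.1)
    return f"{name}: done"


async def apply_in_threads(names: list[str]) -> list[str]:
    # asyncio.to_thread runs each call in a worker thread and returns an
    # awaitable, so gather() can wait on all of them together.
    return list(await asyncio.gather(
        *(asyncio.to_thread(blocking_read, name) for name in names)
    ))


if __name__ == "__main__":
    print(asyncio.run(apply_in_threads(["a", "b", "c"])))
```

This keeps the event loop responsive while the blocking calls run in threads.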