Of course! The term "parse string" in Python is very broad. It can mean anything from simply extracting a piece of text to converting a string into a complex data structure.

Let's break it down from the simplest to the most common and advanced scenarios.

What is "Parsing"?

In programming, parsing means to analyze a string of data to understand its structure and extract meaningful information from it. Think of it like reading a sentence and identifying the subject, verb, and object.

The Simplest Case: Extracting Substrings (Slicing)

If your goal is to get a specific part of a string, you can use slicing. This is the most fundamental form of "parsing."

Python strings are sequences, so you can access them with indices.

my_string = "Hello, Python World!"

# Get the first 5 characters
print(f"First 5 chars: '{my_string[0:5]}'")  # Output: 'Hello'

# Get everything from index 7 to the end
print(f"From index 7: '{my_string[7:]}'")  # Output: 'Python World!'

# Get the last 6 characters
print(f"Last 6 chars: '{my_string[-6:]}'")  # Output: 'World!'

# Get every second character (indices 0, 2, 4, ...), spaces included
print(f"Every 2nd char: '{my_string[::2]}'")  # Output: 'Hlo yhnWrd'

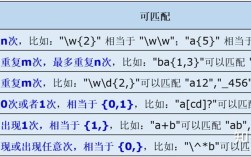
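Slicing works best when the fields sit at known, fixed positions. Here is a minimal sketch, assuming a hypothetical fixed-width record (the layout and the `record` value are invented for illustration):

```python
# Hypothetical layout: 10-char date, 1 space, 5-char level, 1 space, then the message.
record = "2025-10-27 ERROR Disk quota exceeded"

# Named slice objects document the layout and can be reused for every record.
DATE = slice(0, 10)
LEVEL = slice(11, 16)
MESSAGE = slice(17, None)

date = record[DATE]
level = record[LEVEL].strip()  # strip() drops padding on shorter levels such as "INFO "
message = record[MESSAGE]

print(date, level, message, sep=" | ")
```

If the field widths can vary, slicing stops being reliable and one of the approaches below is a better fit.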

Finding Text with Patterns: Regular Expressions (re module)

When you need to find patterns of text (like an email address, a phone number, or a specific word), Python's built-in re module is your most powerful tool.

For free-form text with a recognizable shape, it is usually the most flexible way to parse strings.

Example: Extracting an Email

Let's say you have a block of text and you want to find all email addresses in it.

import re

text = """
Contact us at support@example.com for help.
Sales can be reached at sales@my-company.co.uk.
For personal matters, try user.name+alias@sub.domain.io.
Invalid email: user@.com
"""

# The pattern looks for: one or more word characters, dots, plus signs, or hyphens;
# an @; one or more word characters, dots, or hyphens; a literal dot; and one or
# more word characters. It is a simplification, not a full email validator.
pattern = r"[\w.+-]+@[\w.-]+\.\w+"

# re.findall() returns a list of all non-overlapping matches
emails = re.findall(pattern, text)
print(emails)
# Output: ['support@example.com', 'sales@my-company.co.uk', 'user.name+alias@sub.domain.io']

# To get more details about the match, use re.finditer()
for match in re.finditer(pattern, text):
    print(f"Found email: {match.group()} at position {match.span()}")
# Output:
# Found email: support@example.com at position (15, 34)
# Found email: sales@my-company.co.uk at position (69, 91)
# Found email: user.name+alias@sub.domain.io at position (119, 148)

Common re functions:

- re.findall(pattern, string): Finds all matches and returns them as a list.
- re.search(pattern, string): Finds the first match and returns a match object (or None).
- re.match(pattern, string): Only checks for a match at the beginning of the string.
- re.split(pattern, string): Splits the string by the matches and returns a list.
- re.sub(pattern, replacement, string): Replaces all matches with the replacement string.
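To see how these functions differ in practice, here is a short sketch run against a single made-up log line (the `line` value and its `code=` field are assumptions for illustration only):

```python
import re

line = "2025-10-27 10:00:00 ERROR code=504 path=/api/users"

# re.search scans the whole string; a named group makes the result self-describing.
m = re.search(r"code=(?P<code>\d+)", line)
code = int(m.group("code")) if m else None

# re.match only succeeds if the pattern matches at position 0.
starts_with_date = re.match(r"\d{4}-\d{2}-\d{2}", line) is not None
code_at_start = re.match(r"code=\d+", line) is not None

# re.split cuts the string on every run of whitespace.
fields = re.split(r"\s+", line)

# re.sub replaces every match; here each digit is masked.
masked = re.sub(r"\d", "#", "call 555-0100")

print(code, starts_with_date, code_at_start)
print(fields)
print(masked)
```

Note that `re.search` and `re.match` return None when nothing matches, so it is worth checking the result before calling `.group()`.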

Parsing Structured Data Formats

This is a very common task. You have a string that represents a specific data format (like JSON, CSV, or XML) and you want to convert it into a native Python object (like a dictionary or a list).

Example: Parsing a JSON String

The json module is perfect for this. It's used extensively in web APIs.

import json

# A string that represents a JSON object
json_string = '{"name": "Alice", "age": 30, "is_student": false, "courses": ["History", "Math"]}'

# json.loads() (load string) converts the JSON string into a Python dictionary
data = json.loads(json_string)

print(f"Type of parsed data: {type(data)}")
# Output: Type of parsed data: <class 'dict'>

print(f"Name: {data['name']}")
# Output: Name: Alice

print(f"First course: {data['courses'][0]}")
# Output: First course: History

# To convert a Python object back to a JSON string, use json.dumps()
new_json_string = json.dumps(data, indent=2)
print("\nConverted back to JSON:")
print(new_json_string)
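Real-world input is not always valid JSON, and `json.loads()` raises `json.JSONDecodeError` when it is not. A minimal sketch of handling that case follows; the helper name `parse_json_or_none` is hypothetical, and returning None is just one possible policy:

```python
import json

def parse_json_or_none(raw):
    """Return the decoded value, or None if `raw` is not valid JSON."""
    try:
        return json.loads(raw)
    except json.JSONDecodeError as err:
        # lineno, colno, and msg describe where and why decoding failed.
        print(f"Invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}")
        return None

good = parse_json_or_none('{"name": "Alice", "is_student": false}')
bad = parse_json_or_none("{'name': 'Alice'}")  # single quotes are not valid JSON

print(good)
print(bad)
```

The first call also shows the type mapping at work: the JSON literal `false` comes back as Python's `False`.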

Example: Parsing a CSV String

The csv module is great for handling comma-separated values.

import csv
from io import StringIO  # Needed to treat a string as a file

csv_string = """name,age,city
Charlie,25,New York
Diana,34,London
Eve,29,Tokyo
"""

# Use csv.reader to parse the string
csv_reader = csv.reader(StringIO(csv_string))

# Read the header row so the loop below only sees data rows
header = next(csv_reader)
print(f"Header: {header}")

# Process each row
people = []
for row in csv_reader:
    people.append({"name": row[0], "age": int(row[1]), "city": row[2]})

print("\nParsed data as a list of dictionaries:")
print(people)
# Output:
# [{'name': 'Charlie', 'age': 25, 'city': 'New York'}, ...]
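If you would rather not index columns by position, `csv.DictReader` uses the header row as keys. A small sketch with the same sample data:

```python
import csv
from io import StringIO

csv_string = """name,age,city
Charlie,25,New York
Diana,34,London
Eve,29,Tokyo
"""

# DictReader takes the first row as field names, so no manual header handling is needed.
reader = csv.DictReader(StringIO(csv_string))

people = []
for row in reader:
    row["age"] = int(row["age"])  # every CSV value arrives as a string
    people.append(row)

print(people[0]["name"], people[0]["age"], people[0]["city"])
```

This keeps the code working if columns are reordered, since each value is looked up by name rather than by index.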

Parsing with String Methods

For simple, predictable strings, you can use built-in string methods.

Example: Splitting a String by a Delimiter

Let's say you have a log entry and want to extract the timestamp and the message.

log_entry = "[2025-10-27 10:00:00] User logged in successfully"

# Split on "] ", the closing bracket and space that follow the timestamp
parts = log_entry.split("] ")
print(f"Parts after split: {parts}")
# Output: Parts after split: ['[2025-10-27 10:00:00', 'User logged in successfully']

timestamp = parts[0].strip("[]")  # Remove the brackets
message = parts[1]

print(f"Timestamp: {timestamp}")
# Output: Timestamp: 2025-10-27 10:00:00

print(f"Message: {message}")
# Output: Message: User logged in successfully

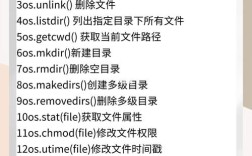

Other useful string methods:

- .find("text"): Returns the index of the first occurrence of "text", or -1 if not found.
- .index("text"): Similar to .find() but raises a ValueError if not found.
- .startswith("prefix") / .endswith("suffix"): Checks the beginning/end of a string.
- .strip(): Removes leading/trailing whitespace.
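These methods combine well. The sketch below revisits the log entry from earlier and uses `str.partition`, which is not in the list above but is a built-in alternative to `.split()` when you only want to cut at the first delimiter:

```python
log_entry = "[2025-10-27 10:00:00] User logged in successfully"

# partition() splits on the first occurrence only and always returns three parts,
# so a "] " later in the message cannot produce extra pieces.
head, sep, message = log_entry.partition("] ")
timestamp = head.lstrip("[")

# find() returns -1 instead of raising, which suits optional markers.
has_user = log_entry.find("User") != -1

# startswith() plus slicing removes a known prefix.
prefix = "User "
action = message[len(prefix):] if message.startswith(prefix) else message

print(timestamp, has_user, action, sep=" | ")
```

If the delimiter is missing, `partition` returns the whole string as the first part and two empty strings, so checking `sep` tells you whether the split actually happened.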

Advanced Parsing: Using a Third-Party Library

For very complex formats like HTML or XML, using a dedicated library is much more robust than regular expressions.

Example: Parsing HTML with BeautifulSoup

First, you need to install it:

pip install beautifulsoup4

BeautifulSoup can parse HTML even if it's not perfectly formatted, which is a huge advantage over re.

from bs4 import BeautifulSoup

html_string = """
<html>
<head><title>A Test Page</title></head>
<body>
<h1>Welcome!</h1>
<p class="main">This is the first paragraph.</p>
<p class="main">This is the second paragraph.</p>
<a href="https://example.com">Link to Example</a>
</body>
</html>
"""

# Create a BeautifulSoup object
soup = BeautifulSoup(html_string, 'html.parser')

# Find the title tag
title_tag = soup.find('title')
print(f"Page Title: {title_tag.string}")  # Output: Page Title: A Test Page

# Find all paragraph tags
all_paragraphs = soup.find_all('p')
print(f"\nFound {len(all_paragraphs)} paragraphs:")
for p in all_paragraphs:
    print(f"- {p.string}")

# Find a specific element by its class
first_main_paragraph = soup.find('p', class_='main')
print(f"\nFirst main paragraph: {first_main_paragraph.string}")

# Find an element by its attribute
link_tag = soup.find('a', href=True)
print(f"\nLink text: '{link_tag.string}', URL: '{link_tag['href']}'")
# Output: Link text: 'Link to Example', URL: 'https://example.com'
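For XML specifically, the standard library's `xml.etree.ElementTree` is often enough and needs no install. The sketch below assumes a hypothetical `<catalog>` document invented for illustration. Unlike BeautifulSoup, ElementTree expects well-formed input and raises a `ParseError` otherwise:

```python
import xml.etree.ElementTree as ET

# Hypothetical XML payload, made up for this example.
xml_string = """
<catalog>
  <book id="b1"><title>Dune</title><price>9.99</price></book>
  <book id="b2"><title>Emma</title><price>4.50</price></book>
</catalog>
"""

# fromstring() parses the text and returns the root element.
root = ET.fromstring(xml_string.strip())

books = [
    {
        "id": book.get("id"),                     # attribute lookup
        "title": book.findtext("title"),          # text of a child element
        "price": float(book.findtext("price")),   # values arrive as strings
    }
    for book in root.findall("book")
]

print(books)
```

The Python documentation advises caution when parsing XML from untrusted sources with this module, so treat the sketch as suitable for data you control.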

Summary: Which Method to Choose?

| Your Goal | Recommended Method / Module | Why? |
|---|---|---|
| Get a fixed part of a string (e.g., first 5 chars). | String Slicing (my_string[0:5]) | Simple, fast, and built-in. |
| Find a pattern (email, phone number, custom rule). | Regular Expressions (re module) | Extremely powerful and flexible for pattern matching. |
| Parse a string that is JSON data. | json module (json.loads()) | The standard, safe, and easy way to handle JSON. |
| Parse a string that is CSV data. | csv module (csv.reader) | The standard way to handle comma-separated values. |
| Parse a string that is HTML/XML. | Third-Party Library (e.g., BeautifulSoup) | Much more robust and easier than regex for complex, real-world HTML. |
| Extract text based on a simple delimiter. | String Methods (.split(), .find(), .strip()) | Quick and easy for simple, predictable formats. |