Of course! Here is a comprehensive guide to using the urllib2 API in Python.

Important Note: urllib2 vs. urllib in Python 3

First, a crucial point for modern Python users:

- In Python 2: The library was called urllib2.
- In Python 3: The urllib2 module was merged into the reorganized urllib package. The functionality covered here is split across three modules:
  - urllib.request: For opening and reading URLs (the direct replacement for urllib2).
  - urllib.error: Contains exception classes for errors raised by urllib.request.
  - urllib.parse: For parsing and encoding URLs (in Python 2 this lived in the urlparse module, plus urlencode and the quoting functions in urllib).
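Code that has to run on both interpreters can hide the module split behind a guarded import. The following is a minimal sketch, assuming the script only needs urlopen, Request, urlencode, and the two exception classes; the example URL is a placeholder.

```python
# Compatibility import: try the Python 3 locations first, then fall back to Python 2.
try:
    from urllib.request import Request, urlopen
    from urllib.error import HTTPError, URLError
    from urllib.parse import urlencode
except ImportError:  # Python 2
    from urllib2 import Request, urlopen, HTTPError, URLError
    from urllib import urlencode

# The rest of the script can now use the same names on either version.
# Building a Request does not touch the network.
req = Request("http://www.example.com")
print(req.get_method())
```

The Python 3 names are tried first because that is the more likely interpreter today; the fallback branch only runs when urllib.request cannot be imported.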

This guide will focus on the Python 2 urllib2 API, as that's what your question specifically asked for. I will include a "Python 3 Equivalent" section for each major concept.

Core Concepts of urllib2

urllib2 is a standard-library module for fetching URLs. Its main components are:

- urllib2.urlopen(url[, data[, timeout]]): The primary function for opening a URL. It returns a file-like object.
- urllib2.Request: A class that represents a request to a URL. It allows you to add headers, set data, and use different HTTP methods.
- urllib2.build_opener([handler, ...]): A function to create an "opener" object, which is more advanced than urlopen. It allows you to install handlers for things such as cookies, HTTP authentication, and proxies.
- urllib2.install_opener(opener): Installs the opener you built as the default opener for the module.
- urllib2.HTTPError & urllib2.URLError: Exception classes for handling network and HTTP errors.
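The components listed above can be tried without a network connection. The sketch below is written against Python 3's urllib.request (not urllib2) so that it can use a data: URL, which urlopen has supported since Python 3.4; the header value "demo/0.1" and the inline payload are illustrative assumptions.

```python
from urllib.request import Request, build_opener, urlopen

# A Request only describes what to fetch; nothing is sent until it is opened.
req = Request("http://www.example.com", headers={"User-Agent": "demo/0.1"})
method = req.get_method()   # "GET" because no data is attached
host = req.host             # host part parsed from the URL

# build_opener() returns an OpenerDirector whose open() behaves like urlopen().
opener = build_opener()

# A data: URL carries its payload inline, so this read needs no network access.
with urlopen("data:text/plain;charset=utf-8,hello%20urllib") as response:
    first_chunk = response.read(5)   # read a fixed number of bytes
    rest = response.read()           # read whatever remains

text = (first_chunk + rest).decode("utf-8")
print(method, host, text)
```

Reading in two steps shows the file-like behaviour: each read() call continues from where the previous one stopped.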

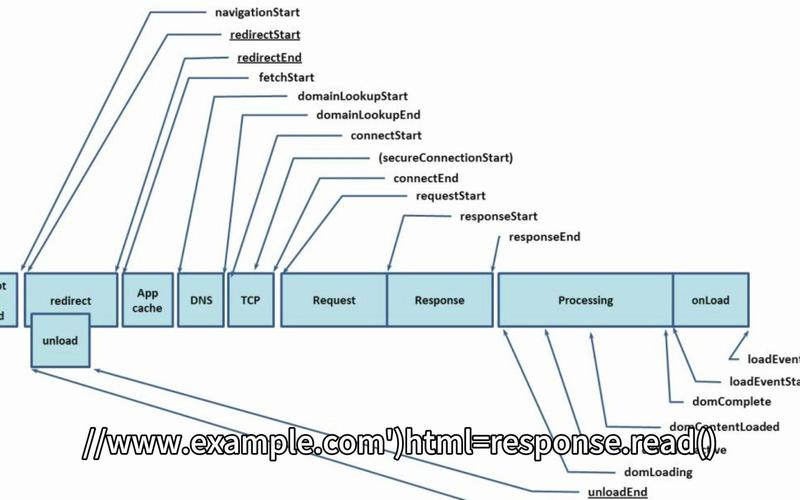

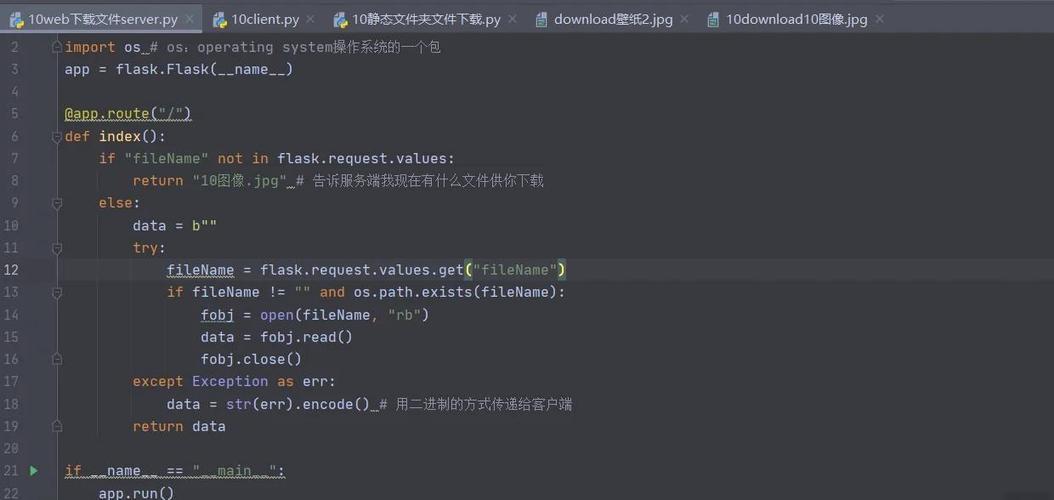

Basic URL Fetching (urlopen)

This is the simplest way to get the content of a webpage. urlopen returns a file-like object, so you can read from it just like a file.

    import urllib2

    try:
        # The URL you want to open
        url = "http://www.example.com"

        # Open the URL
        response = urllib2.urlopen(url)

        # Get the HTTP response status code (e.g., 200 for OK)
        print "Response Status Code:", response.getcode()

        # Read the content of the response
        # .read() returns the entire content as a string
        html_content = response.read()

        # Print the first 200 characters of the HTML
        print "Content (first 200 chars):", html_content[:200]

        # It's good practice to close the response
        response.close()

    # HTTPError is a subclass of URLError, so it must be caught first
    except urllib2.HTTPError as e:
        print "The server couldn't fulfill the request."
        print "Error code:", e.code
        print "Page:", e.read()  # You can read the error page's content

    except urllib2.URLError as e:
        print "Failed to reach a server."
        print "Reason:", e.reason

Python 3 Equivalent:

    # In Python 3, you use urllib.request
    from urllib.request import urlopen
    from urllib.error import URLError, HTTPError

    try:
        url = "http://www.example.com"

        with urlopen(url) as response:  # The 'with' statement handles closing
            html_content = response.read()
            print("Response Status Code:", response.status)
            # Decode bytes to string
            print("Content (first 200 chars):", html_content[:200].decode('utf-8'))

    # HTTPError is a subclass of URLError, so it must be caught first
    except HTTPError as e:
        print("The server couldn't fulfill the request.")
        print("Error code:", e.code)
        print("Page:", e.read().decode('utf-8'))

    except URLError as e:
        print("Failed to reach a server.")
        print("Reason:", e.reason)
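One detail worth knowing when catching these exceptions: HTTPError is a subclass of URLError, so an except clause for URLError also catches HTTP errors, and the more specific handler has to come first to be reached. The sketch below shows this in Python 3 using hand-built exception objects in place of real network failures; the describe helper, the URL, and the messages are illustrative assumptions.

```python
import io
from email.message import Message
from urllib.error import HTTPError, URLError


def describe(exc):
    """Re-raise exc and report which handler catches it."""
    try:
        raise exc
    except HTTPError as e:   # more specific class first
        return "HTTP error %d" % e.code
    except URLError as e:    # other URL failures, e.g. DNS or connection errors
        return "URL error: %s" % e.reason


# Hand-built exceptions stand in for real network failures (illustrative values).
http_exc = HTTPError("http://www.example.com/missing", 404, "Not Found",
                     Message(), io.BytesIO(b""))
url_exc = URLError("connection refused")

print(describe(http_exc))
print(describe(url_exc))
```

If the two except clauses were swapped, the 404 would be reported by the URLError branch and the HTTPError branch would never run.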

Adding Headers to a Request

Many websites require specific headers, like a User-Agent, to allow requests from scripts.

You must create a Request object to add headers.

    import urllib2

    url = "http://www.example.com"

    # Create a Request object
    req = urllib2.Request(url)

    # Add a User-Agent header
    # This makes the request look like it's coming from a standard browser
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36')

    # Add another header, e.g., for Accept-Language
    req.add_header('Accept-Language', 'en-US,en;q=0.9')

    # Now open the Request object, not the URL directly
    response = urllib2.urlopen(req)
    html_content = response.read()

    print "User-Agent header added successfully."
    print "Content (first 200 chars):", html_content[:200]

    response.close()

Python 3 Equivalent:

    from urllib.request import Request, urlopen

    url = "http://www.example.com"

    req = Request(url)
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36')

    with urlopen(req) as response:
        html_content = response.read()
        print("User-Agent header added successfully.")
        print("Content (first 200 chars):", html_content[:200].decode('utf-8'))
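Headers can be checked on the Request object before anything is sent, which is a quick way to confirm what a script will transmit. This is a small Python 3 sketch; the header values are placeholders, and it relies on the fact that Request stores header names in str.capitalize() form.

```python
from urllib.request import Request

url = "http://www.example.com"

# Headers can be supplied up front as a dict, then extended with add_header().
req = Request(url, headers={"User-Agent": "MyCoolScript/1.0"})
req.add_header("Accept-Language", "en-US,en;q=0.9")

# Request stores header names via str.capitalize(), so look them up in that form.
user_agent = req.get_header("User-agent")
has_lang = req.has_header("Accept-language")

for name, value in sorted(req.header_items()):
    print(name, "=", value)
```

Looking up "User-Agent" with its original capitalization returns None from get_header, which is a common source of confusion when debugging.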

Sending Data (POST Request)

To send data to a server (like a form submission), pass it as the data argument to urlopen or to Request. Form data is normally encoded as application/x-www-form-urlencoded, and the urllib.urlencode function produces that format from a dictionary.

    import urllib2
    import urllib  # Note: we need the 'urllib' module for urlencode

    url = "http://www.example.com/submit-form"

    # The data to be sent, as a dictionary
    form_data = {
        'username': 'john_doe',
        'password': 'secret123'
    }

    # Encode the data into the correct format
    # e.g., "username=john_doe&password=secret123"
    encoded_data = urllib.urlencode(form_data)

    # Note: when data is attached to a Request, urlopen sends it as a POST
    req = urllib2.Request(url, data=encoded_data)

    # You can still add headers
    req.add_header('User-Agent', 'MyCoolScript/1.0')

    try:
        response = urllib2.urlopen(req)
        response_content = response.read()
        print "POST request successful."
        print "Response:", response_content[:200]
        response.close()
    except urllib2.HTTPError as e:
        print "Error during POST:", e.code, e.reason

Python 3 Equivalent:

    from urllib.request import Request, urlopen
    from urllib.error import HTTPError
    from urllib.parse import urlencode  # urlencode is in urllib.parse in Python 3

    url = "http://www.example.com/submit-form"

    form_data = {
        'username': 'john_doe',
        'password': 'secret123'
    }

    # Data must be bytes in Python 3
    encoded_data = urlencode(form_data).encode('utf-8')

    # Explicitly setting method is good practice
    req = Request(url, data=encoded_data, method='POST')
    req.add_header('User-Agent', 'MyCoolScript/1.0')

    try:
        with urlopen(req) as response:
            response_content = response.read()
            print("POST request successful.")
            print("Response:", response_content[:200].decode('utf-8'))
    except HTTPError as e:
        print("Error during POST:", e.code, e.reason)
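The encoding step and the switch from GET to POST can both be observed without contacting a server. The Python 3 sketch below assumes a hypothetical "note" field containing a space and an ampersand to show how special characters are escaped.

```python
from urllib.parse import urlencode
from urllib.request import Request

url = "http://www.example.com/submit-form"
form_data = {"username": "john_doe", "note": "a b&c"}

# urlencode() joins key=value pairs with '&' and percent-encodes unsafe characters.
query = urlencode(form_data)
body = query.encode("utf-8")        # Python 3 requires the request body as bytes

get_req = Request(url)              # no data   -> GET
post_req = Request(url, data=body)  # with data -> POST

print(query)
print(get_req.get_method(), post_req.get_method())
```

Because the method is derived from whether data is present, a request with an empty form still needs a non-None body (for example b"") or an explicit method argument to be sent as POST.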

Handling Cookies

urllib2 has built-in support for cookies. The HTTPCookieProcessor handler manages them.

    import urllib2
    import cookielib  # The module for cookie handling

    # Create a cookie jar to store cookies
    cookie_jar = cookielib.CookieJar()

    # Create an opener that will handle cookies for us
    opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cookie_jar))

    # Install this opener as the default opener for the module
    urllib2.install_opener(opener)

    # Now, any request made with urlopen will automatically handle cookies
    url = "http://www.example.com/login-page"  # A hypothetical login page
    response = urllib2.urlopen(url)
    print "Visited login page. Cookies received:", cookie_jar

    # Now visit another page that requires a session
    url2 = "http://www.example.com/protected-page"
    response2 = urllib2.urlopen(url2)
    print "Visited protected page. Cookies are now in the jar."
    print "Content:", response2.read()[:200]

    response.close()
    response2.close()

Python 3 Equivalent:

    from urllib.request import HTTPCookieProcessor, build_opener, install_opener, urlopen
    from urllib.error import HTTPError
    from http.cookiejar import CookieJar  # CookieJar is in http.cookiejar

    cookie_jar = CookieJar()
    opener = build_opener(HTTPCookieProcessor(cookie_jar))
    install_opener(opener)

    url = "http://www.example.com/login-page"
    url2 = "http://www.example.com/protected-page"

    try:
        with urlopen(url) as response:
            print("Visited login page. Cookies received:", cookie_jar)

        with urlopen(url2) as response2:
            print("Visited protected page. Cookies are now in the jar.")
            print("Content:", response2.read()[:200].decode('utf-8'))
    except HTTPError as e:
        print("Error accessing protected page:", e.code, e.reason)
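install_opener changes the behaviour of every later urlopen call in the process. When that is not wanted, the opener can be kept private and used through its own open() method. The Python 3 sketch below only builds and inspects the opener, so it stays offline; no request is made and the jar therefore remains empty.

```python
from http.cookiejar import CookieJar
from urllib.request import HTTPCookieProcessor, build_opener

cookie_jar = CookieJar()
opener = build_opener(HTTPCookieProcessor(cookie_jar))

# opener.open(url) would be used in place of urlopen(url); it is not called
# here so the sketch stays offline.
handler_names = [type(h).__name__ for h in opener.handlers]
print("HTTPCookieProcessor" in handler_names)

# A CookieJar is iterable; each item is a Cookie with name, value, and domain.
for cookie in cookie_jar:
    print(cookie.name, cookie.value, cookie.domain)
print("cookies stored so far:", len(cookie_jar))
```

Keeping the opener local also makes it possible to use several independent cookie jars in one program, for example one per logged-in account.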

Handling HTTP Authentication (Basic Auth)

For websites that require username/password authentication, you can use HTTPBasicAuthHandler.

    import urllib2

    # The URL that requires authentication
    url = "http://www.example.com/protected"

    # Create a password manager
    password_mgr = urllib2.HTTPPasswordMgrWithDefaultRealm()

    # Add the username and password for the URL
    # The realm can be None if you don't know it
    top_level_url = "http://www.example.com/"
    username = "user"
    password = "pass"
    password_mgr.add_password(None, top_level_url, username, password)

    # Create the handler for basic authentication
    auth_handler = urllib2.HTTPBasicAuthHandler(password_mgr)

    # Build an opener with this handler
    opener = urllib2.build_opener(auth_handler)

    # Install the opener
    urllib2.install_opener(opener)

    # Now, when the server answers with a 401 challenge, the handler
    # retries the request with the stored credentials
    try:
        response = urllib2.urlopen(url)
        print "Authentication successful."
        print "Content:", response.read()[:200]
        response.close()
    except urllib2.HTTPError as e:
        print "Authentication failed. Error:", e.code, e.reason

Python 3 Equivalent:

    from urllib.request import (
        HTTPBasicAuthHandler,
        HTTPPasswordMgrWithDefaultRealm,
        build_opener,
        install_opener,
        urlopen,
    )
    from urllib.error import HTTPError

    url = "http://www.example.com/protected"
    top_level_url = "http://www.example.com/"
    username = "user"
    password = "pass"

    password_mgr = HTTPPasswordMgrWithDefaultRealm()
    password_mgr.add_password(None, top_level_url, username, password)

    auth_handler = HTTPBasicAuthHandler(password_mgr)
    opener = build_opener(auth_handler)
    install_opener(opener)

    try:
        with urlopen(url) as response:
            print("Authentication successful.")
            print("Content:", response.read()[:200].decode('utf-8'))
    except HTTPError as e:
        print("Authentication failed. Error:", e.code, e.reason)
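The password manager's matching rules can be checked offline, which helps when credentials do not seem to be applied. The Python 3 sketch below also shows one possible way to send Basic credentials on the first request rather than waiting for a 401 challenge; the realm name "Some Realm" and the second host are hypothetical values chosen for illustration.

```python
import base64
from urllib.request import HTTPPasswordMgrWithDefaultRealm, Request

password_mgr = HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None, "http://www.example.com/", "user", "pass")

# Any realm matches because the credentials were registered with realm=None,
# and any URL under the registered prefix matches.
found = password_mgr.find_user_password("Some Realm", "http://www.example.com/protected")
missing = password_mgr.find_user_password("Some Realm", "http://other.example.org/")

# Optional: send credentials on the first request instead of waiting for a 401.
token = base64.b64encode(b"user:pass").decode("ascii")
req = Request("http://www.example.com/protected")
req.add_header("Authorization", "Basic " + token)

print(found, missing, token)
```

Basic authentication only base64-encodes the credentials, so either approach should be used over HTTPS in practice.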

Summary Table: urllib2 vs. urllib.request (Python 3)

| Task | Python 2 (urllib2) | Python 3 (urllib.request) |
|---|---|---|
| Open URL | urllib2.urlopen(url) | urllib.request.urlopen(url) |
| Create Request | urllib2.Request(url) | urllib.request.Request(url) |
| Add Header | req.add_header('Key', 'Val') | req.add_header('Key', 'Val') |
| Encode Data | urllib.urlencode(dict) | urllib.parse.urlencode(dict).encode() |
| Handle Cookies | cookielib.CookieJar, urllib2.HTTPCookieProcessor | http.cookiejar.CookieJar, urllib.request.HTTPCookieProcessor |
| Handle Auth | urllib2.HTTPBasicAuthHandler | urllib.request.HTTPBasicAuthHandler |
| Build Opener | urllib2.build_opener(handler) | urllib.request.build_opener(handler) |
| Install Opener | urllib2.install_opener(opener) | urllib.request.install_opener(opener) |
| HTTP Error | urllib2.HTTPError | urllib.error.HTTPError |
| URL Error | urllib2.URLError | urllib.error.URLError |