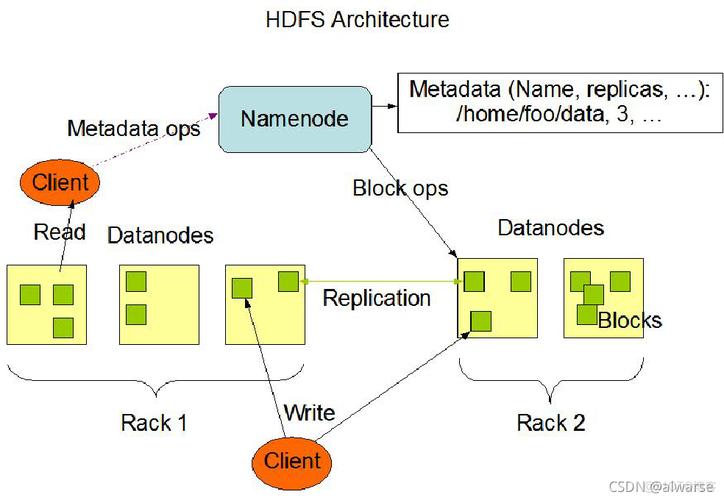

The hadoop jar command is the standard way to run a Java JAR file on a Hadoop cluster. It launches your compiled code with the Hadoop libraries and configuration on the classpath, so the program can reach the Hadoop Distributed File System (HDFS) and submit work to the MapReduce/YARN execution framework.

Let's break it down from the basics to advanced usage.

The Basic Command Structure

The core command is straightforward:

    hadoop jar <your-jar-file.jar> [main-class] [main-args]

- hadoop: The Hadoop command-line interface script.
- jar: The subcommand telling Hadoop to execute a Java JAR file.
- <your-jar-file.jar>: The path to your compiled Java archive (JAR file) containing your MapReduce (or other Hadoop) job.
- [main-class]: The fully qualified name of the class inside your JAR file that contains the public static void main(String[] args) method. This is the entry point for your application. Leave it out if the JAR's manifest already declares a Main-Class; in that case Hadoop uses the manifest entry and treats everything after the JAR path as arguments.
- [main-args]: Any arguments you want to pass to your main method.
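The way the entry point is chosen can be sketched in plain Java. This is a simplified model, not Hadoop's actual source: the class MainClassResolver and its Launch holder are hypothetical names introduced only for illustration. It assumes the rule that a Main-Class entry in the JAR manifest takes precedence, and that the first command-line argument is read as the main class only when no such entry exists.

```java
import java.util.Arrays;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

/** Simplified, hypothetical model of entry-point selection for "hadoop jar". */
final class MainClassResolver {

    /** The class that will be started and the arguments its main() receives. */
    static final class Launch {
        final String mainClass;
        final String[] mainArgs;

        Launch(String mainClass, String[] mainArgs) {
            this.mainClass = mainClass;
            this.mainArgs = mainArgs;
        }
    }

    /**
     * @param manifest     the JAR's manifest, or null if the JAR has none
     * @param argsAfterJar everything typed after the JAR path
     */
    static Launch resolve(Manifest manifest, String[] argsAfterJar) {
        String declared = manifest == null
                ? null
                : manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        if (declared != null) {
            // Manifest wins: every remaining argument is passed to main().
            return new Launch(declared.trim(), argsAfterJar);
        }
        if (argsAfterJar.length == 0) {
            throw new IllegalArgumentException("No Main-Class in manifest and none given");
        }
        // No manifest entry: the first argument names the main class.
        return new Launch(argsAfterJar[0],
                Arrays.copyOfRange(argsAfterJar, 1, argsAfterJar.length));
    }

    public static void main(String[] args) {
        Launch launch = resolve(null, new String[] {"WordCount", "/in", "/out"});
        System.out.println(launch.mainClass + " " + Arrays.toString(launch.mainArgs));
    }
}
```

The practical consequence is that a JAR built with a Main-Class entry and then started with the class name on the command line passes that class name to main as its first argument.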

A Complete Step-by-Step Example

Let's create a simple "Word Count" application and run it.

Step 1: Write the Java Code (WordCount.java)

This is the classic example. It reads text files, splits them into words, counts the occurrences of each word, and writes the output.

    // WordCount.java
    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {

        // Mapper Class
        public static class TokenizerMapper
                extends Mapper<Object, Text, Text, IntWritable> {

            private final static IntWritable one = new IntWritable(1);
            private Text word = new Text();

            public void map(Object key, Text value, Context context
                            ) throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString());
                while (itr.hasMoreTokens()) {
                    word.set(itr.nextToken());
                    context.write(word, one);
                }
            }
        }

        // Reducer Class
        public static class IntSumReducer
                extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable result = new IntWritable();

            public void reduce(Text key, Iterable<IntWritable> values,
                               Context context
                               ) throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        // Main Method
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "word count");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
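To see what the job computes without a cluster, the same tokenize-and-sum logic can be run in memory. The sketch below is a plain-Java stand-in, not part of the Hadoop job: LocalWordCount is a hypothetical helper class, and a sorted map plays the role that the shuffle and the reducer play on the cluster.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

/** Hypothetical in-memory stand-in for the WordCount job, for local experiments. */
final class LocalWordCount {

    /** Splits each line on whitespace (as TokenizerMapper does) and sums per word. */
    static Map<String, Integer> count(Iterable<String> lines) {
        // TreeMap keeps the words sorted, similar to a reducer's sorted output.
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                // Equivalent to emitting (word, 1) and summing in the reducer.
                counts.merge(itr.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts =
                count(Arrays.asList("hello world", "hadoop world hadoop"));
        // Prints: hadoop 2, hello 1, world 2 (one tab-separated pair per line)
        counts.forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

Because summing is associative and commutative, the same reducer class can also serve as the combiner, which is what job.setCombinerClass(IntSumReducer.class) relies on.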

Step 2: Compile and Package the Code

You need Hadoop's libraries on your classpath to compile. The easiest way is to pass the output of the hadoop classpath command to javac.

    # Create a directory for your project
    mkdir wordcount
    cd wordcount

    # Save the code above as WordCount.java

    # Compile the Java file
    # The -cp flag adds all Hadoop libraries to the classpath
    javac -cp "$(hadoop classpath)" WordCount.java

    # Package the compiled .class files into a JAR file
    # No Main-Class entry is written to the manifest, so the main class
    # is named on the hadoop jar command line when the job is run
    jar cvf WordCount.jar WordCount*.class

    # You should now have a WordCount.jar file
    ls -l

Step 3: Prepare Input Data on HDFS

Let's put some sample text files into HDFS.

    # Start Hadoop (if not already running)
    start-dfs.sh
    start-yarn.sh

    # Create an input directory in HDFS
    hdfs dfs -mkdir -p /user/hadoop/input

    # Put some local text files into HDFS
    # For example, if you have files file1.txt, file2.txt in your local directory
    hdfs dfs -put file1.txt /user/hadoop/input/
    hdfs dfs -put file2.txt /user/hadoop/input/

    # Verify the files are in HDFS
    hdfs dfs -ls /user/hadoop/input

Step 4: Run the Job with hadoop jar

Now, execute the JAR file. We need to provide the input and output paths as arguments to our main method.

    # Run the WordCount job
    # The output directory must NOT exist before running the job.
    hadoop jar WordCount.jar WordCount /user/hadoop/input /user/hadoop/output

Explanation of the command:

- hadoop jar WordCount.jar: Execute the WordCount.jar file.
- WordCount: The name of the main class to run.
- /user/hadoop/input: The first argument passed to main, which our code uses as the input path.
- /user/hadoop/output: The second argument passed to main, which our code uses as the output path.

Step 5: Check the Results

After the job completes, you can see the results in the output directory on HDFS.

    # Check the job's output directory
    hdfs dfs -cat /user/hadoop/output/part-r-00000

    # Example output:
    # hadoop 2
    # hello 1
    # world 2
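The part files are plain text with one key and value per line, separated by a tab by default. As a small follow-up, the sketch below ranks the words by count after a part file has been copied to the local disk. TopWords is a hypothetical helper, and the local file path passed to it is an assumption about where you saved the output.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper that ranks "word<TAB>count" lines by descending count. */
final class TopWords {

    static List<Map.Entry<String, Integer>> rank(List<String> lines) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip blank or malformed lines
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            entries.add(new AbstractMap.SimpleEntry<>(word, count));
        }
        // Stable sort: words with equal counts keep their original order.
        entries.sort((a, b) -> b.getValue().compareTo(a.getValue()));
        return entries;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("Usage: java TopWords <local-copy-of-part-file>");
            return;
        }
        List<String> lines = Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8);
        for (Map.Entry<String, Integer> e : rank(lines)) {
            System.out.println(e.getValue() + "\t" + e.getKey());
        }
    }
}
```

A part file can be copied out of HDFS with hdfs dfs -get before running the helper on it.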

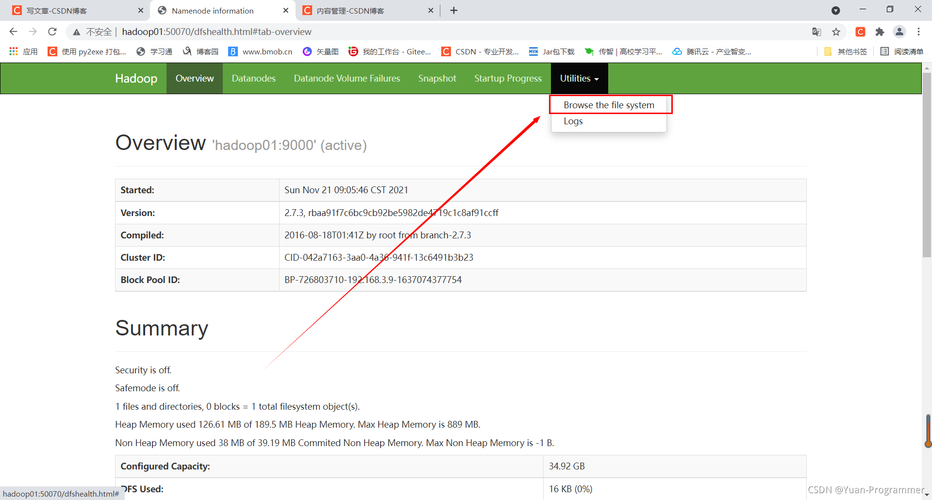

You can also open the YARN ResourceManager web UI (usually at http://<resourcemanager-host>:8088) to see job status, logs, and counters.

Advanced Options and Common Flags

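Job execution is controlled mostly through Hadoop's generic options (-D, -files, -libjars, -archives). They are parsed by GenericOptionsParser, so they only take effect when your driver runs through ToolRunner (that is, it implements the Tool interface), and they must come after the main class but before your application's own arguments. A driver that builds its Configuration directly, like the WordCount example above, would treat them as ordinary arguments. Running hadoop jar with no arguments prints its usage line.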

Here are the most important ones:

Specifying a Different Main Class

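If your JAR contains several classes with a main method, name the one you want as the argument right after the JAR path; no re-packaging is needed. This only works when the JAR's manifest does not declare a Main-Class, because a manifest entry takes precedence.

    hadoop jar MyBigApp.jar com.company.alternative.MainClass [main-args]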

Managing Resources

You can control how much memory and CPU your job uses.

- Memory for Map and Reduce Tasks:

        # Request 2 GB containers for map tasks and 4 GB for reduce tasks
        hadoop jar MyJob.jar MyJob \
          -D mapreduce.map.memory.mb=2048 \
          -D mapreduce.reduce.memory.mb=4096 \
          /input /output

- Number of Map and Reduce Tasks:

        # Run 10 reduce tasks; mapreduce.job.maps is only a hint, because the
        # actual number of map tasks is determined by the input splits
        hadoop jar MyJob.jar MyJob \
          -D mapreduce.job.maps=50 \
          -D mapreduce.job.reduces=10 \
          /input /output
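Where the -D options sit on the command line matters, because option parsing stops at the first argument that is not an option. The following is a deliberately simplified, standalone model of that behavior, not Hadoop's GenericOptionsParser: the class GenericOptionsModel is hypothetical and handles only -D, purely to illustrate why generic options belong before the application arguments.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical, simplified model of leading "-D key=value" option parsing. */
final class GenericOptionsModel {
    final Map<String, String> properties = new LinkedHashMap<>();
    final List<String> remainingArgs = new ArrayList<>();

    static GenericOptionsModel parse(String[] args) {
        GenericOptionsModel parsed = new GenericOptionsModel();
        int i = 0;
        // Consume leading -D options; stop at the first non-option argument.
        while (i < args.length && args[i].startsWith("-D")) {
            String pair;
            if (args[i].equals("-D")) {
                // Form: -D key=value
                if (i + 1 >= args.length) {
                    throw new IllegalArgumentException("-D needs key=value");
                }
                pair = args[i + 1];
                i += 2;
            } else {
                // Form: -Dkey=value
                pair = args[i].substring(2);
                i += 1;
            }
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Expected key=value but got: " + pair);
            }
            parsed.properties.put(pair.substring(0, eq), pair.substring(eq + 1));
        }
        // Everything from the first non-option onward goes to the application.
        parsed.remainingArgs.addAll(Arrays.asList(args).subList(i, args.length));
        return parsed;
    }

    public static void main(String[] args) {
        GenericOptionsModel parsed = parse(
                new String[] {"-D", "mapreduce.job.reduces=10", "/input", "/output"});
        System.out.println(parsed.properties + " " + parsed.remainingArgs);
    }
}
```

In this model, options placed after /input and /output are never parsed and simply arrive as extra application arguments.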

Debugging and Logging

- Verbose Logging: Set the HADOOP_ROOT_LOGGER environment variable to print more detailed client-side logs during execution.

        HADOOP_ROOT_LOGGER=DEBUG,console hadoop jar MyJob.jar MyJob /input /output

- Viewing Job Logs: The standard output and error streams from your mappers and reducers are written to container logs on the NodeManager hosts. With YARN log aggregation enabled, they are collected into HDFS after the application finishes, and you can view them with:

        # Fetch all container logs (stdout, stderr, syslog) for one application
        yarn logs -applicationId <application-id>

(Note: The application ID is printed when the job is submitted and is also shown in the ResourceManager UI. Where the aggregated logs are stored depends on your Hadoop version and configuration.)

Managing JAR Dependencies

If your job depends on other libraries, you have two main options:

- Package Everything in One Fat JAR (Recommended): Use a build tool like Maven or Gradle with a "shade" or "assembly" plugin. This packages all dependencies into a single, executable JAR.

        # Example with Maven (after running 'mvn package')
        hadoop jar target/my-fat-jar-1.0.jar com.company.MyDriver /input /output

- Use the -libjars Option: Pass additional JAR files as a comma-separated list. Hadoop will distribute these JARs to the cluster nodes for your job. Like -D, this is a generic option, so it goes before the application arguments and requires a ToolRunner-based driver.

        hadoop jar MyJob.jar MyJob -libjars /path/to/lib1.jar,/path/to/lib2.jar /input /output

Modern Alternative: yarn jar

For Hadoop 2.x and later, which use YARN (Yet Another Resource Negotiator) as the resource management layer, the yarn jar command runs a JAR in the same way as hadoop jar, and the Hadoop documentation recommends it for launching YARN applications.

    # These two commands do the same thing
    hadoop jar WordCount.jar WordCount /input /output
    yarn jar WordCount.jar WordCount /input /output

In practice, hadoop jar is still widely used and understood, but you might see yarn jar in more modern documentation or scripts.