This guide covers implementing and using the Adaptive Lasso in Python: the underlying theory, a step-by-step implementation from scratch, and a practical example using popular libraries.

What is the Adaptive Lasso?

The Adaptive Lasso is an extension of the Lasso (Least Absolute Shrinkage and Selection Operator) designed to overcome one of the Lasso's main limitations: the Lasso selects the true model consistently only under restrictive conditions on the design matrix, and these conditions often fail when predictors are highly correlated.

The key idea is to use weighted penalties. Variables that are more important (or more likely to be in the true model) are given a smaller penalty, while less important variables are penalized more heavily. With suitable weights, this adaptive weighting gives the method the "oracle" property: asymptotically, it selects the correct variables and estimates their coefficients as well as if the true model had been known from the start.
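For reference, the oracle property can be stated a little more formally. The notation below ($\beta^*$ for the true coefficients, $\mathcal{A}$ for the true support, $\sigma^2$ for the noise variance, $C_{11}$ for the limiting Gram matrix of the truly relevant predictors) is introduced here for illustration only and is not used elsewhere in this guide. The statement holds under suitable conditions on how $\lambda$ scales with $n$.

```latex
\mathcal{A} = \{ j : \beta^*_j \neq 0 \}, \qquad
\hat{\mathcal{A}}_n = \{ j : \hat{\beta}_j \neq 0 \}

% 1. Selection consistency: the estimated support matches the true support
\lim_{n \to \infty} P\big( \hat{\mathcal{A}}_n = \mathcal{A} \big) = 1

% 2. Asymptotic normality on the true support
\sqrt{n}\, \big( \hat{\beta}_{\mathcal{A}} - \beta^*_{\mathcal{A}} \big)
  \xrightarrow{d} \mathcal{N}\big( 0,\ \sigma^2 C_{11}^{-1} \big),
\qquad C_{11} = \lim_{n \to \infty} \tfrac{1}{n} X_{\mathcal{A}}^\top X_{\mathcal{A}}
```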

How it Works: The Two-Stage Process

The Adaptive Lasso is typically implemented in two stages:

Stage 1: Obtain Initial Weights

- First, we run a "preliminary" regression to get initial estimates of the coefficients ($\hat{\beta}_j^{(0)}$).

- A common choice for this preliminary estimator is the Ridge Regression (L2 penalty), which is known to perform well even with correlated predictors and doesn't perform variable selection itself.

- We then use these preliminary estimates to calculate weights for each variable $j$: $w_j = \frac{1}{|\hat{\beta}_j^{(0)}|^\gamma}$

- Here, $\gamma > 0$ is a tuning parameter. A common choice is $\gamma = 1$. Notice that variables with larger preliminary estimates get smaller weights.
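To make the weighting concrete, here is a minimal sketch of the Stage 1 weight formula. The helper name `adaptive_weights`, the `eps` guard against division by zero, and the preliminary estimates are all made up for illustration; the point is only to show how $\gamma$ changes the contrast between large and small coefficients.

```python
import numpy as np


def adaptive_weights(beta_init, gamma=1.0, eps=1e-5):
    """Hypothetical helper: w_j = 1 / (|beta_j|^gamma + eps).

    eps is an assumption added here so that a preliminary estimate of
    exactly zero yields a very large (but finite) weight.
    """
    return 1.0 / (np.abs(beta_init) ** gamma + eps)


# Made-up preliminary estimates: two clearly relevant, two near or at zero
beta_init = np.array([3.0, 0.05, -1.5, 0.0])

for gamma in (0.5, 1.0, 2.0):
    weights = adaptive_weights(beta_init, gamma)
    print(f"gamma={gamma}: {np.round(weights, 3)}")
```

Larger values of $\gamma$ widen the gap between the weights of large and small preliminary estimates, so the penalty discriminates more aggressively between them.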

Stage 2: Weighted Lasso

- We then perform a standard Lasso regression, but we modify the penalty term to include the weights $w_j$.

- The optimization problem becomes: $\min_{\beta} \left\{ \frac{1}{2n} \sum_{i=1}^{n} \left( y_i - \sum_{j=1}^{p} x_{ij} \beta_j \right)^2 + \lambda \sum_{j=1}^{p} w_j |\beta_j| \right\}$

- This weighted penalty shrinks the coefficients of less important variables (those with small preliminary estimates and thus large weights $w_j$) all the way to zero, effectively performing variable selection. Meanwhile, it shrinks the coefficients of more important variables (small $w_j$) less, preserving them in the model.
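The weighted problem in Stage 2 does not need a special solver. Substituting $\tilde{\beta}_j = w_j \beta_j$ turns it into a standard Lasso on the rescaled columns $x_j / w_j$, and dividing the solution by $w_j$ recovers $\beta_j$. The sketch below checks this numerically with a small coordinate-descent solver. The solver, the data, and the weights are illustrative assumptions (no intercept, made-up weights), not the implementation used later in this guide.

```python
import numpy as np


def soft_threshold(z, t):
    """Soft-thresholding operator: sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def weighted_lasso_cd(X, y, lam, weights, n_iter=500):
    """Cyclic coordinate descent (illustrative, no intercept) for
    (1/2n) * ||y - X b||^2 + lam * sum_j weights[j] * |b_j|."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n   # (1/n) * ||x_j||^2 for each column
    residual = y.copy()                 # equals y - X @ beta throughout
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that excludes feature j
            rho = X[:, j] @ residual / n + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, lam * weights[j]) / col_sq[j]
            residual += X[:, j] * (beta[j] - new_bj)
            beta[j] = new_bj
    return beta


rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
y = X @ np.array([3.0, 0.0, -1.5, 0.0, 0.0]) + 0.5 * rng.standard_normal(200)
weights = np.array([0.3, 10.0, 0.7, 10.0, 10.0])  # made-up weights for illustration
lam = 0.05

# Solve the weighted problem directly
direct = weighted_lasso_cd(X, y, lam, weights)

# Rescaling trick: plain Lasso (unit weights) on X_j / w_j, then map back
rescaled = weighted_lasso_cd(X / weights, y, lam, np.ones(5)) / weights

print("direct:  ", np.round(direct, 3))
print("rescaled:", np.round(rescaled, 3))
print("match:", np.allclose(direct, rescaled, atol=1e-6))
```

The practical consequence is that any off-the-shelf Lasso solver can be reused for Stage 2 by rescaling the feature columns before fitting and rescaling the coefficients afterwards.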

Implementation from Scratch

This implementation will follow the two-stage process using numpy and scipy.

import numpy as np
from scipy.optimize import minimize


def adaptive_lasso(X, y, gamma=1.0, lambda_ridge=1.0, n_lambdas=100, max_iter=1000):
    """
    Implements the Adaptive Lasso in two stages.

    Stage 1: Ridge regression to obtain initial coefficients and weights.
    Stage 2: Weighted Lasso regression using the calculated weights.

    Parameters:
    -----------
    X : ndarray of shape (n_samples, n_features)
        The input data matrix.
    y : ndarray of shape (n_samples,)
        The target vector.
    gamma : float, default=1.0
        The exponent for the weights (w_j = 1 / |beta_j^0|^gamma).
    lambda_ridge : float, default=1.0
        The regularization strength for the initial Ridge regression.
    n_lambdas : int, default=100
        The maximum number of lambda values to try for the Lasso path.
    max_iter : int, default=1000
        Maximum number of iterations for the Lasso solver.

    Returns:
    --------
    beta_hat : ndarray of shape (n_features,)
        The final estimated coefficients from the Adaptive Lasso.
    """
    n_samples, n_features = X.shape

    # --- Stage 1: Obtain initial weights using Ridge Regression ---
    # Add intercept term to X for Ridge regression
    X_intercept = np.column_stack([np.ones(n_samples), X])

    # Ridge regression closed-form solution: (X'X + lambda*I)^-1 X'y
    # We regularize only the coefficients, not the intercept
    identity = np.eye(n_features + 1)
    identity[0, 0] = 0  # Do not penalize the intercept

    # Solve the linear system directly; this is more stable than forming the inverse
    ridge_coeffs = np.linalg.solve(
        X_intercept.T @ X_intercept + lambda_ridge * identity, X_intercept.T @ y
    )

    # Extract coefficients for features (excluding the intercept)
    beta_ridge = ridge_coeffs[1:]

    # Calculate weights. Add a small epsilon to avoid division by zero.
    epsilon = 1e-5
    weights = 1.0 / (np.abs(beta_ridge) ** gamma + epsilon)

    # --- Stage 2: Solve the Weighted Lasso ---
    # The L1 penalty is not differentiable at zero, so a smooth solver applied to it
    # directly almost never returns exact zeros. We split beta = u - v with u, v >= 0,
    # which turns the penalty into the linear term lambda * sum(w_j * (u_j + v_j)) and
    # lets L-BFGS-B pin coefficients exactly at the zero bound.
    def lasso_objective(params, lambda_lasso):
        # params = [intercept, u (n_features values), v (n_features values)]
        u, v = params[1:n_features + 1], params[n_features + 1:]
        residuals = y - params[0] - X @ (u - v)
        loss = np.sum(residuals ** 2) / (2 * n_samples)
        l1_penalty = lambda_lasso * np.sum(weights * (u + v))  # Don't penalize intercept
        # Analytic gradient with respect to [intercept, u, v]
        grad_beta = -(X.T @ residuals) / n_samples
        grad = np.concatenate(([-np.mean(residuals)],
                               grad_beta + lambda_lasso * weights,
                               -grad_beta + lambda_lasso * weights))
        return loss + l1_penalty, grad

    # Initial guess for coefficients (start with Ridge solution)
    initial_params = np.concatenate(([ridge_coeffs[0]],
                                     np.maximum(beta_ridge, 0),
                                     np.maximum(-beta_ridge, 0)))
    bounds = [(None, None)] + [(0, None)] * (2 * n_features)

    # For a real application, choose lambda by cross-validation. For simplicity here,
    # we increase lambda (a larger lambda gives a sparser solution) until at most
    # half of the coefficients remain non-zero.
    target_sparsity = n_features // 2
    lambda_lasso = 1e-3
    for _ in range(n_lambdas):
        res = minimize(lasso_objective, initial_params, args=(lambda_lasso,),
                       method='L-BFGS-B', jac=True, bounds=bounds,
                       options={'maxiter': max_iter})
        beta_lasso = res.x[1:n_features + 1] - res.x[n_features + 1:]
        non_zero_count = np.sum(np.abs(beta_lasso) > 1e-6)
        if non_zero_count <= target_sparsity:
            print(f"Found lambda: {lambda_lasso:.4f}, Non-zero coefficients: {non_zero_count}")
            break
        lambda_lasso *= 1.2  # Increase lambda to shrink more coefficients to zero

    if non_zero_count > target_sparsity:
        print(f"Warning: Could not find lambda to achieve target sparsity. Final non-zero count: {non_zero_count}")

    return beta_lasso  # Return coefficients for features only

# --- Example Usage ---
if __name__ == '__main__':
    np.random.seed(42)

    # Generate synthetic data (independent standard-normal features)
    n_samples, n_features = 100, 10
    X = np.random.randn(n_samples, n_features)

    # Create a true model with only 3 non-zero coefficients
    true_coef = np.zeros(n_features)
    true_coef[[0, 2, 5]] = [3.0, -1.5, 2.0]

    # Create y with some noise
    y = X @ true_coef + np.random.normal(0, 1, n_samples)

    print("True non-zero coefficients are at indices:", np.where(true_coef != 0)[0])

    # Run Adaptive Lasso
    final_coef = adaptive_lasso(X, y, gamma=1.0)

    print("\nAdaptive Lasso estimated coefficients:")
    print(final_coef)

    # Check which features were selected
    selected_features = np.where(np.abs(final_coef) > 1e-6)[0]
    print("\nSelected feature indices:", selected_features)

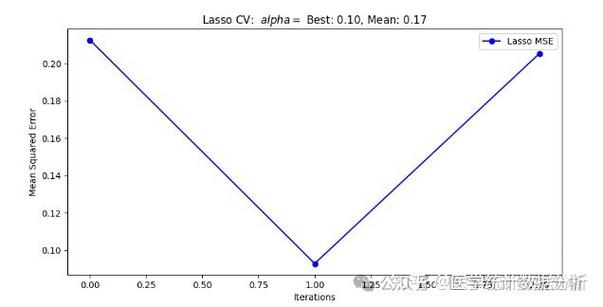

Practical Implementation using scikit-learn

While the above is great for understanding, in practice, you'd use scikit-learn. It provides tools to easily build a pipeline for the two-stage process.

The key is to use RidgeCV for the first stage to automatically select the best lambda_ridge via cross-validation, and then LassoCV for the second stage to select the best lambda_lasso. Note that scikit-learn calls both of these regularization strengths alpha rather than lambda.

import numpy as np
from sklearn.linear_model import RidgeCV, LassoCV
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline


def adaptive_lasso_sklearn(X, y, gamma=1.0, cv=5):
    """
    Implements the Adaptive Lasso using scikit-learn.

    Parameters:
    -----------
    X : ndarray of shape (n_samples, n_features)
        The input data matrix.
    y : ndarray of shape (n_samples,)
        The target vector.
    gamma : float, default=1.0
        The exponent for the weights (w_j = 1 / |beta_j